FPGAs in Cloud Computing

The availability of Field Programmable Gate Arrays (FPGAs) in cloud datacenters has opened up new ways to improve application performance by letting users design custom hardware accelerators and realize them on the FPGAs. Unfortunately, the ability of users to implement (almost) any logic function they want on the FPGAs also provides unique avenues for malicious attacks on other cloud users’ applications and data, and on the cloud infrastructure itself. The goal of this blog post is to draw attention to the recent introduction of FPGAs in cloud datacenters and to highlight current research opportunities and challenges, with a special focus on the security of FPGA-accelerated cloud server architectures.

The emergence of public, FPGA-accelerated cloud computing started around 2016 with the introduction of FPGA-accelerated virtual machine instances, so-called F1 instances, in Amazon Web Services. In the subsequent years, a number of cloud providers have begun to offer public access to FPGA-accelerated instances. Today, many different providers let users rent FPGAs: Xilinx Virtex UltraScale+ FPGAs are available from Amazon AWS, Huawei Cloud, and Alibaba Cloud; Xilinx Kintex UltraScale FPGAs are available from Baidu Cloud; Xilinx Alveo accelerators are available from Nimbix; Intel Arria 10 FPGAs are available from Alibaba Cloud and OVH; and older Intel Stratix V FPGAs are available at the Texas Advanced Computing Center (TACC). These offerings keep expanding, but most public cloud infrastructures include FPGAs from one of the two major vendors: Xilinx (now part of AMD) or Altera (now part of Intel). Other vendors, such as Lattice Semiconductor and Achronix, also make FPGAs, although these are not commonly available in public clouds. FPGAs have also been used in datacenters where users do not have direct access to them. One well-known example is Microsoft’s Catapult project, which used FPGAs to accelerate web search, and its follow-up, the Microsoft Brainwave project, which accelerates AI. However, this blog post will focus only on public cloud computing where end users have direct access to the FPGAs.

Public FPGA-Accelerated Cloud Computing Architectures

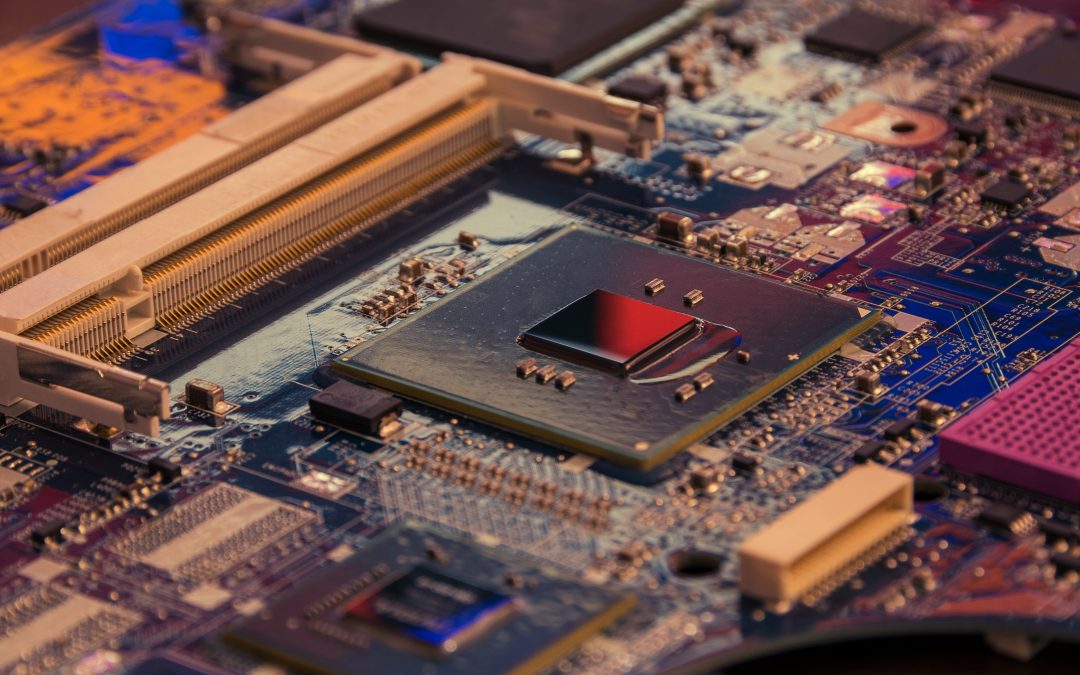

This blog post focuses on public, FPGA-accelerated cloud computing where users pay to access the hardware resources in a shared datacenter. The dominant architecture in this setting is one where the FPGA is an accelerator attached to the main processor. The cloud servers host a number of FPGA boards, and a user wishing to use an FPGA is assigned a virtual machine that runs on the server and interacts with the one or more FPGAs assigned to it. Each FPGA board has an FPGA chip as well as its own Dynamic Random Access Memory (DRAM), which is separate from the server’s DRAM. The hypervisor manages access to the FPGAs and assigns one or more FPGAs to a user. The FPGA boards use the PCIe bus to communicate with the processor, and all communication is processor centric: the FPGA is an accelerator managed by the processor and has, for example, no direct access to the network. The FPGAs can often perform Direct Memory Access (DMA) to copy data to or from the host’s memory. They can also communicate directly with each other via PCIe peer-to-peer communication (only for FPGAs that are assigned to the same user). Other architectures are possible, but are not available today in public clouds.

One alternative is the bump-on-the-wire architecture, where the FPGA has direct access to the network. The advantage is that the FPGA can process network packets directly. However, giving users direct access to an FPGA that can manipulate network packets is challenging, especially from a security perspective, as users may be able to sniff network traffic or generate malicious packets if the network is not properly isolated and controlled. Nevertheless, FPGA processing of network packets is already done in other settings, such as private clouds, where the server’s owner controls what the FPGA does.

A third possible architecture is an FPGA directly integrated with the CPU. Already, vendors such as Xilinx offer development boards with ARM processors embedded alongside the FPGA. Moreover, with the recent acquisitions of Xilinx by AMD and of Altera by Intel, it can be expected that future server architectures may also include processors and FPGAs on the same motherboard, possibly in the same coherence domain with direct access to the server’s memory, or even with registers shared between the CPU and FPGA.

It is worth noting that there also exist coarse-grained reconfigurable architectures (CGRAs), which are similar to FPGAs except that the basic reconfigurable unit is an arithmetic logic unit (ALU) rather than a lookup table (LUT). As with FPGAs, there could be many ways to connect them to the system: as accelerators, integrated with the CPU, etc.

Security Challenges of Low-Level Access to FPGAs in the Cloud

Security of FPGAs in the cloud is a new challenge, as users have low-level access to program the FPGAs with (almost) any designs they wish. In particular, malicious users can create various sensors that would otherwise be neither possible nor accessible to users in CPU- or GPU-based cloud computing. These sensors can be used to leak information or to spy on the operation of the datacenters or of other cloud users.

In the single-tenant setting, users can create ring oscillator (RO), time-to-digital converter (TDC), or DRAM-based sensors that can observe voltage or thermal changes on or near the FPGA. Typically, FPGA logic is designed so that it hides small variations in voltage and temperature, just as CPUs or GPUs executing on the server are not impacted by such small changes. However, special RO, TDC, or DRAM-based sensors can let users directly observe these small voltage or thermal variations. For example, an RO is a loop of an odd number of inverters. The frequency at which the signal in the loop oscillates depends directly on the voltage and temperature of the FPGA. By instantiating an RO in the FPGA, a user can directly estimate the voltage or temperature by looking at changes in the RO’s frequency. TDCs are another possible sensor circuit; they measure signal propagation delay and can likewise be used for voltage or temperature measurements. Such RO or TDC sensors could possibly even be used to leak information across different FPGAs through voltage and power channels. In CPUs or GPUs, users are not able to modify the hardware configuration to create such sensors.
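To make the RO idea concrete, here is a minimal Python sketch of how an RO-based sensor reading could be modeled. The relation f = 1 / (2 · N · t_d) for an ideal ring of N inverters with per-stage delay t_d is standard; everything else is an assumption made for illustration, including the linear voltage-to-delay model, the nominal values, and the function names. It is a toy model, not a description of any real FPGA or of any published attack.

```python
def ro_frequency_hz(n_inverters: int, delay_per_stage_s: float) -> float:
    """Ideal ring-oscillator frequency: one period is two trips around the loop."""
    if n_inverters < 1 or n_inverters % 2 == 0:
        raise ValueError("a ring oscillator needs an odd number of inverters")
    return 1.0 / (2.0 * n_inverters * delay_per_stage_s)


def stage_delay_s(voltage_v: float,
                  nominal_delay_s: float = 100e-12,
                  nominal_voltage_v: float = 0.85,
                  sensitivity: float = 1.0) -> float:
    """Toy model (assumption): stage delay grows linearly as the supply droops."""
    droop = (nominal_voltage_v - voltage_v) / nominal_voltage_v
    return nominal_delay_s * (1.0 + sensitivity * droop)


def ro_count(voltage_v: float, n_inverters: int = 5, window_s: float = 1e-6) -> int:
    """Oscillations a counter would record during one fixed measurement window."""
    freq = ro_frequency_hz(n_inverters, stage_delay_s(voltage_v))
    return int(freq * window_s)


if __name__ == "__main__":
    # Hypothetical supply voltages: a lower voltage yields a lower count.
    for v in (0.85, 0.83, 0.80):
        print(f"{v:.2f} V -> count {ro_count(v)}")
```

The sensor output is just the counter value: the user never measures voltage directly, but infers relative changes from how the count moves between measurement windows.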

Further, with low-level access to control the DRAM on the FPGA boards, users can develop circuits that measure the decay of data in DRAM cells when DRAM refresh is disabled. In CPUs or GPUs, users have no interface to control the refresh, but with FPGAs they can load and unload the DRAM controller to emulate disabling the DRAM refresh. The rate of DRAM decay is known to depend on temperature and can thus be used as a simple thermal sensor. With such sensors, users can observe what is going on in the datacenter, for example by monitoring thermal changes, or can possibly leak information between users through thermal covert channels. Giving each user dedicated FPGAs does not prevent information leaks, since other users of FPGAs on the same server can create these sensors, which are not possible in CPU- or GPU-based cloud computing.
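The DRAM decay measurement can be illustrated with a small Python simulation. This is only a sketch under stated assumptions: the per-cell retention times are drawn from a made-up log-normal distribution, and the rule that retention halves for every 10 °C rise is a rough illustrative model, not a measured property of any particular DRAM part. The function names and parameters are hypothetical.

```python
import random


def retention_time_s(temp_c: float, base_retention_s: float,
                     ref_temp_c: float = 45.0,
                     halving_step_c: float = 10.0) -> float:
    """Toy model (assumption): retention halves per halving_step_c of warming."""
    return base_retention_s * 2.0 ** (-(temp_c - ref_temp_c) / halving_step_c)


def count_decayed_cells(temp_c: float, wait_s: float,
                        n_cells: int = 10_000, seed: int = 0) -> int:
    """Simulate: write known data, skip refresh for wait_s, count lost cells."""
    rng = random.Random(seed)  # fixed seed = the same simulated DRAM cells
    decayed = 0
    for _ in range(n_cells):
        # Hypothetical per-cell retention time (seconds) at the reference temperature.
        base = rng.lognormvariate(2.0, 1.0)
        if retention_time_s(temp_c, base) < wait_s:
            decayed += 1
    return decayed


if __name__ == "__main__":
    for temp in (40.0, 50.0, 60.0):
        print(f"{temp:.0f} C -> {count_decayed_cells(temp, wait_s=5.0)} decayed cells")
```

In this model the number of decayed cells after a fixed wait grows with temperature, which is why the count can serve as a coarse thermometer.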

Another low-level interface that users can access is the PCIe interface. Although the FPGA “shell” interface blocks direct access to PCIe, FPGA users can still create circuits that generate fast PCIe traffic, creating contention on the PCIe links. Recent work has shown that PCIe contention can help users find FPGAs co-located on the same server.
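As a rough illustration of how contention could reveal co-location, the Python sketch below compares bandwidth samples taken on one FPGA while a second FPGA is idle against samples taken while the second FPGA saturates its PCIe link. The sample values, the 20% threshold, and the function names are all hypothetical; this is not the method of the cited work, only a sketch of the underlying comparison.

```python
from statistics import median


def bandwidth_drop(baseline_gbps: list[float], stressed_gbps: list[float]) -> float:
    """Relative drop in median bandwidth while the other FPGA generates traffic."""
    base = median(baseline_gbps)
    return (base - median(stressed_gbps)) / base


def likely_colocated(baseline_gbps: list[float], stressed_gbps: list[float],
                     min_drop: float = 0.2) -> bool:
    """Flag co-location when the drop exceeds a (hypothetical) threshold."""
    return bandwidth_drop(baseline_gbps, stressed_gbps) >= min_drop


if __name__ == "__main__":
    quiet = [10.0, 10.2, 9.9]        # made-up samples, other FPGA idle
    contended = [6.1, 5.8, 6.0]      # made-up samples, other FPGA stressing PCIe
    print(likely_colocated(quiet, contended))
```

Using the median rather than the mean keeps a single noisy sample from triggering a false match.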

In the multi-tenant setting, the above security challenges are compounded by new issues that arise when users share the same FPGA fabric. Here, even more information leaks are possible: crosstalk between wires can be used to leak information from one user to another, and RO and TDC sensors can be used to measure voltage changes as other users operate, which can in turn be used to leak secret cryptographic keys or machine learning models from other users.
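To show why voltage-dependent sensor readings amount to an information channel, the following Python sketch turns a trace of RO counter values into bits. It assumes, purely for illustration, that activity by another tenant lowers the supply voltage and therefore the RO count, that each bit spans a fixed number of samples, and that a midpoint threshold separates the two levels. The trace values and function name are made up.

```python
def decode_bits(ro_counts: list[int], samples_per_bit: int) -> list[int]:
    """Threshold windowed RO counts: a low count (voltage droop) is read as 1."""
    threshold = (min(ro_counts) + max(ro_counts)) / 2.0
    bits = []
    for start in range(0, len(ro_counts) - samples_per_bit + 1, samples_per_bit):
        window = ro_counts[start:start + samples_per_bit]
        mean = sum(window) / len(window)
        bits.append(1 if mean < threshold else 0)
    return bits


if __name__ == "__main__":
    # Hypothetical trace: high counts = quiet fabric, low counts = neighbor active.
    trace = [1000, 999, 1001, 950, 948, 951, 1000, 1002, 999, 949, 950, 952]
    print(decode_bits(trace, samples_per_bit=3))
```

Averaging over several samples per bit is a simple way to tolerate measurement noise at the cost of a lower bit rate.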

Future of FPGA-Accelerated Cloud Computing Architectures and Security

Current FPGA-accelerated cloud infrastructures effectively take existing FPGA boards, put them in servers, and make them available to end users. This has allowed a number of cloud providers to deploy FPGAs in their datacenters quickly. However, there are many opportunities for improving the architectures at all levels of the hardware and software stack: from new hardware designs (e.g., new CPU integration of FPGAs), to system designs (e.g., selecting bump-on-the-wire vs. accelerator type), to software and programming (e.g., use of HLS or new abstractions). Further, security remains an open challenge, since low-level access to FPGA hardware lets malicious users create new sensor circuits that could contribute to information leaks.

Overall, the availability of FPGAs in cloud datacenters has opened up unprecedented levels of application flexibility and performance. But FPGAs in the cloud also provide unique avenues for malicious attacks on other cloud users or the infrastructure that are not possible with CPU- or GPU-based cloud computing. Security remains a challenge: the architectures have to balance the low-level access that allows users to create custom, powerful accelerators against malicious users’ ability to abuse that access to create different sensors and leak information or attack the infrastructure.

About the author: Jakub Szefer is an Associate Professor of Electrical Engineering at Yale University where he leads the Computer Architecture and Security Laboratory (CASLAB). His research interests broadly encompass architecture and hardware security of computing systems.

Disclaimer: These posts are written by individual contributors to share their thoughts on the Computer Architecture Today blog for the benefit of the community. Any views or opinions represented in this blog are personal, belong solely to the blog author and do not represent those of ACM SIGARCH or its parent organization, ACM.