In this blog article we touch on the subject of architectural evaluation methodology. We interviewed several experts and collected their opinions on this subject. While everyone raises unique points, there seems to be some consensus.

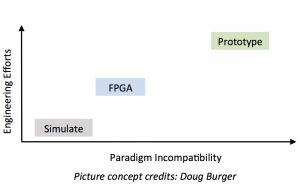

The picture above is a good takeaway. If your idea has good paradigm compatibility, then simulate! Yes, some revolutionary ideas can have good paradigm compatibility (e.g. SMT). Of course, be careful about your simulator configuration and validate it against ground truth. If your idea requires a software overhaul, consider taking the effort to build an FPGA prototype. FPGAs will provide the speed you need to run the new software stack. If your idea is incompatible with conventional paradigms or has components which today’s digital circuits can’t mimic, go forward and build a prototype. Show the faith and put in the effort! Even then, be smart about choosing which components of your system to prototype and which components can be simulated. Minimize the pain. Hopefully the open-source hardware movement will be one way to minimize the pain.

*Disclaimer: The paragraph above reflects my own views, based on expert opinions and my own experience. It does not reflect any one expert’s opinion.

When to Prototype? When to Simulate? When to Emulate on FPGAs?

Snippets from experts in alphabetical order: Todd Austin, David Brooks, Doug Burger, Lieven Eeckhout, Babak Falsafi, Karu Sankaralingam, Michael Taylor, David Wentzlaff.

Todd Austin “I will rephrase the question to – when can I not simulate? Prototyping is expensive in terms of time, money and opportunity costs. One could potentially invest the time in other innovative research. It is not clear what can be learned by fabricating ASICs which cannot be learned from just completing design-time backend-placement – the last step before fabbing an ASIC. Sometimes, the outcome of an ASIC is a validation of backend tools, which isn’t very valuable.

So, when can I not simulate? When there are parts of your system which are not 100% digital. For example our Razor project taped out a chip to test metastability issues, and we recently taped out the A2 malicious circuit chip to test analog attacks. Another example is your compute cache work which does in-cache gymnastics on bit-lines. Even in these scenarios you only need to fabricate a piece of the system which actually has the mixed-signal characteristics – for instance in Razor, we did not fabricate a complex multi-processor.

Beyond providing a window into the analog portions of your design, prototyping has the added advantage of high visibility. Prototyped projects generate a lot of attention and become a lightning rod of interest – so if you do go the route of building a chip, there is a good payback in project visibility for the expensive cost.

An FPGA as an ASIC evaluation platform is not particularly useful because it does not provide any clear insights about how an ASIC would perform, in terms of critical path delays and overall power. But I’m still a big fan of FPGAs, because they are useful to speed up simulations and design prototypes, when you really need the operational speed.”

David Brooks “There are at least three scenarios where prototyping can help. First, when the research includes a new circuit (e.g. mixed signal analog-digital) or operating paradigm (e.g. near-threshold computing) in which the physical characteristics are hard to capture in high-speed, high-fidelity simulations. Such ideas are best evaluated in prototypes and ideally lend themselves to publications in both the VLSI and computer architecture communities. A circuit precursor shouldn’t negate the architectural contributions, in fact it makes them more tangible. But it isn’t necessary to build a Xeon processor. A small chip focused on the novel circuit component is sufficient and simulations can bridge the gap to larger systems. The second scenario is when the research has a critical software component. As examples, recall the RAW and TRIPS projects from MIT and UT-Austin respectively. These architectures required new OS and compiler infrastructure. It is hard to motivate software researchers to write code for a machine that doesn’t (and may never) exist. Of course, the added benefit is that the prototype will be orders of magnitude faster than simulations. Third, when researchers contemplate a start-up or other tech transfer. The recent surge in silicon startups is a boon for the computer architecture community. Silicon prototyping is useful to transition technology from a research lab to a start-up. Investors want to see something tangible and proof that the team can take ideas to the next level. Prototyping provides credibility and visibility, and Berkeley’s succession of RISC-V chips is a great example of this.

These are three scenarios when prototyping can make a big difference. Otherwise simulate! Simulations are great for getting ideas out in a rapidly moving field or evaluating ideas which build on top of well understood baselines. Consider simultaneous multi-threading. The intuition behind the idea was backed up with simulations. Although the idea was revolutionary, it built on top of a well-known micro-architecture so industry practitioners could immediately see the value. Another scenario where simulation is necessary is where the core technology (e.g. 3D stacking or other advanced packaging technologies) is not mature enough to be available.

Of the three scenarios described, FPGAs can be quite useful for the second scenario – new architectures that also require an overhaul of the software stack. If we were to re-do the TRIPS project with today’s available high-end FPGAs, an FPGA prototype may be sufficient. FPGAs are perhaps not that useful for startups, because they often can’t provide power-performance quality of design metrics equivalent to ASICs. They are least useful for the first scenario because it is hard to emulate physical characteristics in an FPGA.

As the Catapult project from Microsoft shows, high-end FPGAs are making their way into massive datacenter deployments. Amazon’s EC2 now offers an FPGA instance. These projects open up enormous opportunity to prototype ideas at scale for cloud workloads, which has traditionally been impossible for most researchers due to the staggering cost of building ASICs for 200-600 mm² dies. On the other hand, small ASICs targeting mobile/IoT systems are well within the capabilities of most academic groups.”

Doug Burger “It’s not just simulation or prototyping. There are three buckets: modelling, simulation, and prototyping. In many scenarios modelling is sufficient – think how many times you have used Amdahl’s law or Little’s law. However, as system complexity grows, simulations become more important. Simulations are really useful when the paradigm is well understood and researchers have a good mental model. SimpleScalar is a great example. Everyone knew how an out-of-order core works. Simulations were good to evaluate techniques which extend it or improve it.

Final bucket: prototyping. Prototyping is useful for several reasons, primarily because it lowers the level of abstraction. First, to capture lower-level RTL complexity or physical design speed or power or other design aspects which are “abstracted away” in simulators. Second, training of students and faculty. Prototyping gives a deep understanding and researchers develop an intuition for future design: what will work well in hardware. Third, the pressure to tape out leads to rigorous verification of the design. Fourth, understanding the phenomena at scale. Prototyping produces fast hardware on which you can run complex software. This is essential for the modern, beyond-SPEC world. Further, prototyping allows you to study large scale distributed systems. The Catapult project distributed hardware prototypes over 1632 servers, in a real datacenter. Physical deployment at scale brings forth interesting challenges and problems which can’t be understood by using single node systems or mimicking a small number of nodes. Simulations are too slow to mimic large scale. Fifth, prototyping builds confidence and trust, and leads the way to commercial impact. This is especially necessary for weird, unconventional architectures where there is a fear of not knowing the unknowns.”
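As a small illustration of the modelling bucket Burger describes, the two laws he names can be evaluated in a few lines. This is only a sketch: the function names and the example numbers (an accelerator that speeds up 90% of the runtime by 20x, a 100 ns memory latency, a 64 bytes/ns bandwidth target, 64-byte cache lines) are assumptions chosen for illustration, not figures from the interviews.

```python
def amdahl_speedup(fraction_improved: float, factor: float) -> float:
    """Amdahl's law: overall speedup when `fraction_improved` of the
    original execution time is accelerated by `factor`."""
    if not 0.0 <= fraction_improved <= 1.0:
        raise ValueError("fraction_improved must be in [0, 1]")
    if factor <= 0.0:
        raise ValueError("factor must be positive")
    return 1.0 / ((1.0 - fraction_improved) + fraction_improved / factor)


def little_in_flight(throughput: float, latency: float) -> float:
    """Little's law: average items in flight = throughput x average latency."""
    return throughput * latency


# Hypothetical accelerator: 90% of the runtime becomes 20x faster.
speedup = amdahl_speedup(0.9, 20.0)

# Hypothetical memory system: sustain 64 bytes/ns at 100 ns latency.
bytes_in_flight = little_in_flight(64.0, 100.0)
outstanding_lines = bytes_in_flight / 64  # assuming 64-byte cache lines

print(f"overall speedup: {speedup:.2f}x")
print(f"cache lines that must be in flight: {outstanding_lines:.0f}")
```

Even this back-of-the-envelope model shows why the untouched 10% of the runtime caps the overall gain below 10x, and how many outstanding requests the memory system would need to support, before any simulator is written.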

Lieven Eeckhout “There is no single tool or methodology that fits all needs. We need to pick the right tool for the job. For some experiments, this may be cycle-accurate simulation. For others, this may be hardware prototyping. For yet other experiments, this may be analytical modeling. The key point is to make sure the methodology is appropriate and drives the architect in the right direction. An inappropriate methodology may lead to misleading or even incorrect conclusions.

For chip-level or system-level performance evaluation, I’d argue for raising the level of abstraction in architectural simulation. Cycle-accurate simulation is simply too slow to simulate the entire chip or system. Ironically, the level of detail gets in the way of accuracy, i.e., because the simulator is so detailed, it is extremely slow and hence one cannot use the tool to simulate large systems nor can one simulate a representative long-running workload. Hardware prototyping using FPGAs enables modeling large systems at a sufficiently high simulation speed; however, simulator development and setup time may be impractical.

Raising the level of abstraction in architectural simulation has the potential to achieve high accuracy for modeling large systems at sufficiently high speed, while keeping development cost modest. By combining the strengths of analytical modeling with the strengths of architectural simulation, one can achieve a powerful hybrid modeling approach that enables the architect to quickly explore the design space of increasingly complex systems. This has proven to be a useful methodology for modeling multi/many-core systems. Moving forward, I believe it will be an indispensable method in the architect’s toolbox to model future heterogeneous SoCs with general-purpose multicore processors along with a set of integrated accelerators.”

Babak Falsafi “The days of sticking to one simulator and one set of workloads are over (used to be SimpleScalar and SPEC, now gem5 and SPEC/PARSEC). The post-Dennard, post-Moore era calls for using the right tools at the right time. To make a strong case for a design we need to pick the right set of tools to measure performance, power and area.

Profile-based sampling (common practice) works for user-level apps with distinct phases (e.g., DNNs, graph processing). Workloads with deep software stacks and frequent interaction among software layers not only require much larger measurement windows (e.g., TPC or server workloads) but also are often not phase-based. One practical way of extracting a representative measurement from them is statistical sampling (e.g., SMARTS).

With FPGAs launched in the mainstream with enhanced toolchains and a development ecosystem on the one hand, and open-source technologies, on the other, we will be relying more than ever on FPGAs in design evaluation. Fabbing chips will remain a niche with limited access only to those (in academia) who have the budgets and resources for them.”
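To make the statistical sampling idea Falsafi mentions a little more concrete, here is a minimal sketch of systematic sampling with a confidence interval. It is written in the spirit of that approach and is not the SMARTS implementation: the per-unit CPI values are synthetic, the helper names are hypothetical, and a real framework also has to deal with warming microarchitectural state between measured units, which this sketch ignores.

```python
import math
import random
import statistics


def required_sample_size(cv: float, rel_error: float, z: float = 1.96) -> int:
    """Measurement units needed so the estimated mean lies within
    `rel_error` of the true mean at the confidence level implied by `z`,
    given the coefficient of variation `cv` of the metric."""
    return math.ceil((z * cv / rel_error) ** 2)


def systematic_sample(values, period, offset=0):
    """Pick every `period`-th measurement unit, starting at `offset`."""
    return values[offset::period]


def estimate_mean(sample, z: float = 1.96):
    """Return (sample mean, half-width of the confidence interval)."""
    mean = statistics.fmean(sample)
    std_err = statistics.stdev(sample) / math.sqrt(len(sample))
    return mean, z * std_err


# Synthetic stand-in for the per-unit CPI of a long-running workload.
# These numbers are made up for illustration; they are not measurements.
rng = random.Random(42)
population_cpi = [max(0.2, rng.gauss(1.5, 0.5)) for _ in range(100_000)]

# Measure in detail only 1 unit out of every 100.
sample = systematic_sample(population_cpi, period=100)
cpi_estimate, half_width = estimate_mean(sample)
true_cpi = statistics.fmean(population_cpi)

print(f"units measured: {len(sample)} of {len(population_cpi)}")
print(f"estimated CPI: {cpi_estimate:.3f} +/- {half_width:.3f}")
print(f"CPI over all units: {true_cpi:.3f}")
```

The point of the sketch is that the number of units to measure depends on the variability of the metric and the error you will tolerate, not on the length of the workload, which is what makes this style of sampling attractive for workloads without clear phases.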

Karu Sankaralingam “When simulations are used, the most important thing that researchers must realize is that simulators are not meant to be used as black boxes. They should at least internally verify their simulators and understand the details of how they work for the scenarios they are considering in their research. Researchers must also be extremely cautious when using these tools for configurations outside the validated point. Furthermore, it is very useful to consider simple first order models as well instead of always looking for cycle-level simulation. When ideas span multiple layers of the system stack, cycle-level simulation becomes less and less important and useful.

Prototyping by building ASIC chips or mapping to an FPGA is another vehicle for research exploration. Prototyping is extremely useful for researchers and students in particular as a learning vehicle and to work in interdisciplinary ways with application developers since they are more likely to use an FPGA model or ASIC than a (slow) simulator. Building a prototype helps flesh out the entire system stack and learn about design constraints and complexity of the implementation that can only be uncovered by doing.”

Michael Taylor “I employ both simulation and prototyping extensively in my research group.

Simulation’s greatest strength is that it gives you incredible agility in exploring design spaces at low cost. The challenge is that, as the adage goes, simulation is doomed to succeed. Since software does not need to obey physics, it’s easy for bugs or assumptions to creep in, especially the larger and more complex the simulation, and the less we spend verifying the code and the assumptions (I spend a lot of time on this, much to the chagrin of my students eager to get the next paper out!). Sardonically, in the Arch 2030 workshop, I gave a basic algorithm for simulation that highlights some of the troubling issues in our community below:

    performance simulation:
        repeat (modify_c_simulator())
        until (perf >= 10%
               || sim_bug_in_my_favor
               || overtrained_on_my_10_benchmarks)
        assert (it_would_really_work_in_hw)

    power simulation:
        assert (we_used_McPat && no_space_to_describe)

Prototyping’s great strength is that it exposes your design to the three iron laws: Physics, Amdahl, and Real World Crap. Prototyping autocorrects those simulator bugs, and often exposes the real challenges in building systems.

Some ideas are particularly sensitive to the laws of physics and make a lot of sense to prototype to just make the research believable; for example, operating at near threshold or protecting from power side-channel attacks. Or, anything that is truly transformative in terms of energy/power. Other ideas, for example, the MIT Raw Tiled architecture and UCSD GreenDroid, result in systems that are very different than prior designs and need to have a reasonable baseline implementation that enables accurate simulators and enough confidence for follow on research to be done.

Especially with the availability of open source processors like Berkeley’s Rocket generator, reusable IP libraries like Basejump STL, ASIC bootstrap infrastructure like Basejump and HLS, it becomes possible to prototype real versions of your system with far less effort and time than ever before. Our recent Celerity SoC tapeout had 5 Linux-capable Rocket cores, a 496-core manycore, a binarized neural network accelerator and a high-speed I/O interface. My team, Chris Batten’s team, and Ron Dreslinski’s team taped this out in TSMC 16nm in 12 months. Why mess with simulators when you can build the real thing? All of the team members learned a ton about designing HW, and we get to explore creative designs far outside of what industry is doing.

I think that the longer a community researches something based only on simulation, the more likely they are to make a wrong turn somewhere and end up researching something based on false hypotheses or optimizing the wrong thing. Think for instance, the presumed dominance of VLIWs like Itanium over x86 OOO processors. More prototyping could potentially have corrected these assumptions earlier and accelerated our research progress as a community.”

David Wentzlaff “Prototyping is incredibly valuable when doing computer architecture research. It can provide insight way beyond what simulation or analytical models can provide. Having said that, I am also a firm believer that you should only prototype research ideas after they have been thoroughly modeled or simulated. Philosophically, I believe that researchers should build prototypes not products. As a researcher, hardware prototypes should push the boundaries of research, test out novel ideas, and be designed in order to scientifically evaluate the ideas (e.g. include extensive power monitoring, extra performance monitoring, extra flexibility, and the ability to disable new features in order to evaluate with and without the new ideas if possible). In particular, I have found building complex processors such as the 25-core Princeton Piton Processor is important when evaluating ideas at scale and at speed. The scale and speed provided by building complex prototypes enables classes of research and insight that are just not feasible at simulation speed such as operating system/hardware co-design, research that involves running real operating systems, real hypervisors, complex programs with full inputs (e.g. Piton runs Doom (video game) and SPECint on full size inputs), and research that requires large numbers of cores working together (scale). Building prototypes also provides much more ground truth fidelity for historically difficult to model parameters such as energy modeling, clock speed, wire delay, and process-level constraints which are becoming increasingly important as feature size shrinks. Prototyping can also be essential when using novel devices, for instance, our recent work on biodegradable and organic semiconductor processors is in a technology that does not even have much intuition or ground truth, necessitating prototyping.”

About the Author

Reetuparna Das is an assistant professor at the University of Michigan. Feel free to contact her at reetudas@umich.edu.

Disclaimer: These posts are written by individual contributors to share their thoughts on the Computer Architecture Today blog for the benefit of the community. Any views or opinions represented in this blog are personal, belong solely to the blog author and do not represent those of ACM SIGARCH or its parent organization, ACM.