As architects, we have heard the drumbeat about the impending end of Moore’s Law for at least a few decades and, in more recent years, about the end of Dennard scaling. It is this latter phenomenon that has had an outsized impact on the power consumption of the processors and systems we build. While the industry continues to produce new process technologies with smaller feature sizes and astonishing ingenuity, power consumption no longer scales down with feature size, and we are seeing systems with increasingly high power envelopes. At the same time, smaller feature sizes have made leakage current an increasingly important problem, so system temperatures must be held lower and lower to prevent excessive leakage.
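Dennard scaling is usually stated with the first-order dynamic power model P = C·V²·f. The sketch below is our own back-of-the-envelope illustration, not something from the Azure data: it shrinks every linear dimension by a factor k and compares power density when supply voltage also scales by 1/k (classic Dennard) against when voltage stays fixed (roughly the post-Dennard situation). It assumes frequency still rises by k and ignores leakage entirely.

```python
def power_density_ratio(k: float, voltage_scales: bool) -> float:
    """New/old power density after shrinking every linear dimension by 1/k.

    First-order dynamic power only (P = C * V^2 * f); leakage is ignored.
      capacitance C -> C / k
      frequency   f -> f * k
      area        A -> A / k**2
      voltage     V -> V / k if voltage_scales (Dennard) else unchanged
    """
    if k <= 0:
        raise ValueError("scale factor must be positive")
    capacitance = 1.0 / k
    frequency = k
    area = 1.0 / k**2
    voltage = 1.0 / k if voltage_scales else 1.0
    power = capacitance * voltage**2 * frequency  # relative to the old node
    return power / area


for k in (1.4, 2.0):
    dennard = power_density_ratio(k, voltage_scales=True)
    post = power_density_ratio(k, voltage_scales=False)
    print(f"k={k}: Dennard {dennard:.2f}x, fixed voltage {post:.2f}x")
```

With k = 2, the Dennard case stays at 1.0x power density while the fixed-voltage case rises to 4.0x. Even if frequency is held constant instead, density still grows by a factor of k, which is the first-order reason power envelopes keep climbing.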

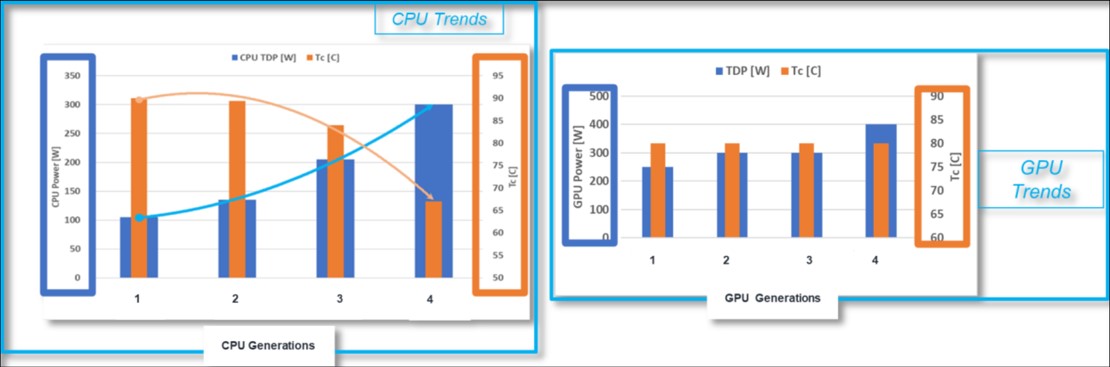

The graph above shows this trend for several previous hardware generations in Azure. Note that TDP (thermal design power) is on a clear superlinear path for CPUs, and GPUs show the beginnings of a superlinear path. Meanwhile, T_c is the maximum rated case temperature of the system, i.e., the maximum temperature the enclosure can reach before problems (like excessive leakage) ensue. These plots show a clearly unsustainable trend: the power consumption of our systems keeps increasing, and yet we must keep them cooler with each passing generation. Spending ever more energy on HVAC, blowing ever colder air to bring ever hotter systems down to ever lower temperatures, is a losing proposition. In a previous blog post, I wrote about the importance of thinking about systems end to end, and this is one of those cases: if an increasing share of the datacenter energy budget goes to cooling rather than computation, we need to rethink cooling technology.
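The end-to-end argument can be made concrete with a toy energy budget. This minimal sketch assumes a fixed facility power budget and a single hypothetical parameter, the watts of cooling spent per watt of IT load; it ignores every other overhead (power conversion, lighting, and so on). The overhead values in the loop are made up for illustration and are not measurements of any real cooling technology.

```python
def it_power_available(facility_budget_w: float, cooling_w_per_it_w: float) -> float:
    """IT (compute) power that a fixed facility budget can support.

    Assumes every watt of IT load needs `cooling_w_per_it_w` extra watts of
    cooling, and ignores all other facility overheads.
    """
    if cooling_w_per_it_w < 0:
        raise ValueError("cooling overhead cannot be negative")
    return facility_budget_w / (1.0 + cooling_w_per_it_w)


budget_w = 10e6  # hypothetical 10 MW facility
for overhead in (0.5, 0.3, 0.05):  # hypothetical cooling watts per IT watt
    it_w = it_power_available(budget_w, overhead)
    print(f"cooling overhead {overhead:.2f} W/W -> {it_w / 1e6:.2f} MW for compute")
```

Every watt that cooling stops consuming becomes a watt available for computation within the same facility envelope, which is the trade-off the rest of this post is about.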

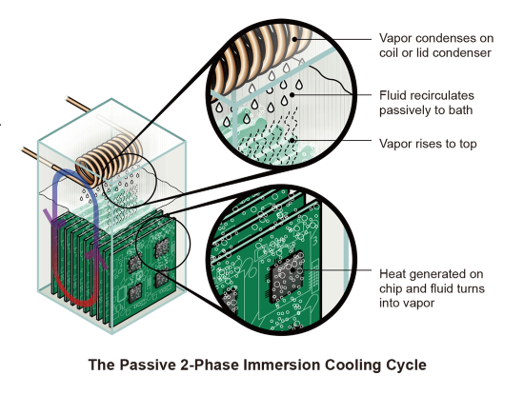

As a result, the industry has been exploring ways to cool datacenters with liquid instead of air. One such technology is liquid cold plate cooling, where components are outfitted with plates and pipes so that cold liquid can be pumped through them to carry heat away. Because this kind of heat transfer is more effective than blowing air, it enables higher power densities while maintaining acceptable component temperatures. And because it is more efficient than air cooling, the liquid does not have to be chilled as far as air does to carry away the same heat.

Even more dramatically effective is 2-phase immersion cooling, in which servers are fully immersed in fluid. Anyone who has watched a toddler drop a phone into a glass of water will have an immediate reaction to the idea of submerging electronics in liquid, but this fluid is most definitely not water: it is non-conductive and non-reactive. Held at its boiling point of 50C, it carries heat away through evaporation (hence the 2-phase moniker). This evaporative heat transfer is 10x more effective than air and, remarkably, can be essentially passive. To put it very simply and qualitatively: to keep case temperatures low, we must spend lots of energy making very cold air to blow heat away, some energy making cool liquid to pull heat away, and almost no energy circulating outside ambient air for evaporative cooling. That leaves a larger portion of the total energy budget for computation instead of cooling.
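Why a more effective coolant can be warmer follows from a first-order steady-state model: P = G·(T_case − T_coolant), where G = h·A is the case-to-coolant thermal conductance. Solving for the coolant temperature gives T_coolant = T_case − P/G. The sketch below reads the post's "10x more effective" figure as a 10x higher conductance, which is our simplifying assumption; the case limit, power, and air conductance are hypothetical numbers chosen only for illustration.

```python
def max_coolant_temp_c(t_case_max_c: float, power_w: float, conductance_w_per_k: float) -> float:
    """Warmest coolant that still keeps the case at or below t_case_max_c.

    First-order steady-state model: P = G * (T_case - T_coolant), where
    G = h * A is the case-to-coolant thermal conductance in W/K, so
    T_coolant = T_case - P / G.
    """
    if conductance_w_per_k <= 0:
        raise ValueError("conductance must be positive")
    return t_case_max_c - power_w / conductance_w_per_k


# Hypothetical numbers for illustration only (not Azure data).
T_CASE_MAX_C = 70.0      # assumed maximum case temperature, C
POWER_W = 300.0          # assumed component power, W
G_AIR = 10.0             # assumed air-path conductance, W/K
G_BOILING = 10 * G_AIR   # the post's "10x more effective", read as 10x conductance

for name, g in (("air", G_AIR), ("2-phase", G_BOILING)):
    limit = max_coolant_temp_c(T_CASE_MAX_C, POWER_W, g)
    print(f"{name}: coolant must be at or below {limit:.1f} C")
```

With these made-up numbers, the air must arrive at 40C or colder, while the boiling fluid could sit as high as 67C, so a fluid pinned at 50C leaves margin. Real figures depend on the heat sink, airflow, and fluid.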

To explain more deeply: you may remember from chemistry class that a liquid at its boiling point does not get any hotter; additional heat goes into evaporation instead, so the temperature stays constant. Thus, the electronics immersed in the liquid are held at an essentially constant and stable temperature. Anyone who has forgotten about a pot of spaghetti knows that a boiling pot eventually boils dry, but that does not happen in this closed system. If we cool the vapor below 50C, it condenses and returns to the pool of liquid. Cooling vapor below 50C is a much simpler proposition than creating very cold air or even cold liquid, because ambient air temperatures nearly everywhere in the world are below 50C most of the time. In other words, a mostly passive system of pipes carrying liquid at outside ambient temperature through the datacenter could be enough to condense the vapor so that it falls back into the tank. Thus, not only is this a closed system in which the liquid is conserved, but it is also essentially passive: the only energy consumed goes to the fans that circulate outside air.
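The closed loop can be sketched as a simple steady-state energy balance. At the boiling point, all heat goes into evaporation, so vapor is produced at a rate of heat load divided by latent heat; the loop conserves fluid whenever the condenser can reject at least that much heat, which is only possible when ambient is below the boiling point. The latent heat, heat load, condenser conductance (`UA`), and ambient trace below are hypothetical values, and the helper names are our own; the model ignores fan energy and assumes fixed pressure.

```python
def boiloff_rate_kg_per_s(heat_load_w: float, latent_heat_j_per_kg: float) -> float:
    """Vapor generated per second once the pool sits at its boiling point.

    At the boiling point all added heat goes into evaporation, so
    mass flow = heat load / latent heat of vaporization.
    """
    return heat_load_w / latent_heat_j_per_kg


def condenser_capacity_w(ua_w_per_k: float, t_boil_c: float, t_ambient_c: float) -> float:
    """Heat the condenser can reject; zero unless ambient is below the boiling point."""
    return max(0.0, ua_w_per_k * (t_boil_c - t_ambient_c))


def hours_loop_closes(heat_load_w: float, ua_w_per_k: float, t_boil_c: float,
                      ambient_trace_c: list[float]) -> int:
    """Count hours in which condensation keeps pace with boiling (no net fluid loss)."""
    return sum(
        1
        for t in ambient_trace_c
        if condenser_capacity_w(ua_w_per_k, t_boil_c, t) >= heat_load_w
    )


# Hypothetical values for illustration only.
T_BOIL_C = 50.0        # boiling point quoted in the post, C
LATENT_HEAT = 100e3    # assumed latent heat of the fluid, J/kg
HEAT_LOAD_W = 100e3    # assumed tank heat load, W
UA = 5e3               # assumed condenser conductance to ambient, W/K
ambient = [18.0, 25.0, 31.0, 38.0]  # made-up hourly ambient temperatures, C

print(boiloff_rate_kg_per_s(HEAT_LOAD_W, LATENT_HEAT), "kg/s boiled and re-condensed")
print(hours_loop_closes(HEAT_LOAD_W, UA, T_BOIL_C, ambient), "of", len(ambient), "hours keep up")
```

The key quantity is the sign of the temperature difference: whenever ambient is below 50C, the condenser can reject heat with no chiller at all, and sizing `UA` determines how close to 50C the ambient can get while still keeping up with the heat load.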

This is a dramatic leap in energy efficiency. It leaves significantly more of the datacenter budget to spend on computation instead of cooling, and it enables much higher power and thermal densities, so less space is needed to support a given amount of compute. In other words, you can pour less concrete to support the same amount of computation.
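The concrete argument is simple arithmetic: for a fixed IT load, the number of racks (or tanks) scales inversely with the power each one can host. This minimal sketch uses a hypothetical 1 MW load, hypothetical per-rack densities, and a fixed 2.5 m² footprint per rack including aisle space; in practice an immersion tank's footprint differs from an air-cooled rack's, so treat the fixed footprint as a simplifying assumption.

```python
import math


def racks_needed(total_it_kw: float, kw_per_rack: float) -> int:
    """Number of racks (or tanks) needed to host a given IT load."""
    if kw_per_rack <= 0:
        raise ValueError("rack power density must be positive")
    return math.ceil(total_it_kw / kw_per_rack)


def floor_area_m2(total_it_kw: float, kw_per_rack: float, m2_per_rack: float) -> float:
    """Floor area for the IT load, assuming a fixed footprint per rack."""
    return racks_needed(total_it_kw, kw_per_rack) * m2_per_rack


# Hypothetical numbers: 1 MW of IT load, 2.5 m^2 per rack including aisle space.
for density_kw in (10.0, 50.0):
    area = floor_area_m2(1000.0, density_kw, 2.5)
    print(f"{density_kw:.0f} kW per rack -> {area:.0f} m^2")
```

Under these assumptions, a 5x increase in power density cuts the floor area for the same compute by 5x.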

What does this really mean for architects? In this paradigm, server components can essentially ignore thermals: as long as the server is immersed in this liquid, the liquid will boil at 50C and heat will be carried away effectively. So many things that have governed our field (power density, thermal runaway, and even the form factor of boards, which are flat, planar, and spaced out enough for air to blow through and carry away heat) can be reconsidered. One example of what becomes possible is overclocking processors hotter than previously considered wise; recent work on this exciting topic can be found here. What can you think of? It’s time to go hog wild!

About the Author: Lisa Hsu is a Principal Engineer at Microsoft in the Azure Core group, working on strategic initiatives for datacenter deployment.

Disclaimer: These posts are written by individual contributors to share their thoughts on the Computer Architecture Today blog for the benefit of the community. Any views or opinions represented in this blog are personal, belong solely to the blog author and do not represent those of ACM SIGARCH or its parent organization, ACM.