Quantum computing (QC) is itself an emerging technology, yet it is still critical to consider new technologies within this paradigm. Current machines, constructed from superconducting transmons or trapped ions, have demonstrated impressive success recently, but it is unclear what the eventual winning technologies will be. Most available systems have struggled to scale beyond their prototypes while simultaneously suppressing gate errors and increasing qubit coherence times. This limits the types of programs that can execute effectively.

One approach to pushing the limits of current devices is to improve the compilation pipeline, both close to the hardware and through high-level circuit optimization. An alternative is to evaluate the viability and trade-offs of new quantum technologies and their associated architectures. We often adapt compilation to the hardware, i.e., transform applications into the right shape for execution; we can instead explore how to design new architectures that are better suited to the target applications.

Evaluating new hardware technology at the architectural level is decidedly different from, though intrinsically coupled to, the development of quantum hardware. The device physicist’s goal is often to demonstrate the existence of high-quality qubits and operations in prototypes, while the architect’s goal is to evaluate the systems-level ramifications of this technology, exploring the inherent trade-off spaces to determine viability in the near and long term. Architectural design-space exploration leads to important insights about what hardware developers most need to optimize. If some limitations can be effectively mitigated in software, hardware developers can focus on other, more fundamental issues. This process of co-design is central to accelerating scalability.

In this blog, we discuss two examples of emerging quantum technologies. The first is the use of local “memory-equipped” superconducting qubits to reduce the hardware required to implement error correction, an example of application-driven hardware design. The second is the use of neutral atoms as a competitive alternative to the technologies on which industry currently focuses, an example of using software to mitigate fundamental hardware limitations to both guide development and accelerate scalability.

Virtualizing Logical Qubits with Local Quantum Memory

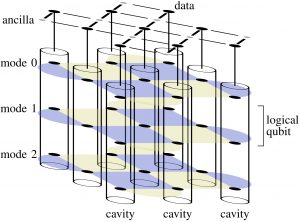

Figure: A fault-tolerant 2.5D architecture with random-access memory local to each transmon storing logical qubits (shown as checkerboards) spread across many cavities to enable efficient computation.

Current quantum architectures tend not to distinguish between memory and processing of quantum information. While this approach is viable, its scalability challenges are clear; for example, superconducting qubits suffer from fabrication inconsistency. To scale to the millions of qubits needed for error correction, a memory-based architecture can be used to decouple qubit count from transmon count.

Recently realized qubit memory technology, which stores qubits in superconducting resonant cavities, may help to realize exactly this memory-based architecture. Resonant cavities also appear in QCI’s commercial quantum machine. Qubit information can be stored in the cavity and, when an operation needs to be performed, transported to the attached transmon. Local memory is not free, however: stored qubits cannot be operated on directly, which prohibits parallel operations on them. The realization of this technology alone isn’t sufficient to understand its viability; dedicated architectural studies are needed.

By virtualizing surface code tiles in a 2.5D memory-based architecture, logical qubits can be stored at unique virtual addresses in memory cavities when not in use and loaded to a physical address in the transmons for computation or error correction, similar to DRAM refresh. The goal is to take logical qubits stored in a plane and find an embedding of that plane in 3D where the third dimension is limited to a finite size. We can slice the plane into patches, one for each logical qubit, and stack them into layers so that the patches in a stack share the same physical address and therefore the same transmons.
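The addressing idea can be illustrated with a toy model. The sketch below is not the authors' implementation; the class `VirtualizedMemory`, its row-major address layout, and its method names are assumptions made for illustration. It treats the device as a grid of transmon patches, each backed by a stack of `depth` cavity modes, and splits a virtual address into a patch (the physical address) and a mode index within that patch's stack.

```python
from dataclasses import dataclass, field


@dataclass
class VirtualizedMemory:
    """Toy 2.5D layout: rows x cols transmon patches, `depth` cavity modes per patch.

    Hypothetical model for illustration only; the address layout is an assumption.
    """
    rows: int
    cols: int
    depth: int
    # Maps a patch (row, col) to the virtual address currently loaded in its transmons.
    loaded: dict = field(default_factory=dict)

    def capacity(self) -> int:
        """Number of logical qubits that fit in memory."""
        return self.rows * self.cols * self.depth

    def physical_address(self, vaddr: int):
        """Split a virtual address into ((row, col), mode)."""
        if not 0 <= vaddr < self.capacity():
            raise ValueError("virtual address out of range")
        patch, mode = divmod(vaddr, self.depth)
        row, col = divmod(patch, self.cols)
        return (row, col), mode

    def shares_transmons(self, a: int, b: int) -> bool:
        """True when two logical qubits live in the same stack."""
        return self.physical_address(a)[0] == self.physical_address(b)[0]

    def load(self, vaddr: int):
        """Load a logical qubit into its patch's transmons.

        Returns the virtual address that had to be stored back to the
        cavity to make room, or None if the patch was free.
        """
        patch, _ = self.physical_address(vaddr)
        evicted = self.loaded.get(patch)
        self.loaded[patch] = vaddr
        return evicted


mem = VirtualizedMemory(rows=2, cols=3, depth=4)
# 6 patches of transmons hold 24 logical qubits in this toy configuration.
print(mem.capacity(), mem.physical_address(5))
```

In this model, only one logical qubit per stack is in the transmons at a time, which is the serialization cost of local memory described above.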

This design requires far fewer physical transmons because many logical qubits are stored in the same physical location. It also allows a fast transversal CNOT, an operation traditionally executed using a sequence of many primitive surface code operations, and requires fewer total qubits to distill the magic states used for universal quantum computation. This translates to reduced execution time and resource requirements, such as a 1.22x speedup for Shor’s algorithm, or the ability to run it on smaller hardware. Despite an increased delay between error detection cycles, the thresholds of the embedded code are comparable to those of a standard surface code implementation.

Error correction protocols are essential for the execution of large-scale quantum programs. The surface code is one such code, designed with currently available architectures in mind. It is a high-threshold code which requires only local operations on a 2D grid. This application is better matched with the 2.5D architecture, which allows qubits to be virtualized and stored in local memory, reducing resource requirements.

Architectural Trade-offs in Emerging Technology – Neutral Atoms

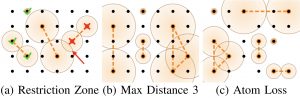

Figure: (a) Interactions of various distances are permitted up to a maximum. Gates can occur in parallel if their zones do not intersect. (b) The maximum interaction distance specifies which physical qubits can interact. (c) Neutral atom systems are prone to sporadic atom loss. Efficient adaptation to this loss reduces computation overhead.

Evaluating the viability of new hardware is essential. Architectural studies which fully explore their unique trade-off spaces are key for finding the best way to accelerate beyond prototypes. One alternative to current devices is neutral atoms. Neutral atoms are the focus of recent quantum startups ColdQuanta and QuEra.

This technology offers distinct advantages which make it an appealing choice for scalable quantum computation. Atoms can interact at long distances, which reduces communication overhead and yields programs with lower gate count and depth. These devices may also be able to execute high-fidelity multiqubit operations natively, without an expensive decomposition. However, a long-range interaction requires a proportionately large region of qubits to be restricted, which reduces parallelism.
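The trade-off between interaction distance and parallelism can be sketched in a few lines. The model below is a simplification and not the authors' compiler: it assumes each gate restricts a circular zone centered on the centroid of its atoms, with radius equal to half the largest distance between them (the `radius_scale=0.5` factor and all function names are assumptions for illustration). Two gates are treated as parallelizable only if they share no atoms and their zones do not overlap.

```python
import math
from itertools import combinations


def span(gate):
    """Largest pairwise distance between the atoms of a gate (0 for one atom)."""
    return max((math.dist(p, q) for p, q in combinations(gate, 2)), default=0.0)


def within_range(gate, max_distance):
    """A gate is executable only if all its atoms are within the maximum distance."""
    return span(gate) <= max_distance


def zone(gate, radius_scale=0.5):
    """Hypothetical circular restriction zone: (center, radius)."""
    xs = [x for x, _ in gate]
    ys = [y for _, y in gate]
    center = (sum(xs) / len(gate), sum(ys) / len(gate))
    return center, radius_scale * span(gate)


def can_run_in_parallel(g1, g2):
    """Gates may overlap in time if they share no atoms and their zones are disjoint."""
    if set(g1) & set(g2):
        return False
    (c1, r1), (c2, r2) = zone(g1), zone(g2)
    # Conservative choice: zones that merely touch are treated as intersecting.
    return math.dist(c1, c2) > r1 + r2


def greedy_layers(gates):
    """Pack mutually independent gates into time steps, first fit."""
    layers = []
    for gate in gates:
        for layer in layers:
            if all(can_run_in_parallel(gate, other) for other in layer):
                layer.append(gate)
                break
        else:
            layers.append([gate])
    return layers


short_a = ((0, 0), (1, 0))
short_b = ((2, 0), (3, 0))
long_c = ((0, 1), (3, 1))   # long-range gate with a large zone
print(len(greedy_layers([short_a, short_b, long_c])))
```

In this example the two short-range gates fit in one time step, while the long-range gate restricts a zone that overlaps both and is pushed to its own step. `greedy_layers` ignores data dependencies between gates, so it only applies to gates that are otherwise free to run together.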

Exploring the use of new hardware also serves a critical role in the development of the platform itself. Neutral atom systems suffer from a potentially crippling drawback: atoms can be lost both during and between program executions. When this happens, measurement of the qubits at the end of the run is incomplete and the output of the run must be discarded. The standard approach to coping with this loss is simply to run the program again after reloading all of the atoms in the array. Programs are typically run thousands of times, so each discarded run incurs a two-fold cost: we have to perform an array reload and we have to execute an additional run. This is especially costly when execution time is limited by the atom reload time, which is significantly longer than the actual program run.
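A back-of-the-envelope model makes the two-fold cost concrete. The sketch below assumes each run independently loses at least one atom with a fixed probability `p_loss`, and that every such run is discarded and followed by a full reload; the function name and the numbers in the usage lines are hypothetical, not measurements. Under that assumption the number of runs needed to collect `shots` good results is geometric, so the expected total is `shots / (1 - p_loss)`.

```python
def expected_baseline_cost(shots, p_loss, t_run, t_reload):
    """Expected cost of the reload-and-rerun baseline.

    shots    -- number of successful runs required
    p_loss   -- assumed probability that a run loses at least one atom
    t_run    -- time for one program run
    t_reload -- time for one full array reload (same unit as t_run)
    """
    if not 0 <= p_loss < 1:
        raise ValueError("p_loss must be in [0, 1)")
    total_runs = shots / (1 - p_loss)      # successful plus discarded runs
    discarded = total_runs - shots         # each one also triggers a reload
    return {
        "runs": total_runs,
        "reloads": discarded,
        "time": total_runs * t_run + discarded * t_reload,
    }


# Hypothetical numbers: reloading takes 100x longer than a single run.
cost = expected_baseline_cost(shots=1000, p_loss=0.2, t_run=1.0, t_reload=100.0)
print(cost)
```

With these illustrative inputs, the reload term dominates the total time, which is why strategies that avoid reloads matter more than strategies that only avoid the extra run.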

Software solutions can effectively mitigate this run time overhead by avoiding expensive recompilation and atom reloads. Instead, they adjust the compiled program by changing the placement of the qubits and adding a small number of extra communication operations. The best strategies directly take advantage of the benefits of neutral atoms. For example, compiling the program to less than the maximum interaction distance leaves flexibility in the final compiled program as atoms are lost over time. The best strategies sustain a large number of lost atoms by minimizing the total number of reloads, keeping compilation time overhead low, and adding only a small number of gates.
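One simple adaptation of this kind can be sketched as follows. This is an illustrative simplification, not one of the strategies evaluated by the authors: when an atom holding a program qubit is lost, the qubit is moved to the nearest spare atom from which all of its gate partners are still within the maximum interaction distance, and a reload is requested only if no such spare exists. The function `adapt_to_loss` and its data layout are assumptions, and the sketch never inserts extra communication operations.

```python
import math


def adapt_to_loss(placement, spares, lost_site, interactions, max_distance):
    """Return an updated placement after an atom loss, or None if a reload is needed.

    placement    -- dict: program qubit -> (x, y) site of its atom
    spares       -- set of loaded sites that hold no program qubit
    lost_site    -- site whose atom was lost
    interactions -- iterable of frozensets {q1, q2} of qubits that share a gate
    max_distance -- maximum interaction distance of the device
    """
    spares = set(spares) - {lost_site}
    victims = [q for q, site in placement.items() if site == lost_site]
    if not victims:
        return dict(placement)          # only a spare was lost; nothing to fix
    qubit = victims[0]
    partners = [b for pair in interactions if qubit in pair for b in pair if b != qubit]

    def feasible(site):
        # Every gate partner must stay within range of the new site.
        return all(math.dist(site, placement[p]) <= max_distance for p in partners)

    candidates = sorted(
        (s for s in spares if feasible(s)),
        key=lambda s: (math.dist(s, lost_site), s),   # nearest first, ties by coordinate
    )
    if not candidates:
        return None                     # fall back to reloading the array
    updated = dict(placement)
    updated[qubit] = candidates[0]
    return updated


placement = {"a": (0, 0), "b": (1, 0)}   # compiled with neighbors at distance 1
spares = {(0, 1), (3, 3)}
gates = {frozenset({"a", "b"})}
print(adapt_to_loss(placement, spares, (0, 0), gates, max_distance=2))
```

The usage lines show the slack described above: the program was placed with interacting qubits one site apart, so on a device that permits interactions at distance 2 a nearby spare can take over without a reload, whereas with `max_distance=1` the same loss would force one.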

Atom loss is a fundamental limitation of neutral atom architectures. Probabilistic loss of atoms is inherent in the trapping process itself, and prior studies have focused on hardware solutions to reduce the probability of loss. However, software solutions can effectively mitigate the problems caused by loss. Demonstrating effective mitigation strategies is critical to the overall development of the platform: by solving fundamental problems at the systems level with software, hardware developers can focus on solving and optimizing other problems. Co-design of quantum systems is key to accelerating the advancement of quantum computing technology.

The Takeaway Message

There is a large gap between currently available quantum computing hardware and the applications we want to run. To bridge this gap, we must use applications to guide the design of the architectures which best implement them. New, memory-equipped transmon technology has a fortuitous match with lattice-surgery-based surface codes, trading some serialization for an efficient error correction implementation. Furthermore, we must evaluate new technology at the systems level as it is developed to determine its viability for long-term scalability, and we must develop software solutions to new technologies’ fundamental limitations to guide hardware developers. For example, software can mitigate the downsides of atom loss in neutral atom architectures, giving hardware developers the space to work on other aspects of the hardware. New technology inspired each of these ideas, but the techniques developed here are general and could be applied to other similar technologies as they emerge.

About the Authors:

Jonathan Baker is a graduating (June 2022) Computer Science Ph.D. student at the University of Chicago, advised by Fred Chong, studying quantum computer architectures. He is interested in a wide array of topics including quantum compilation, logic synthesis, and architectural design for near-term and fault-tolerant quantum computers, among others. Jonathan will be seeking a faculty position in 2021-2022.

Fred Chong is the Seymour Goodman Professor of Computer Architecture at the University of Chicago. He is the Lead Principal Investigator of the EPiQC Project (Enabling Practical-scale Quantum Computation), an NSF Expedition in Computing and a member of the STAQ Project. He is also Chief Scientist at Super.tech and an advisor to QCI.

Many ideas in this blog stem from conversations with the rest of the EPiQC team: Ken Brown, Ike Chuang, Diana Franklin, Danielle Harlow, Aram Harrow, Andrew Houck, Margaret Martonosi, John Reppy, David Schuster, and Peter Shor.

Disclaimer: These posts are written by individual contributors to share their thoughts on the Computer Architecture Today blog for the benefit of the community. Any views or opinions represented in this blog are personal, belong solely to the blog author and do not represent those of ACM SIGARCH or its parent organization, ACM.