Virtual memory was invented in a time of scarcity. Is it still a good idea?

— Chuck Thacker 2010 Turing Lecture

It is time for the computer industry to consider larger minimum page sizes. The ~4KB page size has been around since 1962. With cell phones and even watches having 4GB or more of memory, managing it in a million tiny pieces is excessive. Small pages waste power and cost performance in multiple ways. Decoupling page size from memory protection size is a way forward.

1. Why do we have pages?

We no longer need virtual memory to fake a large physical memory, but virtual memory provides other useful mechanisms: heaps and stacks that can grow, copy-on-write data sharing, memory-mapped files, and per-page access protection. Memory mapping fundamentally does two things: (1) remap high-order address bits and (2) apply access rules. Decoupling these can allow a larger minimum page size while maintaining backward compatibility.

2. Need for change

Small pages have several costs, each of which has grown with time.

TLB miss rate. Even with 256 TLB entries, which together cover only 1MB of 4KB pages, most chips cannot access all their on-chip cache memory without TLB misses.

Memory latency. Each TLB miss can take up to five memory accesses to walk a page-table tree. (For a virtual machine, five squared memory accesses.) Implementations that restrict the hardware to a single walk at a time increase latency on other cores.

Minor page faults. Heap extension, copy-on-write and other actions modify page table entries via bursts of individual minor page faults, each of which can also trigger cross-CPU TLB shootdowns.

L1 cache size. With just 12 low-order unmapped address bits to select within a set-associative cache, each set can be no more than 4KB. So a fast four-way associative cache is no bigger than 16KB. Bigger caches require wider associativity.

Power consumption. Excess associativity in TLB or cache hardware means extra power to read more unused tags and their data.

I/O transfer size. A common design is a set of hardware mapping registers with scattered 4KB physical addresses; 256 such registers limit transfers to 1MB before the OS must reload the map.

Optional huge pages usually do not help with these. A larger minimum page size, and hence more unmapped low-order address bits, reduces all these costs.
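The arithmetic behind three of these costs is simple enough to sketch. The helper names below are illustrative only, and the L1 model assumes a cache indexed purely by unmapped address bits, so that each way can be no larger than one page.

```python
KB = 1024

def tlb_reach(entries: int, page_size: int) -> int:
    """Bytes of memory addressable without taking a TLB miss."""
    return entries * page_size

def max_fast_l1_size(ways: int, page_size: int) -> int:
    """Largest cache indexable with unmapped bits only (assumed model)."""
    return ways * page_size

def max_io_transfer(map_registers: int, page_size: int) -> int:
    """Largest transfer before the OS must reload the mapping registers."""
    return map_registers * page_size

for page_size in (4 * KB, 64 * KB):
    print(
        f"{page_size // KB}KB pages:",
        f"TLB reach {tlb_reach(256, page_size) // KB}KB,",
        f"4-way L1 up to {max_fast_l1_size(4, page_size) // KB}KB,",
        f"I/O transfer up to {max_io_transfer(256, page_size) // KB}KB",
    )
```

With 4KB pages this reproduces the 1MB TLB reach, 16KB four-way cache, and 1MB transfer limit quoted above; with 64KB pages each figure grows by a factor of 16.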

3. Barriers to change

The computer industry is an odd mixture of astounding technological advances and dogged clinging to decades-obsolete designs and historical accidents.

The A20 bit hack to force DOS real-mode boot software addresses to wrap around at 1MB shipped with the IBM PC/AT in 1984, yet is still with us almost 40 years later.

Ten years elapsed between the 1985 Intel 386 32-bit chips and the 1995 Microsoft operating system’s support for 32-bit programs.

It took about ten years to increase the hard-drive sector size from 512 bytes to 4KB, from first discussion to Windows 7 support for aligning the default disk partition on a 4KB boundary.

Operating system services such as mmap() or MmProbeAndLockPages() require page-aligned arguments, with the design assumption that pages are exactly the size of a 4KB disk sector.

A common way to detect runtime stack overflow is to put a no-access 4KB guard page off the end of the allocated stack, and resize the stack if that is accessed.

Linkers change access protection at 4KB boundaries, so memory protection must operate at 4KB granularity.

Legacy software is the tail that wags the dog. Almost none of this legacy code will run correctly if the page size is larger than 4KB.
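As a small illustration of the kind of assumption at stake, here is a minimal sketch of an alignment check written against a hard-coded 4KB constant. `LEGACY_PAGE` and the two helpers are hypothetical names; `mmap.PAGESIZE` is the Python standard-library constant reporting the page size of the running system.

```python
import mmap

LEGACY_PAGE = 4096  # hypothetical hard-coded constant, as found in much legacy code

def is_aligned(addr: int, page_size: int) -> bool:
    """True if addr sits on a page boundary for the given page size."""
    return addr & (page_size - 1) == 0

def align_down(addr: int, page_size: int) -> int:
    """Round addr down to the start of its page."""
    return addr & ~(page_size - 1)

offset = 0x3000  # aligned to 4KB, but not to 64KB

print("aligned for 4KB pages: ", is_aligned(offset, LEGACY_PAGE))
print("aligned for 64KB pages:", is_aligned(offset, 64 * 1024))
print("page size on this system:", mmap.PAGESIZE)
```

An offset that passes the hard-coded check would be rejected by a system whose real page size is 64KB, which is why code of this shape would need either auditing or hardware that preserves 4KB behavior.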

The FUD argument (fear, uncertainty, and doubt) strongly says not to change the page size. Ever. Yet the cumulative inefficiencies will eventually force a change. We should plan for it.

4. A possible way forward

How can we make the minimum page size larger without invalidating 50 years of accumulated software? The basic idea is to map addresses in larger chunks, while preserving 4KB access protection for backward compatibility.

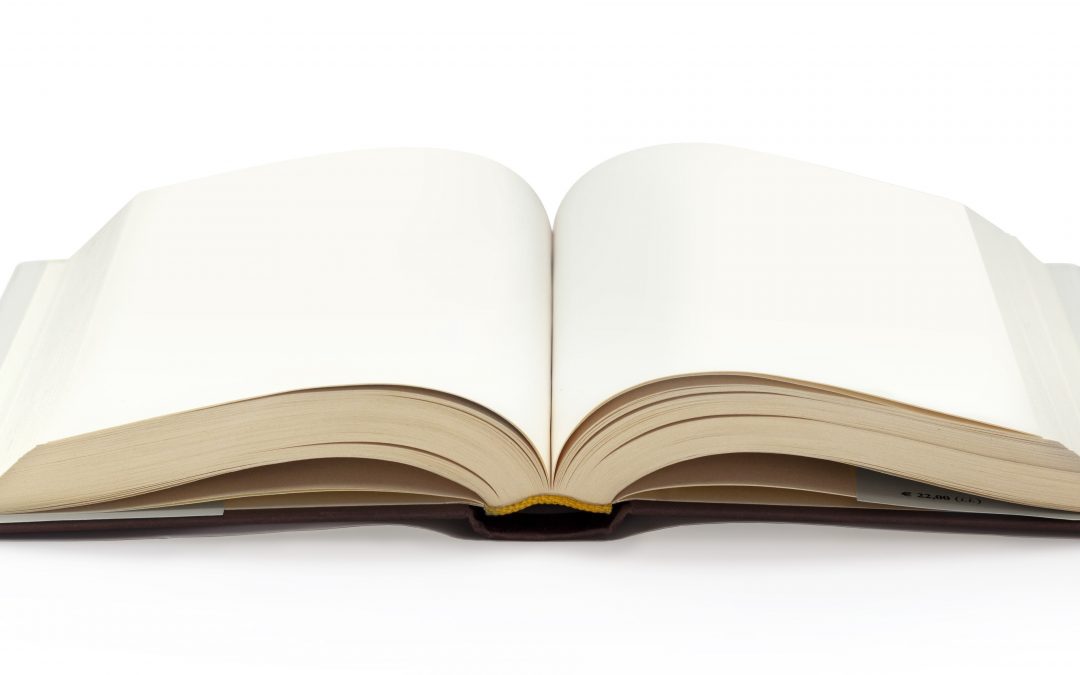

Figure 1 shows an example change from 4KB pages on the left to 64KB pages on the right, giving 16 unmapped bits that can be used immediately to address an L1 cache set.

In our enhanced design, each TLB entry maps one large page, consisting of 4KB subpages. The address mapping applies to the full page, thus giving more than 12 low-order unmapped address bits, but the memory protection field applies only to an aligned power-of-two group of subpages. All other subpages are no-access.

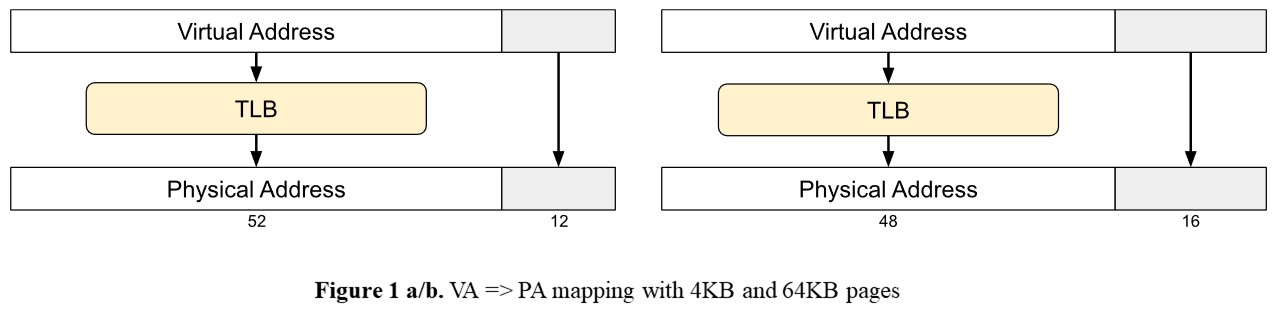

Normally, a TLB entry would have a VA tag, a corresponding PA, and some memory protection bits. For 64KB pages, the tag and the PA would both be 48 bits and the protection bits apply to the entire page, Figure 2a.

Our enhanced design TLB entry has a VA tag of 52 bits (i.e. 4KB address resolution) and an extra size field specifying 1, 2, 4, 8, or 16 subpages. The size field is mapped to a four-bit hardware mask at TLB load time: 1111, 1110, 1100, 1000, and 0000 respectively. This mask is ANDed with the low four bits of the 52 bits of VA during the tag match, as shown in Figure 2b. The protection bits apply to the specified subpages.

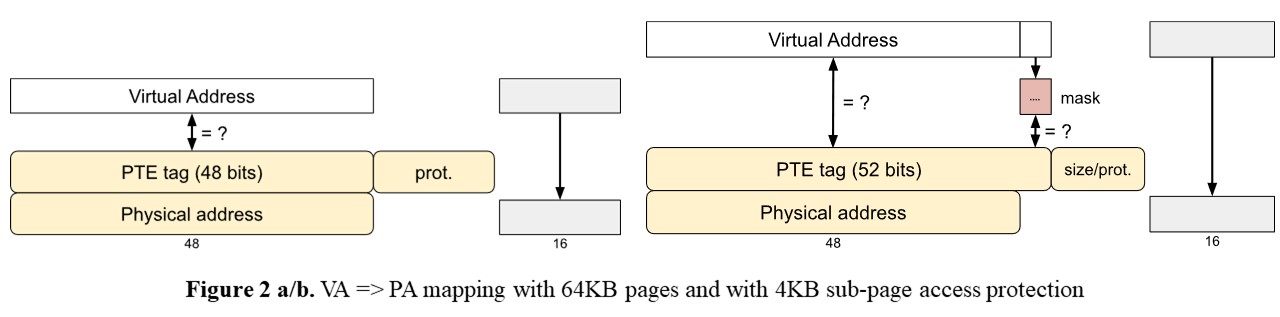

At the cost of a four-bit mask and four AND gates, the effect is that for size=1, the full 4KB VA<63:12> must match exactly — the other 15 subpages do not match and hence cannot be accessed via that TLB entry. At the other extreme of size=16, bits VA<15:12> are ANDed against 0000, and the low four bits of the tag are also 0000, so any address in the full page matches the tag. Intermediate size values map aligned groups of 2, 4, or 8 subpages, Figure 3. The blank subpages do not match the tag so are no-access via that TLB entry.

Various TLB entries can map different VAs to the same full-page PA but to disjoint subpages, allowing multiple unrelated 4KB subpages to be packed into a single physical page — there is no page fragmentation issue.
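The masked tag match can be modeled in a few lines. This is only a software sketch of the behavior described above for 64KB pages with 16 subpages; the class, field, and function names are invented for illustration and do not describe any real hardware interface.

```python
from dataclasses import dataclass
from typing import Optional

SUBPAGE_BITS = 12  # 4KB subpages
PAGE_BITS = 16     # 64KB pages

def size_to_mask(size: int) -> int:
    """Map 1, 2, 4, 8, 16 subpages to masks 1111, 1110, 1100, 1000, 0000."""
    if size not in (1, 2, 4, 8, 16):
        raise ValueError("size must be 1, 2, 4, 8, or 16 subpages")
    return ~(size - 1) & 0xF

def masked_tag(va: int, mask: int) -> int:
    """VA<63:12> with its low four bits ANDed with the mask."""
    tag = va >> SUBPAGE_BITS
    return (tag & ~0xF) | (tag & mask)

@dataclass
class TlbEntry:
    va_tag: int   # 52-bit tag, stored already masked
    pa_page: int  # PA<63:16> of the 64KB physical page
    mask: int     # four-bit mask derived from the size field
    prot: str     # protection for the mapped subpages

def make_entry(va: int, pa_page: int, size: int, prot: str) -> TlbEntry:
    mask = size_to_mask(size)
    return TlbEntry(masked_tag(va, mask), pa_page, mask, prot)

def translate(entry: TlbEntry, va: int) -> Optional[int]:
    """Return the PA on a tag match, or None if this entry does not map va."""
    if masked_tag(va, entry.mask) != entry.va_tag:
        return None
    return (entry.pa_page << PAGE_BITS) | (va & ((1 << PAGE_BITS) - 1))

# Two unrelated VAs packed into disjoint subpages of one physical page.
a = make_entry(0x7000_0000, pa_page=0x1234, size=1, prot="rw")  # subpage 0
b = make_entry(0x9000_4000, pa_page=0x1234, size=4, prot="r")   # subpages 4-7

print(hex(translate(a, 0x7000_0123)))  # inside subpage 0
print(translate(a, 0x7000_1000))       # subpage 1: no match
print(hex(translate(b, 0x9000_5678)))  # inside subpages 4-7
print(translate(b, 0x9000_8000))       # subpage 8: no match
```

Both entries point at the same 64KB physical page, yet each grants access only to its own aligned group of subpages, so the remaining subpages stay no-access through these entries.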

5. Page Table Tree walk

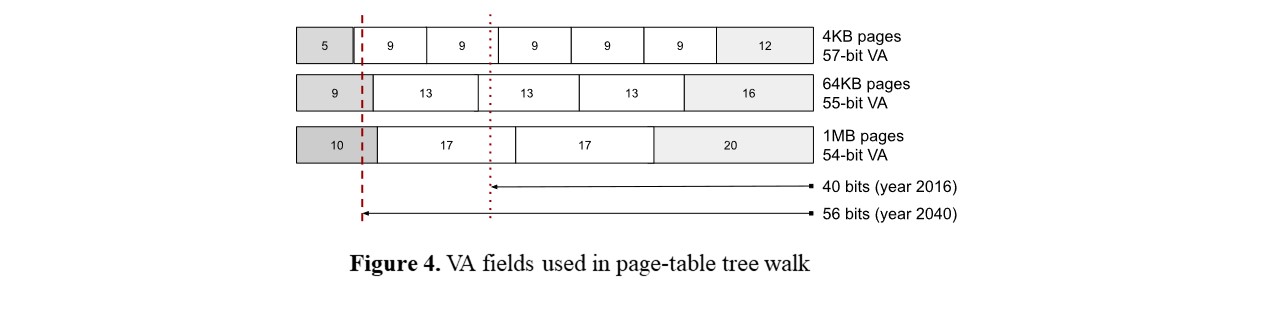

With 4KB pages, each TLB miss can take five memory accesses to walk a page-table tree, using five 9-bit VA subfields to index within a page at each level, Figure 4 top. Only three accesses are needed with 64KB pages, improving worst-case latency; only two with 1MB pages (256 subpages).
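A rough check of these level counts, assuming 8-byte page-table entries (an assumption on my part, though it is consistent with 9-bit index fields selecting among 512 entries in a 4KB page). The exact field split in Figure 4 may differ; the sketch only shows how many VA bits the stated number of levels would cover.

```python
PTE_BYTES = 8  # assumed entry size; 4KB / 8B = 512 entries = 9 index bits

def log2_exact(n: int) -> int:
    """Base-2 log of a power of two."""
    if n <= 0 or n & (n - 1):
        raise ValueError("expected a power of two")
    return n.bit_length() - 1

def index_bits(page_size: int) -> int:
    """VA bits consumed per tree level when each table fills one page."""
    return log2_exact(page_size // PTE_BYTES)

def va_bits_covered(page_size: int, levels: int) -> int:
    """Total VA bits translated by `levels` lookups plus the page offset."""
    return levels * index_bits(page_size) + log2_exact(page_size)

for name, page_size, levels in (("4KB", 4 << 10, 5), ("64KB", 64 << 10, 3), ("1MB", 1 << 20, 2)):
    print(f"{name} pages: {index_bits(page_size)} bits per level, "
          f"{levels} levels cover {va_bits_covered(page_size, levels)} VA bits")
```

Under this assumption, five levels of 4KB pages cover 57 VA bits, three levels of 64KB pages cover 55, and two levels of 1MB pages cover 54, so the shorter walks reach roughly the same address space.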

The enhanced design page-tree words have the normal PA of the next level and also a size field as above. Use of the size field means that sparse page-tree pages need only occupy 4KB subpages of physical memory, with all the entries that would be on other subpages treated as no-access.

6. Varying Subpage Protection and Mapping

Normally, the last level of a tree accesses a PTE that is loaded into the TLB. To allow varying subpage protection for backwards compatibility, the size field in this word can have an extra value meaning “one more level” with a PA that points to a short array of final PTEs, indexed by the subpage bits of the VA, e.g. 16 subpage PTEs for 64KB pages. These PTEs can vary the final subpage PAs in addition to varying their protection, giving the same mapping flexibility as today’s 4KB pages except that VA<15:12> will always equal PA<15:12>.
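One way to picture the extra level is the sketch below, for 64KB pages. The encoding is hypothetical: a `SubpageTable` object stands in for the "one more level" size value, and each final PTE is modeled as a 64KB frame number plus a protection string.

```python
from dataclasses import dataclass
from typing import List, Tuple, Union

SUBPAGE_BITS = 12  # 4KB subpages
PAGE_BITS = 16     # 64KB pages

@dataclass
class Pte:
    pa_page: int  # PA<63:16> of a 64KB physical page
    prot: str     # e.g. "r", "rw", or "" for no-access

@dataclass
class SubpageTable:
    """Stand-in for the 'one more level' case: 16 final PTEs."""
    ptes: List[Pte]

def resolve(last_level: Union[Pte, SubpageTable], va: int) -> Tuple[int, str]:
    """Return (PA, protection) for va from the last tree level."""
    if isinstance(last_level, SubpageTable):
        subpage = (va >> SUBPAGE_BITS) & 0xF  # VA<15:12> indexes the array
        pte = last_level.ptes[subpage]
    else:
        pte = last_level
    # The low 16 bits are unmapped, so PA<15:12> always equals VA<15:12>.
    pa = (pte.pa_page << PAGE_BITS) | (va & ((1 << PAGE_BITS) - 1))
    return pa, pte.prot

# Each subpage gets its own physical page and protection.
table = SubpageTable([Pte(0x100 + i, "rw" if i % 2 == 0 else "r") for i in range(16)])
pa, prot = resolve(table, 0x4_3ABC)
print(hex(pa), prot)
```

In this model the subpage PTEs choose different 64KB physical pages and different protections, while the subpage's position within its page is fixed by the VA, which is the one restriction noted above.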

7. Need to start soon

On past evidence, it will take ten years for the industry to change the minimum page size. The costs of too-small pages are apparent now and will get progressively worse each year. We need to start changing soon.

About the Author: Richard L. Sites has been interested in computer architecture since 1965. He is perhaps best known as co-architect of the DEC Alpha, and for software tracing work at DEC, Adobe, and Google.

Disclaimer: These posts are written by individual contributors to share their thoughts on the Computer Architecture Today blog for the benefit of the community. Any views or opinions represented in this blog are personal, belong solely to the blog author and do not represent those of ACM SIGARCH or its parent organization, ACM.