In mid-July of this year, Synchron, a brain-computer interface (BCI) startup, announced that it had partnered with Mount Sinai West in New York to permanently implant a stent-like BCI in the motor cortex of a paralyzed patient and read neurological signals to capture their intended movement. Implantation was performed without open brain surgery; instead, the BCI was inserted through the patient’s jugular vein. The surgery took two hours and the patient was able to return home within forty-eight hours. Careful monitoring and assessment of the patient will be necessary over the upcoming months to understand the longer-term medical implications of this procedure and gauge its success, but this may present yet another step towards wider implantation of BCIs in humans.

BCIs have held great promise since 1964, when Grey Walter surgically implanted electrodes in the motor cortex of a patient’s brain and used brain signals to directly control a slide projector. In general, the 1960s represented a watershed era in the design of highly influential mechanisms for computer I/O. The first generation of Douglas Engelbart’s computer mice was developed around the same time, as was Ivan Sutherland’s Sketchpad, which made it possible for “man and computer to converse rapidly through the medium of line drawings”. These I/O devices revolutionized how we think about graphical user interfaces and human-computer interfaces; the difference between computer programming and usage; the difference between design and implementation; as well as abstractions, modularity, encapsulation, and the principles of object-oriented programming. Longer term, BCIs promise to play a similarly seismic role in how humans may augment (or maybe even bypass) computer mice, keyboards, etc., to directly use, control, and perhaps even program computer systems.

However, achieving this long-term vision requires more immediate short-term progress. In the upcoming years, most efforts on BCI development will be centered around therapeutic and research uses. These development efforts are currently the subject of an important debate within the neuroscience community – should clinical and research BCIs differ in design, or is a common platform preferable? The remainder of this article explains the origin of this debate and how computer architects can help shape it.

Therapy versus research: There are many clinical uses for BCIs. At Yale, we collaborate with scientists and clinicians who are implanting FDA-approved BCIs to treat epilepsy. These BCIs read biological neuronal activity, detect and anticipate the arrival of seizures, predict their spread, and electrically stimulate areas of the brain to mitigate symptoms. Implanted brain “pace-makers” that electrically stimulate the brain, also referred to as deep-brain stimulators, are used by over 200K patients worldwide to treat Parkinson’s disease.

These successes have prompted pilot studies of more experimental therapies in the last few years. In two studies that are of particular interest to this author, implantable BCIs promise to offer assistance to patients with depression and addiction, even when pharmacological intervention has failed. For example, clinicians at the University of California, San Francisco recently implanted a BCI in a patient to detect the onset of severe depression and electrically stimulate the brain to reduce suicidal impulses. The results – albeit on only one individual – were striking, with the patient’s score on a standard depression scale signaling remission. As another example, neurosurgeons at the Rockefeller Neuroscience Institute at West Virginia University were able to show that deep brain stimulation delivered by an implantable device dramatically reduced the cravings experienced by a patient with crippling opioid addiction.

These (and other) studies have begun shifting developers’ focus to clinical uses, away from what BCIs were originally designed for — supporting exploratory science to help understand neural circuits, network dynamics among brain regions, algorithms for electrical stimulation of biological neurons, the way in which neural circuits interact to give rise to cognition, and more.

Regardless of the use case, implantable BCIs cannot dissipate more than tens of milliwatts of power, as heating the surrounding tissue by more than 1℃ risks damaging cells. At the same time, BCIs offer superior algorithmic capability when they can read and process the activity of as many neurons from as many brain regions as possible. Unfortunately, power scales super-linearly with brain-computer interaction bandwidth. Consider, for example, that DARPA’s goal is to read as many as a million neurons. Assuming high-fidelity recordings (e.g., 30 kHz sampling rates and 10 bits per sample), a million neurons would produce raw data at roughly 300 Gbps. Most modern commercial BCIs under 15 mW achieve only Kbps, while our recent work on HALO offers 46 Mbps [ISCA ‘20 and Top Picks ‘21, with 12nm tape-out details to be presented at Hot Chips ‘22].
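The arithmetic behind these bandwidth figures is easy to check. The sketch below assumes every neuron is sampled independently and that nothing is compressed; the function names are illustrative and do not come from any BCI toolkit.

```python
def raw_data_rate_bps(neurons: int, sample_rate_hz: int, bits_per_sample: int) -> int:
    """Uncompressed data rate, in bits per second, for a set of recorded neurons."""
    return neurons * sample_rate_hz * bits_per_sample


def channels_within_budget(budget_bps: int, sample_rate_hz: int, bits_per_sample: int) -> int:
    """Number of full-fidelity channels that fit within a bandwidth budget."""
    return budget_bps // (sample_rate_hz * bits_per_sample)


if __name__ == "__main__":
    # One million neurons at 30 kHz and 10 bits per sample.
    target = raw_data_rate_bps(1_000_000, 30_000, 10)
    print(f"One million neurons: {target / 1e9:.0f} Gbps")

    # How many such channels a 46 Mbps budget would cover.
    print(f"Channels at 46 Mbps: {channels_within_budget(46_000_000, 30_000, 10)}")
```

Under these assumptions, the gap between a million-neuron target and a budget of tens of Mbps is more than three orders of magnitude, which is why the power question dominates the design discussion.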

The power-efficiency debate: To solve this power crisis, one school of thought advocates separating BCI design for clinical and research uses. The idea is that clinical methods need relatively coarse-grained neuronal information like spike rates, so sampling rates and fidelity can be reduced substantially, considerably lowering power dissipation. For research purposes, where capturing more fine-grained shapes of the spikes is warranted, we can use different BCIs that capture high-fidelity data from a (much) smaller number of neurons. The studies that have argued for this approach have generally focused on data collected from the motor cortex region of the brain.
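To get a feel for how much coarsening can save, the sketch below compares streaming a raw waveform with streaming binned spike counts for a single neuron. The 10 ms bin width and 8-bit counter are assumptions chosen for illustration, not parameters taken from the studies mentioned above.

```python
def waveform_rate_bps(sample_rate_hz: int, bits_per_sample: int) -> int:
    """Bits per second needed to stream one neuron's raw waveform."""
    return sample_rate_hz * bits_per_sample


def spike_count_rate_bps(bin_width_ms: int, bits_per_count: int) -> int:
    """Bits per second needed to stream one neuron's binned spike counts.

    Assumes bin_width_ms divides 1000 evenly.
    """
    bins_per_second = 1000 // bin_width_ms
    return bins_per_second * bits_per_count


if __name__ == "__main__":
    fine = waveform_rate_bps(30_000, 10)       # full spike shapes
    coarse = spike_count_rate_bps(10, 8)       # hypothetical 10 ms bins, 8-bit counts
    print(f"waveform: {fine} bps, spike counts: {coarse} bps, ratio: {fine // coarse}x")
```

With these illustrative numbers, the coarse representation needs a few hundred times less bandwidth per neuron, which is the kind of saving the first school of thought relies on.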

The second school of thought is that clinical and research BCIs cannot be divided as cleanly as the first school of thought suggests. Studies in this camp suggest that even for those clinical deployments that are better understood (e.g., epilepsy or Parkinson’s disease), the finer-grained shapes of spikes may carry information that is important for the personalization of disease treatments. This precludes coarsening signal fidelity.

Thus far, this debate has been addressed mainly by neuroscientists, sometimes in collaboration with analog and digital VLSI designers. While these experts will undoubtedly play a crucial role, there is also a critical need for computer architects to weigh in. Many innovations in computer architecture can help influence this debate:

Accelerator-level parallelism: The stringent power-efficiency needs of BCIs make accelerators a necessity. At the same time, BCIs need to be flexible enough to personalize algorithms and to support an ever-expanding array of algorithms and computational methods from emerging neuroscientific studies. In our HALO work, we have studied accelerator-level parallelism for BCIs by building an array of mini-ASICs that can be configured into various pipelines using a programmable network fabric. There exists significant scope to improve upon this work; in particular, emerging BCIs for implantation in the spinal cord and retina require many new classes of signal processing support that go beyond what HALO offers. An expanded set of accelerators may support a wide enough range of algorithms and applications that the need to bifurcate clinical and research designs is mitigated for now.

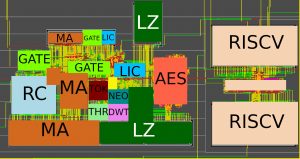

A diagram of the HALO chip tape-out in a 12nm process (joint work by this author’s and Rajit Manohar’s research groups, with details to be presented at Hot Chips ‘22). Several mini-ASICs (shown in colored tiles placed on the left-hand side of the chip) can be configured to realize low-power processing pipelines. Two low-power RISC-V micro-controllers support other miscellaneous algorithms.
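The idea of configuring small fixed-function blocks into different pipelines can be illustrated in software. The sketch below is a loose analogy only: the stage names, the registry, and `build_pipeline` are hypothetical and are not HALO's actual processing elements or configuration interface.

```python
from typing import Callable, Dict, List, Sequence

Samples = List[float]
Stage = Callable[[Samples], Samples]


def remove_mean(samples: Samples) -> Samples:
    """Subtract the mean so the signal is centered on zero."""
    if not samples:
        return []
    mean = sum(samples) / len(samples)
    return [s - mean for s in samples]


def rectify(samples: Samples) -> Samples:
    """Take the absolute value of every sample."""
    return [abs(s) for s in samples]


def threshold(samples: Samples, level: float = 1.0) -> Samples:
    """Emit 1.0 where a sample exceeds the level, and 0.0 elsewhere."""
    return [1.0 if s > level else 0.0 for s in samples]


# Hypothetical registry standing in for the set of available fixed-function blocks.
STAGES: Dict[str, Stage] = {
    "remove_mean": remove_mean,
    "rectify": rectify,
    "threshold": threshold,
}


def build_pipeline(stage_names: Sequence[str]) -> Stage:
    """Chain the named stages in order; unknown names raise KeyError."""
    stages = [STAGES[name] for name in stage_names]

    def run(samples: Samples) -> Samples:
        for stage in stages:
            samples = stage(samples)
        return samples

    return run


if __name__ == "__main__":
    detect = build_pipeline(["remove_mean", "rectify", "threshold"])
    print(detect([0.0, 0.0, 4.0, 0.0]))
```

The point of the analogy is that the stages stay fixed while the configuration (here, a list of names) selects which ones run and in what order, so one platform can serve several algorithms.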

Fusing machine learning and signal processing accelerators: Machine learning techniques are becoming more commonly used in BCIs. Recent work on uBrain [ISCA ’22] presented initial studies of inference accelerators for BCIs. While this is an important step forward, there are opportunities to improve such accelerators further. In particular, the BCI space requires a mix of signal processing and machine learning approaches. An example is seizure prediction and detection, where both are needed to robustly predict the onset and spread of seizures. Building common hardware platforms that can support both classes of applications remains an open area of research in computer architecture.

Exploring asynchronous & neuromorphic techniques: Our HALO chips – where each of our mini-ASICs operates in its own clock domain – revisit globally asynchronous locally synchronous designs. Even more aggressive forms of asynchronous design may be particularly beneficial for the remaining power-inefficient parts of BCIs, such as general-purpose micro-controllers. Similarly, recent progress on neuromorphic hardware, including approaches like race logic and space-time computing, may present intriguing opportunities to implement ultra-low power approaches for spike sorting, clustering, or principal component analysis pipelines on BCIs. Such approaches may mean that research and clinical BCIs need to be separated only when the desired brain-computer communication bandwidth is in the hundreds of Mbps, rather than the hundreds of Kbps.

Fine-grained power management: BCIs offer interesting constraints and environmental quirks that can enable new studies on idle and active low-power modes as well as policies to manage them using heuristics, control theory, machine learning, and hybrid solutions. Consider, for example, that BCIs provoke an immune response in the brain, often resulting in the formation of new blood vessels around the physical BCI. It may be possible to use the blood flowing through these vessels to offer some natural heat removal. Perhaps there may be opportunities to revisit ideas like computational sprinting in view of these types of environmental aspects of BCI deployment.

Power-efficient radios: Although computer architects have historically focused mostly on processors, memory, and storage, the wireless radios on board BCIs present a major roadblock to better power efficiency. There may exist significant scope to leverage the ideas discussed thus far – accelerators, asynchronous design, neuromorphic computing, and more – not just for compute, but to also build more power-efficient radios. In general, co-designing compute with radios that can trade off transmission distance with energy efficiency remains relatively unexplored and could be a rich area for exploration.

About the author. Abhishek Bhattacharjee is an Associate Professor of Computer Science at Yale University and a member of the Neurocomputation and Machine Intelligence Center within Yale’s Wu Tsai Institute. His research interests span computer architecture and systems software, with a focus on address translation and the virtual memory abstraction, and more recently, on brain-computer interfaces.

Disclaimer: These posts are written by individual contributors to share their thoughts on the Computer Architecture Today blog for the benefit of the community. Any views or opinions represented in this blog are personal, belong solely to the blog author and do not represent those of ACM SIGARCH or its parent organization, ACM.