Non-volatile memory technologies have a rich past dating back to the 1960s. Fairchild R&D Lab member Chih-tang Sah first noted in 1961 the ability of electric charge to remain on the surface of an electrical device for up to several days. By the 1970s the first production non-volatile memory (NVM) technologies had emerged, and shortly after, Dov Frohman conceived of what eventually would become EPROM. And the rest is history!

Sort of.

Fast forward to 2022. If you are reading this blog post, you are touching—albeit indirectly—non-volatile memory. From mobile smartphones to desktop computers, we rely on NVMs as our last line of defense against losing critical data, including things that are irretrievable (yikes!). To put it technically, NVMs allow computer state to be preserved even when power is shut off.

Interestingly, the same richness of non-volatile memory research that emerged in the 1960s has “persisted”: from industry production lines to academic research labs, different flavors and implementations of NVMs continue to emerge and to attract investment and interest. Phase Change Random Access Memory (PCRAM); Magnetic RAM (MRAM), including Spin-Transfer Torque RAM (STT-RAM) and Spin-Orbit Torque RAM (SOT-RAM); Resistive RAM (RRAM); Charge Trap Transistors (CTT); and ferroelectric memories, both Ferroelectric FET (FeFET) and Ferroelectric RAM (FeRAM), are all examples at the cutting edge of NVM research. While these technologies are still valuable for their persistence, including in intermittent computing settings, they also exhibit other compelling properties, like a smaller area footprint or lower energy than common commercial memory products (e.g., SRAM and DRAM). All these properties make NVMs attractive for addressing a wide range of computer architecture bottlenecks in a range of system settings.

The “Memory Wall”

“The most ‘convenient’ resolution to the problem would be the discovery of a cool, dense memory technology whose speed scales with that of processors.”

– Wulf and McKee in “Hitting the Memory Wall: Implications of the Obvious”

In their 1994 paper, Wm. Wulf and Sally McKee introduced a computer architecture bottleneck that has since been known as the “Memory Wall.” The “Memory Wall” refers to the widening gap between the performance trend of the processor and that of the memory. From 1985 to 2010, processor performance increased by over three orders of magnitude, while memory performance increased by less than a single order of magnitude. This is concerning because it limits how much of the massive gain in processor performance carries over to overall system performance.
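To make the size of that gap concrete, the two trends above can be turned into rough annual growth rates. The following is a back-of-the-envelope sketch, not a measurement: it assumes steady compound growth over the 25 years, treats "over three orders of magnitude" as exactly 1000x and "less than a single order of magnitude" as an upper bound of 10x, and the function name `annual_growth` is ours.

```python
def annual_growth(total_factor: float, years: int) -> float:
    """Compound annual growth factor that yields `total_factor` after `years`."""
    return total_factor ** (1.0 / years)


YEARS = 2010 - 1985  # the 25-year window cited above

# Assumption: 1000x for processors (a lower bound per the text),
# 10x for memory (an upper bound per the text).
cpu_growth = annual_growth(1e3, YEARS)
mem_growth = annual_growth(1e1, YEARS)
gap = 1e3 / 1e1  # how far the processor pulled ahead over the window

print(f"processor: about {(cpu_growth - 1) * 100:.0f}% per year")
print(f"memory:    at most about {(mem_growth - 1) * 100:.0f}% per year")
print(f"gap after {YEARS} years: at least {gap:.0f}x")
```

Under these assumptions, processors improved by roughly a third every year while memory improved by about a tenth at best, which compounds into a gap of at least two orders of magnitude.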

Wulf and McKee go on to suggest in their paper that this issue is primarily a result of memory technology: “The most ‘convenient’ resolution to the [memory wall] problem would be the discovery of a cool, dense memory technology whose speed scales with that of processors.” In 1994, this may have been a difficult pill to swallow. Now, however, computer architecture researchers have a wide range of novel memory technologies to choose from, which in many cases offer properties comparable to, or more favorable than, those of their conventional cousins, SRAM and DRAM.

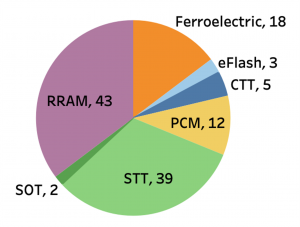

Now the question is: which one do we choose? Well, there’s good news and there’s bad news in making such a decision. The good news is, just from 2016 to 2020, there have been over 120 NVM-related publications in the VLSI literature (ISSCC, VLSI, and IEDM proceedings). The bad news is, well, the same. Clearly materials and device engineers have delivered in terms of memory technology options, but now architects have become inundated with an overly complex and expanding design space of memory technologies. To make matters worse (better?), each technology has its own unique properties, leading to disparate benefits and design trade-offs per technology and per application. For example, RRAM has potential for extremely high density but poor endurance (memory lifetime), while STT-RAM exhibits both competitive read performance and acceptable endurance at lower density.

NVM-related publications in ISSCC, VLSI, and IEDM from 2016-2020

NVMExplorer

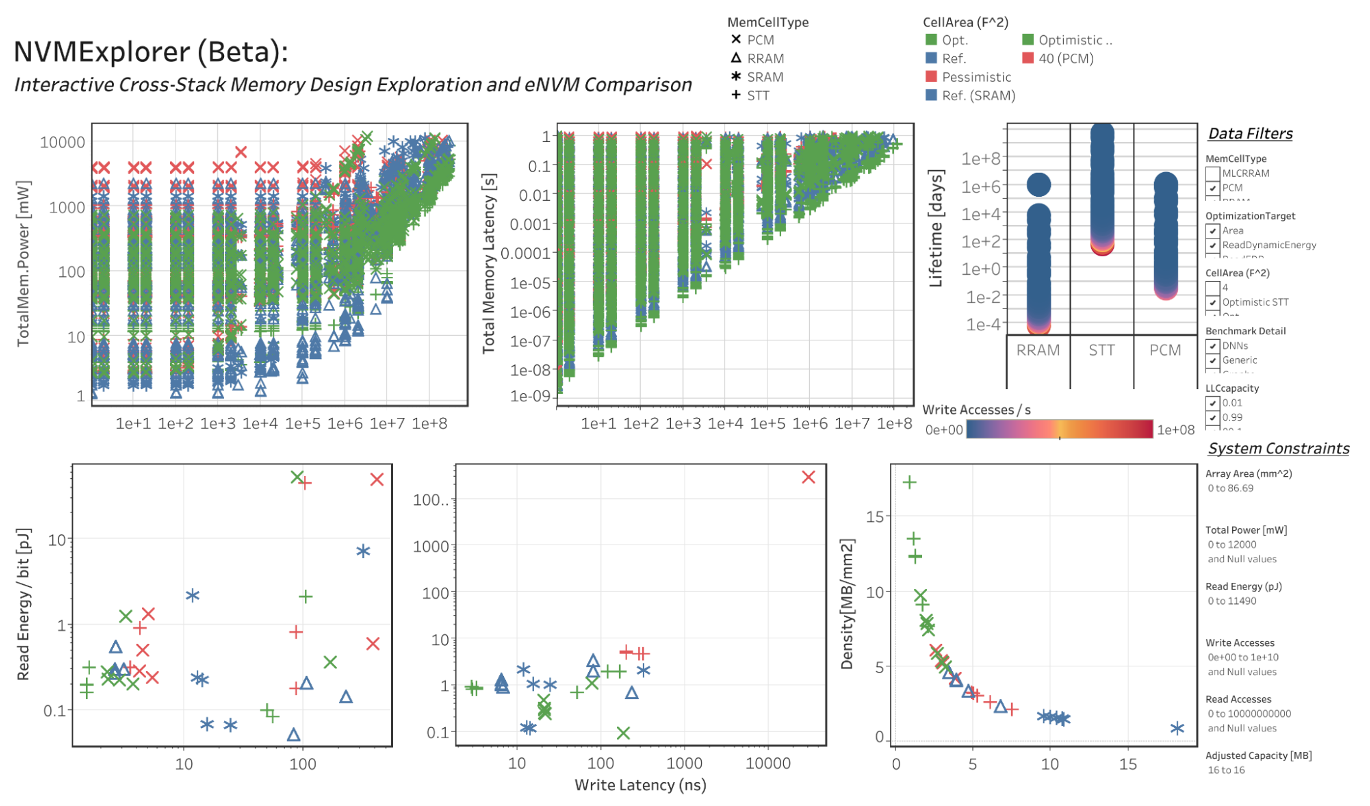

To address the design space problem of NVMs, our team of researchers at Harvard University and Tufts University developed open-source software and an interactive web tool for conducting design space exploration of NVMs, called NVMExplorer (published at HPCA ’22). In the spirit of co-design, we take a cross-stack approach that enables users to compare the application-level impacts of different NVM technologies under different system architectures.

In our HPCA ’22 paper, we detail three particularly compelling case studies using NVMExplorer: a deep neural network (DNN) inference edge processor, a graph processing accelerator, and a general-purpose client CPU. For each case study, NVMExplorer is customized to model the setting by accepting three kinds of input: workload data for the application space (e.g., Wikipedia and Facebook graph search workloads for the graph processing accelerator, and SPEC2017 workloads for the client CPU), system properties and constraints (e.g., memory capacity, word width, banking/muxing optimizations), and memory technology characterization (e.g., STT-RAM, PCM, 4 bit-per-cell FeFET).
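The three kinds of input described above (workload, system, technology) can be pictured as a small set of records whose cross product defines a sweep. The sketch below is purely illustrative and is not NVMExplorer's actual configuration format or API: the class names, field names, and the `build_sweep` helper are hypothetical, and the traffic numbers are placeholders rather than measured workload data.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Workload:
    name: str
    reads_per_s: float
    writes_per_s: float


@dataclass(frozen=True)
class SystemConfig:
    capacity_mb: int
    word_width_bits: int


@dataclass(frozen=True)
class Experiment:
    technology: str
    system: SystemConfig
    workload: Workload


def build_sweep(technologies, systems, workloads):
    """Enumerate every (technology, system, workload) combination."""
    return [Experiment(t, s, w) for t, s, w in product(technologies, systems, workloads)]


# Technology names come from the text; capacities and traffic are placeholders.
technologies = ["STT-RAM", "PCM", "FeFET"]
systems = [SystemConfig(capacity_mb=c, word_width_bits=64) for c in (2, 8)]
workloads = [Workload("placeholder-trace", reads_per_s=1e6, writes_per_s=1e4)]

sweep = build_sweep(technologies, systems, workloads)
print(f"{len(sweep)} experiments")  # 3 technologies x 2 systems x 1 workload = 6
```

Keeping the three input categories as separate records is what makes a cross-stack comparison possible: any one axis can be varied while the other two are held fixed.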

NVMExplorer then combines this information and uses existing non-volatile memory array characterization tools (e.g., NVSim) together with analytical modeling to compute key metrics (i.e., memory performance, power, area, lifetime, and accuracy) and ultimately determine the optimal embedded NVM (eNVM) configuration. These results can then be used to identify co-design opportunities across the stack, and to adjust the application, system, and technology specifications in order to explore optimizations and guide future investment. NVMExplorer also has a built-in fault injection framework that models how the reliability of different memory technologies affects application-level accuracy, such as image classification accuracy for a DNN accelerator.
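As a rough illustration of what "analytical modeling" on top of array characterization can look like, the sketch below derives memory power and lifetime from per-access numbers and workload traffic. It is a simplified model of our own for this post, not NVMExplorer's implementation: the `ArrayCharacterization` fields and both functions are hypothetical, the lifetime estimate assumes ideal wear leveling, and all numeric values are placeholders rather than characterized data.

```python
from dataclasses import dataclass

SECONDS_PER_YEAR = 365 * 24 * 3600


@dataclass(frozen=True)
class ArrayCharacterization:
    read_energy_j: float     # energy per read access
    write_energy_j: float    # energy per write access
    leakage_w: float         # static power of the array
    endurance_cycles: float  # writes a cell tolerates before wearing out
    capacity_words: int      # number of addressable words


def memory_power_w(arr, reads_per_s, writes_per_s):
    """Static power plus dynamic power from read and write traffic."""
    return (arr.leakage_w
            + reads_per_s * arr.read_energy_j
            + writes_per_s * arr.write_energy_j)


def lifetime_years(arr, writes_per_s):
    """Years until wear-out, assuming writes are spread evenly over all words."""
    if writes_per_s == 0:
        return float("inf")
    writes_per_word_per_s = writes_per_s / arr.capacity_words
    return arr.endurance_cycles / writes_per_word_per_s / SECONDS_PER_YEAR


# Placeholder values chosen only to exercise the formulas.
array = ArrayCharacterization(
    read_energy_j=1e-12, write_energy_j=1e-11, leakage_w=1e-3,
    endurance_cycles=1e6, capacity_words=1_000_000,
)
power = memory_power_w(array, reads_per_s=1e6, writes_per_s=1e4)
years = lifetime_years(array, writes_per_s=1e4)
print(f"power: {power * 1e3:.4f} mW, lifetime: {years:.2f} years")
```

Even a model this simple shows why the same technology can look attractive for a read-dominated workload and unattractive for a write-heavy one: write traffic enters both the power and the lifetime terms.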

The NVMExplorer software was designed with flexibility and usability at the forefront so that the community can interface it with additional tools. For example, the memory array characterization portion of the evaluation (which uses NVSim by default) can be swapped out for characterization tools that support 3D-integrated memory solutions, such as DESTINY or CACTI-3DD. Similarly, instead of relying on the NVMExplorer database of NVM technologies, a user could supply the results of device simulation, internal measured data, or other published results as input, for example to evaluate a novel NVM configuration. For more general or casual users, the NVMExplorer website also hosts an interactive data visualization, preloaded with data from wide-ranging sweeps of application traffic, memory architectures, and memory technology choices, which can serve as a “first-stop shop” for understanding trade-offs in the design space of NVMs.
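One common way to get this kind of swappability is to have the downstream analysis depend only on the metrics a characterization step returns, not on the tool that produced them. The sketch below shows that pattern under our own assumptions; it does not reflect how NVMExplorer actually wraps NVSim, DESTINY, or CACTI-3DD, and the names `Characterizer`, `table_backend`, `evaluate`, the `read_energy_pj` key, and the "novel-cell" entry are all hypothetical.

```python
from typing import Callable, Dict, Tuple

# A characterizer maps (technology, capacity in MB) to a dictionary of metrics.
Characterizer = Callable[[str, int], Dict[str, float]]


def table_backend(table: Dict[Tuple[str, int], Dict[str, float]]) -> Characterizer:
    """Wrap user-supplied data (e.g., internal measurements) as a characterizer."""
    def characterize(technology: str, capacity_mb: int) -> Dict[str, float]:
        try:
            return table[(technology, capacity_mb)]
        except KeyError:
            raise ValueError(f"no data for {technology} at {capacity_mb} MB") from None
    return characterize


def evaluate(characterize: Characterizer, technology: str,
             capacity_mb: int, reads_per_s: float) -> float:
    """Read power in watts; works with any backend that returns read_energy_pj."""
    metrics = characterize(technology, capacity_mb)
    return reads_per_s * metrics["read_energy_pj"] * 1e-12


# Placeholder entry standing in for measured data of a novel cell.
measured = table_backend({("novel-cell", 4): {"read_energy_pj": 2.0}})
read_power = evaluate(measured, "novel-cell", 4, reads_per_s=1e6)
print(f"read power: {read_power * 1e6:.1f} uW")
```

A wrapper around a simulator would satisfy the same `Characterizer` signature, so `evaluate` would not need to change when the backend does.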

Summary

Computer architects should be looking towards alternative memory technologies as a compelling strategy to address the memory wall, especially in the context of increasing on-chip memory capacity and efficiency. However, there are many distinct technology proposals and memory properties, which makes it challenging to compare potential solutions and match them to system and application contexts. NVMExplorer is one set of tools that enables this kind of research: it allows for comparison across technologies as well as for probing design choices across the computing stack. We hope it is a starting point for many future design space exploration, system design, and optimization efforts.

Snapshot of NVMExplorer’s interactive web tool

We recently hosted a tutorial at ISCA ’22 on June 18, 2022, and will be hosting another workshop at PACT 2022 in October. NVMExplorer is under active development, and questions and feedback can be sent to nvmexplorer@gmail.com. All information on accessing the interactive data visualization and downloading the open-source software is available on the NVMExplorer website at http://www.nvmexplorer.seas.harvard.edu.

About the Authors:

Alexander Hankin is a Postdoc at Harvard University mentored by David Brooks and Gu-Yeon Wei.

Lillian Pentecost is an Assistant Professor of Computer Science at Amherst College (starting Fall 2022).

Marco Donato is an Assistant Professor in the Department of Electrical and Computer Engineering at Tufts University.

Mark Hempstead is an Associate Professor in the Department of Electrical and Computer Engineering at Tufts University.

Gu-Yeon Wei is the Robert and Suzanne Case Professor of Electrical Engineering and Computer Science in the Paulson School of Engineering and Applied Sciences at Harvard University.

David Brooks is the Haley Family Professor of Computer Science in the School of Engineering and Applied Sciences at Harvard University.

Disclaimer: These posts are written by individual contributors to share their thoughts on the Computer Architecture Today blog for the benefit of the community. Any views or opinions represented in this blog are personal, belong solely to the blog author and do not represent those of ACM SIGARCH or its parent organization, ACM.