Networking performance is critical to distributed applications, and there are always efforts underway to improve the performance of datacenter network communication stacks. These efforts take a variety of approaches. For example, they may result in either new hardware or new software, and they may seek to modify either the compute nodes or the network fabric itself. Examples include new OS-bypass networking APIs and devices, RPC layers, I/O threading models, congestion control protocols, fabric topologies, and dynamic multipath routing policies.

Determining which of these innovations to adopt requires running distributed systems benchmarks that simulate a variety of typical datacenter workloads and analyzing their results. These critical benchmarks are often developed in an ad-hoc, piecemeal fashion that does not result in reusable common infrastructure. In short, there is a need for better open-source distributed systems benchmarks that enable reuse of existing expertise and infrastructure.

A HIGH-LEVEL OVERVIEW OF DISTBENCH

Distbench is a new open-source benchmarking tool from Google intended to reduce the effort spent on networking benchmark development, execution and analysis by both application developers and network technology developers.

Distbench is unusual in that it can produce many different network traffic patterns. To do so, it synthesizes traffic from a high-level description of the target application to emulate. It can also send that traffic over multiple RPC protocols (currently gRPC, Mercury, and Homa).

The main design goals for Distbench are ease-of-use, speed of test execution, generality of simulated application behaviors, extensibility to new networking APIs and richness of analysis. In short, Distbench should, in principle, be able to run in any cluster or cloud environment, reproduce any traffic pattern, use any RPC layer, and produce results quickly in a format that allows investigators to perform any kind of offline performance analysis.

DISTBENCH ARCHITECTURE

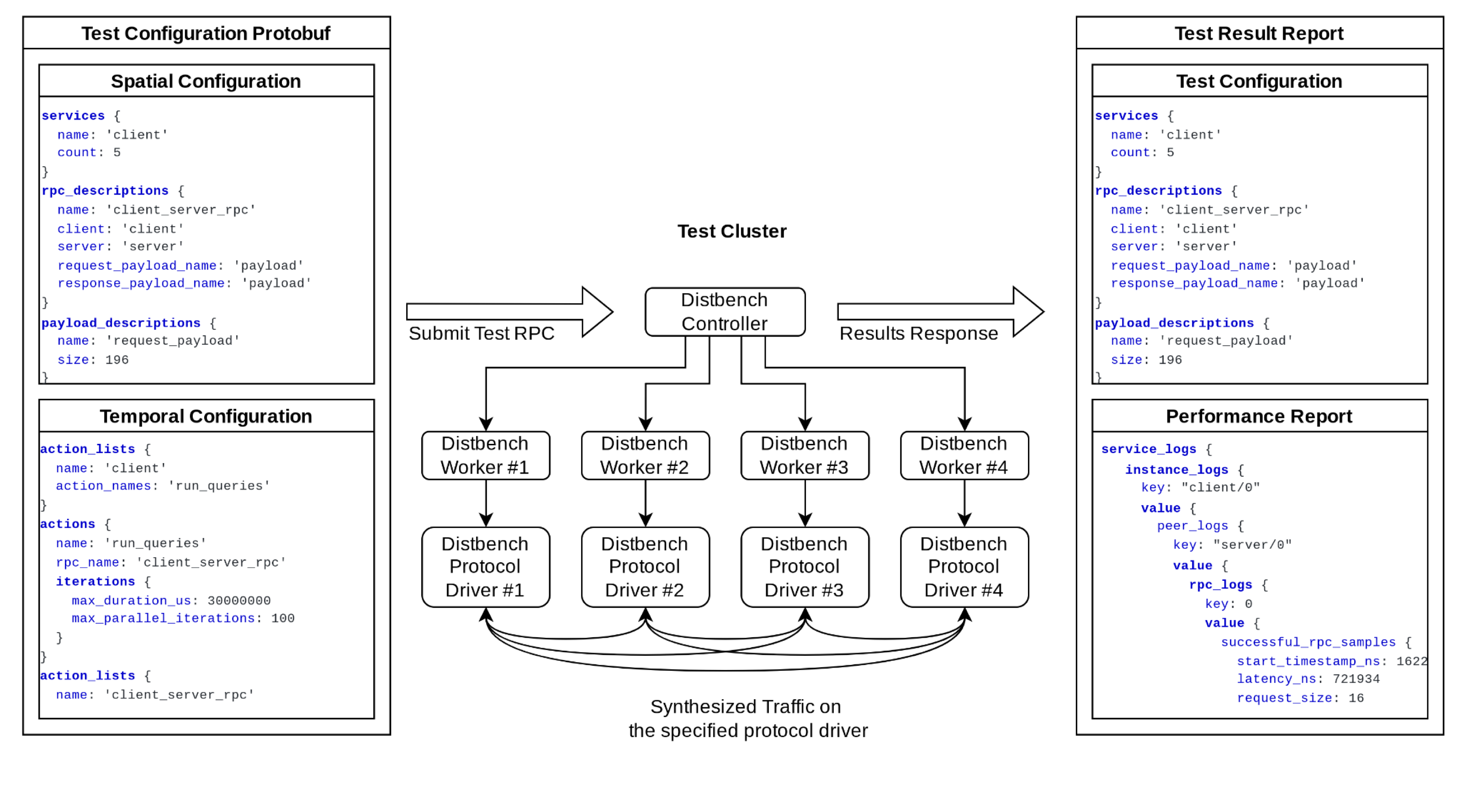

As depicted in Figure 1, Distbench functions as a distributed system that synthesizes the traffic patterns of other distributed systems. It consists of a single controller node that manages multiple worker nodes, with each worker node typically being placed on a separate physical or virtual machine. Performance experiments are started by delivering a traffic configuration message to the controller node via RPC. The controller node coordinates the activities of the worker nodes, assigning roles, introducing workers to their peers, and collecting performance data at the end of the experiment.

The controller node responds to the traffic configuration RPC with a message containing the performance results that were collected and the configuration that was tested. Once an experiment completes, another may be started by a subsequent RPC at any time, without needing to restart or reconfigure the tasks on the individual nodes or interact with any sort of cluster scheduling system. This workflow ensures that running a collection of performance experiments with Distbench is fast and easy, regardless of the underlying cluster management system, and provides several other benefits.

Figure 1: Illustration of the Distbench benchmark workflow. See github.com/google/distbench/blob/main/docs/workflow.md for more information.
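To make the controller/worker workflow above concrete, here is a toy, in-process sketch. It is not Distbench code: the `Worker` and `Controller` classes, the `run_experiment` method, and the `roles` and `rpcs_per_worker` config keys are all hypothetical names chosen for illustration, and plain method calls stand in for the RPCs that a real deployment would use.

```python
class Worker:
    """Hypothetical stand-in for a long-lived worker node."""

    def __init__(self, name):
        self.name = name
        self.experiments_run = 0

    def run(self, role, peers, config):
        # A real worker would generate traffic here; this one only
        # reports what it was asked to do.
        self.experiments_run += 1
        return {
            "worker": self.name,
            "role": role,
            "peers": len(peers),
            "rpcs_sent": config["rpcs_per_worker"],
        }


class Controller:
    """Hypothetical controller: assigns roles, introduces peers, gathers results."""

    def __init__(self, workers):
        self.workers = workers

    def run_experiment(self, config):
        roles = config["roles"]
        results = []
        for index, worker in enumerate(self.workers):
            role = roles[index % len(roles)]  # round-robin role assignment
            peers = [p.name for p in self.workers if p is not worker]
            results.append(worker.run(role, peers, config))
        # The reply carries both the results and the configuration tested.
        return {"config": config, "results": results}


workers = [Worker("w0"), Worker("w1"), Worker("w2")]
controller = Controller(workers)

# Two experiments run back to back against the same workers,
# with no restart or reconfiguration in between.
first = controller.run_experiment({"roles": ["client", "server"], "rpcs_per_worker": 100})
second = controller.run_experiment({"roles": ["client", "server"], "rpcs_per_worker": 5})
```

The point of the sketch is the shape of the interaction: one request in, one reply out containing results plus configuration, and workers that persist across experiments.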

SUPPORT FOR MULTIPLE PROGRAMMABLE TRAFFIC PATTERNS

Distbench is designed to synthesize network traffic starting from an abstract description of the interactions between multiple services, each of which may be replicated and distributed across multiple physical or virtual nodes. The traffic description includes details of the logical services, physical colocation of services, RPC handler actions (e.g., initiation of dependent RPCs), request and response payload sizes, fan-in/fan-out, and more. The current version of the traffic pattern description focuses mainly on modeling network transfers, including the correct handling of dependencies between them, but recently added support also allows simulating simple CPU and memory bandwidth bottlenecks and antagonists.
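As a rough illustration of what such an abstract description might capture, the sketch below models services, payload sizes, fan-out, and dependent RPCs with plain Python dataclasses. This is not the Distbench configuration format: every class, field, and service name here is an assumption made up for the example.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Service:
    name: str
    replicas: int  # number of instances of this logical service


@dataclass
class RpcSpec:
    name: str
    client: str
    server: str
    request_bytes: int
    response_bytes: int
    fanout: int = 1  # server replicas contacted per invocation
    dependent_rpcs: List[str] = field(default_factory=list)  # issued by the handler


@dataclass
class TrafficPattern:
    services: Dict[str, Service]
    rpcs: Dict[str, RpcSpec]

    def bytes_per_invocation(self, rpc_name: str) -> int:
        """Payload bytes moved by one invocation, including dependent RPCs."""
        rpc = self.rpcs[rpc_name]
        per_copy = rpc.request_bytes + rpc.response_bytes
        per_copy += sum(self.bytes_per_invocation(d) for d in rpc.dependent_rpcs)
        return rpc.fanout * per_copy


# A hypothetical two-tier pattern: each "search" request makes the
# frontend handler fan out a "lookup" RPC to four backend replicas.
pattern = TrafficPattern(
    services={
        "client": Service("client", 1),
        "frontend": Service("frontend", 1),
        "backend": Service("backend", 4),
    },
    rpcs={
        "search": RpcSpec("search", "client", "frontend", 100, 1000,
                          dependent_rpcs=["lookup"]),
        "lookup": RpcSpec("lookup", "frontend", "backend", 50, 200, fanout=4),
    },
)

total_bytes = pattern.bytes_per_invocation("search")
```

Here one `search` moves 1,100 bytes of its own payload plus 4 × 250 bytes of dependent `lookup` traffic, for 2,100 bytes in total. A description at this level says nothing about which RPC layer carries the bytes.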

The end goal is for application developers to be able to publish Distbench-compatible traffic pattern configurations as an alternative to releasing and maintaining special versions of their own binaries and datasets for benchmarking purposes. The community can then benefit from benchmarks that are easy to run with results that are more predictive of real application performance.

EXTENSIBLE SUPPORT FOR MULTIPLE RPC LAYERS/IMPLEMENTATIONS

Using an abstract description of network traffic allows Distbench to synthesize that traffic via different underlying RPC layers. The RPC layer used in an experiment is simply specified in its configuration, and a single Distbench binary can produce traffic using any supported RPC layer. Distbench currently supports gRPC (with multiple threading models), Homa, and Mercury in the open-source version, with Google’s internal version adding support for Stubby and other experimental protocols.

Distbench supports multiple RPC layers via an abstraction called the protocol driver. Protocol drivers are easy to write, so the developer of a new RPC layer can get access to a library of traffic patterns with at most a few days' worth of coding by adopting Distbench. The protocol driver abstraction is also designed to support sending traffic through network simulators, meaning that, in the future, Distbench may be able to help run experiments on novel network fabrics before they are built.
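The general idea of a driver abstraction can be sketched in a few lines. The interface below is an assumption for illustration only and does not mirror the actual Distbench protocol driver API: `ProtocolDriver`, `set_handler`, `send_rpc`, and the in-process `LoopbackDriver` are hypothetical names.

```python
from abc import ABC, abstractmethod
from typing import Callable, Dict, List


class ProtocolDriver(ABC):
    """Hypothetical minimal interface that a traffic generator codes against."""

    @abstractmethod
    def set_handler(self, handler: Callable[[bytes], bytes]) -> None:
        """Register the function that serves incoming requests."""

    @abstractmethod
    def send_rpc(self, peer: str, request: bytes) -> bytes:
        """Send one request to a peer and return its response."""


class LoopbackDriver(ProtocolDriver):
    """In-process stand-in for a real transport such as gRPC."""

    _registry: Dict[str, "LoopbackDriver"] = {}

    def __init__(self, address: str):
        self.address = address
        self.handler = None
        LoopbackDriver._registry[address] = self

    def set_handler(self, handler):
        self.handler = handler

    def send_rpc(self, peer, request):
        # Deliver the request directly to the peer's registered handler.
        return LoopbackDriver._registry[peer].handler(request)


def run_pattern(make_driver: Callable[[str], ProtocolDriver], count: int) -> List[bytes]:
    """A traffic pattern written once, against the interface only."""
    server = make_driver("server")
    client = make_driver("client")
    server.set_handler(lambda request: b"ok:" + request)
    return [client.send_rpc("server", bytes([i]) * 4) for i in range(count)]


responses = run_pattern(LoopbackDriver, 2)
```

Because `run_pattern` touches only the abstract interface, swapping in a different transport, or a driver backed by a network simulator, would mean passing a different factory rather than rewriting the pattern.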

COMMON HIGH-FIDELITY FORMAT FOR PERFORMANCE RESULTS

The data formats used to store network benchmark results are often overlooked as an area for innovation, but they are in fact critical to the ability to detect and analyze performance anomalies.

The architecture of Distbench makes comparing N traffic patterns across K RPC layers possible with only O(N+K) development effort instead of the traditional O(N*K). New traffic patterns can be tested against multiple RPC implementations, and new RPC implementations can be tested against a library of traffic patterns with very little incremental effort. However, one challenge is that, with such a wide range of traffic patterns and associated performance metrics, it is not feasible to hardcode support for computing every possible metric. Distbench does produce basic summary statistics of latency and bandwidth, which may be sufficient for experiments with simple traffic patterns, but any deeper analysis must be done offline, which in turn requires storing the raw performance data in some compact lossless format.
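The O(N+K) versus O(N*K) claim is simple counting, as the small sketch below shows. The function name and the example values of N and K are made up for illustration; the units are "pieces of code someone has to write", not a measured effort.

```python
def integration_effort(num_patterns: int, num_rpc_layers: int):
    """Count the pieces of code needed to cover every (pattern, layer) pair."""
    # Traditional approach: one bespoke benchmark per combination.
    pairwise = num_patterns * num_rpc_layers
    # Shared-abstraction approach: one description per traffic pattern
    # plus one driver per RPC layer.
    shared = num_patterns + num_rpc_layers
    return pairwise, shared


# Hypothetical example: 10 traffic patterns and 4 RPC layers.
pairwise, shared = integration_effort(10, 4)
```

With these numbers, 40 bespoke benchmarks shrink to 14 reusable pieces, and adding an eleventh traffic pattern costs one new description rather than four new benchmarks.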

Distbench records its raw performance data in a highly structured format designed to be both rich in detail and space-efficient. The design of this format could be its own whitepaper, but the highlights are that it can record timing data, client and server identity, bytes transferred, and the pass/fail results for each individual RPC. It can also track the dependencies between the many RPCs that may arise in a given RPC tree. Distbench collects this data without depending on an external RPC tracing service, and, to save space and overhead, it further allows RPC tracing to be sampled periodically.
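To show why per-RPC records make offline analysis possible, here is a minimal sketch of such a record and two analyses over it. The `RpcRecord` fields, the every-Nth sampling rule, and the helper functions are assumptions for illustration; the actual Distbench results format is not reproduced here.

```python
import math
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class RpcRecord:
    """Hypothetical per-RPC record; not the Distbench results schema."""
    rpc_id: int
    parent_id: Optional[int]  # RPC whose handler issued this one, if any
    client: str
    server: str
    start_ns: int
    end_ns: int
    request_bytes: int
    response_bytes: int
    ok: bool


def should_trace(rpc_index: int, sample_every: int) -> bool:
    """Assumed periodic sampling rule: trace every Nth RPC."""
    return rpc_index % sample_every == 0


def latencies_ns(records: List[RpcRecord]) -> List[int]:
    """Sorted latencies of the RPCs that succeeded."""
    return sorted(r.end_ns - r.start_ns for r in records if r.ok)


def percentile(sorted_values: List[int], pct: float) -> int:
    """Nearest-rank percentile over an already sorted list."""
    if not sorted_values:
        raise ValueError("no samples")
    rank = max(1, math.ceil(pct / 100 * len(sorted_values)))
    return sorted_values[rank - 1]


def children_of(records: List[RpcRecord], parent_id: int) -> List[int]:
    """IDs of the RPCs issued while handling the given parent RPC."""
    return [r.rpc_id for r in records if r.parent_id == parent_id]


# One root RPC whose handler issued three dependent RPCs, one of which failed.
records = [
    RpcRecord(1, None, "client/0", "frontend/0", 0, 1000, 100, 1000, True),
    RpcRecord(2, 1, "frontend/0", "backend/0", 100, 400, 50, 200, True),
    RpcRecord(3, 1, "frontend/0", "backend/1", 100, 700, 50, 200, True),
    RpcRecord(4, 1, "frontend/0", "backend/2", 100, 900, 50, 0, False),
]

median_ns = percentile(latencies_ns(records), 50)
dependents = children_of(records, 1)
```

Neither the percentile calculation nor the dependency lookup had to be anticipated by the benchmark itself; both fall out of having the raw records, which is the argument for a lossless format.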

The Distbench results format can be converted into any format that an existing analysis tool can accept and can be retrofitted into existing benchmarks fairly easily. Tools that accept the Distbench format natively have the advantage of being able to refer to the traffic configuration metadata, which can be used to produce performance dashboards automatically for arbitrary traffic patterns.

CURRENT STATUS AND FUTURE WORK

Distbench can currently synthesize a wide variety of traffic patterns built out of traditional single-request single-response RPCs and simulate simple CPU/memory resource consumption and antagonism. Work is ongoing to calibrate Distbench against existing benchmarks to ensure that it produces consistent results, to add support for additional RPC protocols, and to add support for additional networking paradigms, including streaming RPCs and RDMA. These efforts will add significant capabilities to the tool, but even in its current state, Distbench has already shown significant promise within Google for investigating cloud VM networking performance, novel RPC layers, threading models, and network offloads.

The Distbench source is available for download at http://github.com/google/distbench. This repository is open to contributions.

About the author: Daniel Manjarres is a senior software engineer at Google, where he works on datacenter networking performance measurement and analysis tools. Outside of work he studies world history and searches for the perfect bassline.

Disclaimer: These posts are written by individual contributors to share their thoughts on the Computer Architecture Today blog for the benefit of the community. Any views or opinions represented in this blog are personal, belong solely to the blog author and do not represent those of ACM SIGARCH or its parent organization, ACM.