Overview

Reliability is essential for computing. However, as technology nodes have scaled, there have been several fundamental physical challenges to overcome to provide the abstraction of reliability. One such challenge has been the emergence of marginal defect-driven faults that are difficult to address solely using best practices in manufacturing test and screening. We first frame the problem statement and highlight the importance of fault root causes. We then discuss defects observed, the role of test and design to address this problem, and identify research opportunities.

Background

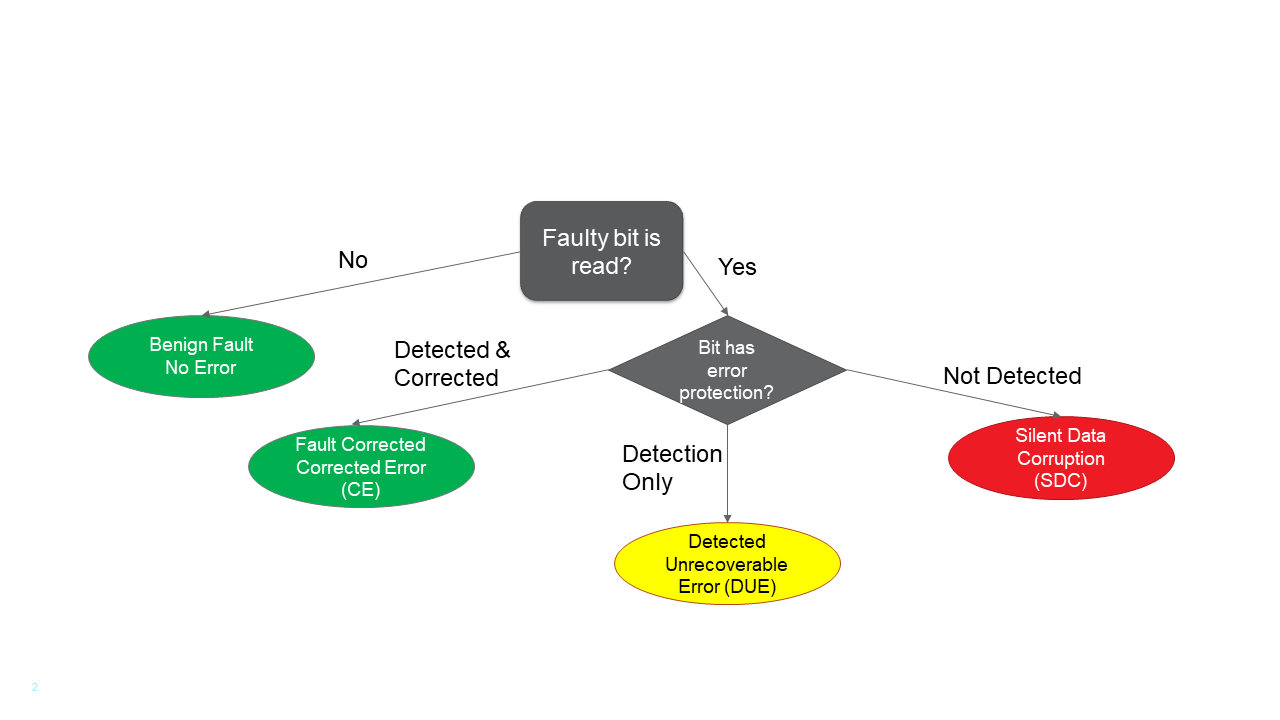

A fault is a condition that causes the inability to meet a specification. An example of a specification is the correct operation of the hardware of a microprocessor. Each fault has a root cause. A well-understood root cause of faults that occur in a processor is a high-energy particle, such a neutron or alpha particle. A silicon defect is another root cause. A fault may be transient, intermittent, or permanent based on how often the fault appears and is closely tied to its root cause. For example, high energy particles tend to produce transient faults, whereas defects can cause intermittent or permanent faults. An error is the symptom of a fault. There are several possible symptoms, which can be understood from the taxonomy shown below, which is adapted from here.

If a fault is never read or accessed, it is said to be benign and does not produce an error. When the fault is read, there are several possible scenarios based on whether the bit has any type of protection. If the fault is detected and corrected, the outcome is said to be a Corrected Error (CE). An example protection technique that can provide this capability is an Error Correcting Code (ECC). If a bit is detected but is not correctable, the outcome is said to be a Detected but Unrecoverable Error (DUE). For example, parity can provide only detection. If the fault is read but goes undetected, the outcome could be Silent Data Corruption (SDC). An SDC is the worst possible outcome of a fault, as it can have an arbitrary impact on the correctness of software running on the hardware.

Therefore, the occurrence of a CE, DUE, or SDC depends on both the fault experienced by the bit and the type of protection (if any) for that bit. Hence, reasoning about errors in a processor requires understanding what types of faults the hardware may experience, which in turn, depends on the root cause of the fault.

Modern high-performance microprocessors are typically designed with specific Reliability, Availability, and Serviceability (RAS) goals that then get translated into protection decisions for each flop or structure where a fault becomes a CE or a DUE, while ensuring that the SDC risk is minimized. While the goals and choice of protection schemes are implementation specific, industry best-practices such as Architecture Vulnerability Factor (AVF) modeling exist to guide these choices.

The Emergence of Defect Driven Faults

A key focus of RAS design has been to cope with particle-induced transient faults (aka “soft errors”). AVF modeling was developed for such faults. Other root causes, such as defects, have been assumed to be caught by techniques such as burn-in and wafer level testing, such as scan, prior to shipping silicon to customers. This has allowed RAS design to focus on faults that cannot be eliminated or sufficiently reduced through these other approaches. Recently, Meta and Google reported the occurrence of SDCs in their fleet, which raised alarm bells that there may be hitherto unknown root causes for faults in modern processors.

Marginal defects are some of the root causes for these faults. A marginal defect can cause a fault under specific conditions of temperature, voltage, frequency, workload patterns, etc. Marginal defects tend to produce intermittent faults. In addition to defects, prior research has also shown that degradation and lifetime reliability are concerns at scaled process technologies and can induce both intermittent and permanent faults over the service life of the processor . Therefore, achieving reliability and resiliency requires being cognizant of multiple root causes, the types of faults they induce, and the errors that result based on where the faults occur in a processor.

Testing is Important

Testing is a way to identify the presence of defects. Knowledge of root causes is important for effective testing. For example, let us consider a type of marginal defect – a Small Delay Defect (SDD). An SDD is a defect that can cause a delay that is smaller than the clock cycle time for a given process node – a delay fault. Automated Test Pattern Generation (ATPG) techniques can be used to test for delay faults by prioritizing paths with minimal timing slack. ATPG can be used to test for other types of defects using appropriate representative fault models.

In addition to advancements in ATPG targeting new defect modes, it is important to improve the methods used to accelerate the activation of faults from defects. Improvements in the evaluation and implementation of burn-in coverage can help in this regard. Finally, in addition to manufacturing test, acceptance testing at the customer will be an important tool in the arsenal for catching defects that may have evaded earlier tests and any potential degradation effects that may occur in the field.

While testing is important, exhaustive testing is challenging given the sheer size of modern processor designs. Testing for marginal defects requires sweeping a wide range of voltages, temperatures, workload patterns, etc., which add to the overall testing challenge. The differences in the environment and conditions between Automated Test Equipment (ATE) and regular server platforms also provide challenges. For example, modern processors use complex power management algorithms when running production workloads. This interaction of power management mechanisms, environmental conditions, and workload behavior can give rise to specific conditions where a marginal defect can induce a fault that may be very challenging to replicate using scan-based testing techniques on ATEs. Therefore, testing alone is unlikely to be the definitive solution to address these new faults.

Supplementing Testing With Resilient Design

Once faults and their root causes are understood, there is the potential to remediate them through resilient design. Enhancing the RAS design of a processor that is cognizant of new faults can also improve the observability of existing system level tests, which in turn, can help catch defects during manufacturing tests. As with testing, the knowledge of root causes drives specific RAS actions. For example, let’s consider an array structure (e.g., a cache). If it is known most faults that occur in that array are particle-induced transients impact a single bit when data is read, a Single-Error Correct-Double Error Detect (SEC-DED) Error Correcting Code (ECC) may be sufficient to provide effective remediation against such faults. However, if it is known that the root cause is a multi-bit transient fault, a stronger ECC may be needed. If permanent faults are a concern, repair mechanisms may be incorporated.

There exist pervasive redundancy techniques to detect faults anywhere within the processor and have commercial implementations (e.g., core lock-stepping). Such techniques tend to entail significant performance/power overheads and can be prohibitively expensive for the broader server market. However, it is imperative that computing be reliable, and the risks posed by faults from marginal defects be addressed. Here, history can be a guide.

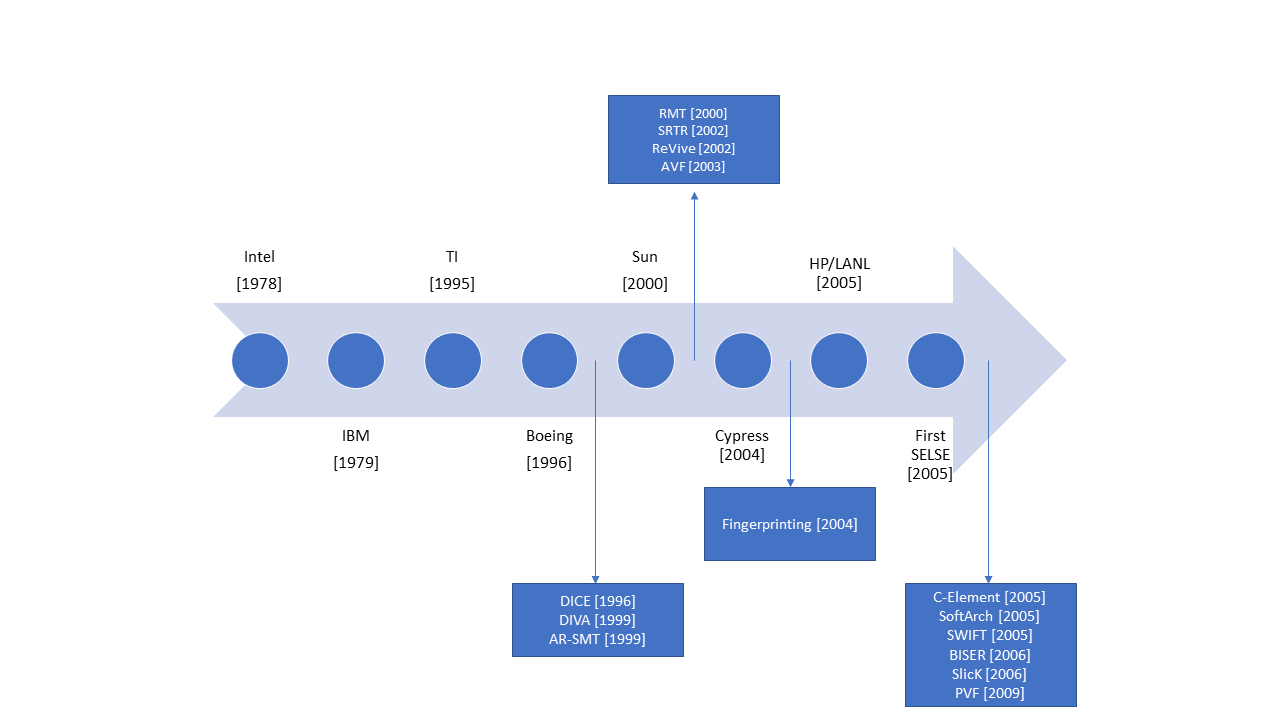

The figure below presents a historical retrospective of the soft error problem as a timeline. The circles denote publicly reported incidents of soft errors by various sources. The data in the figure is synthesized from here. The boxes contain select papers on fault modeling and mitigation and their year of publication.

A pivotal moment was in the early 2000s, where there were several high-profile incidents of failures in large scale systems and from prominent processor manufacturers. Once the failures were root caused to high-energy particles, there was a strong push from both industry and the academic community to explore fault modeling techniques to reason about such faults and designing for resilience.

There were two key conclusions from this research, which have now become industry best practices:

- Understanding the root cause and the associated fault characteristics was important because that then paved the way for systematic and quantitative approaches to fault modeling.

- Targeted protection of the parts of the processor that are vulnerable to the faults allows one to design hardware for a given reliability target.

Opportunities for Research

We are now at a moment in time not unlike the early 2000s. We have new faults, resulting from new root causes. Testing is a key pillar to combat these issues, but a combination of test and RAS is required to address the problem comprehensively. Both test and RAS must be grounded on root causes. Resiliency and reliability must be achieved, but not at the expense of high performance and energy-efficiency.

Therefore, resilient design must be carried out in a thoughtful and targeted way, with quantifiable goals and the means to measure whether the design choices made satisfy those goals. To achieve these outcomes, we believe research is required to develop systematic pre-silicon fault modeling techniques. Being able to ascertain the impact faults from these new root causes can help both improved RAS and test. Research is also required on exploring how one can effectively combine RAS and test and explore the space of resilience techniques that can pave the way for reliable and efficient computing at scale.

About the Authors: Sudhanva Gurumurthi is a Fellow at AMD, where he leads RAS advanced development. Vilas Sridharan is an AMD Senior Fellow and leads the RAS Architecture team. Sankar Gurumurthy is a Director and leads the AMD FailSafe Initiative, covering RAS and test.

© 2023 Advanced Micro Devices, Inc. All rights reserved. AMD, the AMD Arrow logo, and combinations thereof are trademarks of Advanced Micro Devices, Inc. Other product names used in this publication are for identification purposes only and may be trademarks of their respective companies.

AMD Disclaimer

The information presented in this document is for informational purposes only and may contain technical inaccuracies, omissions, and typographical errors. The information contained herein is subject to change and may be rendered inaccurate for many reasons, including but not limited to product and roadmap changes, component and motherboard version changes, new model and/or product releases, product differences between differing manufacturers, software changes, BIOS flashes, firmware upgrades, or the like. Any computer system has risks of security vulnerabilities that cannot be completely prevented or mitigated. AMD assumes no obligation to update or otherwise correct or revise this information. However, AMD reserves the right to revise this information and to make changes from time to time to the content hereof without obligation of AMD to notify any person of such revisions or changes.

THIS INFORMATION IS PROVIDED ‘AS IS.” AMD MAKES NO REPRESENTATIONS OR WARRANTIES WITH RESPECT TO THE CONTENTS HEREOF AND ASSUMES NO RESPONSIBILITY FOR ANY INACCURACIES, ERRORS, OR OMISSIONS THAT MAY APPEAR IN THIS INFORMATION. AMD SPECIFICALLY DISCLAIMS ANY IMPLIED WARRANTIES OF NON-INFRINGEMENT, MERCHANTABILITY, OR FITNESS FOR ANY PARTICULAR PURPOSE. IN NO EVENT WILL AMD BE LIABLE TO ANY PERSON FOR ANY RELIANCE, DIRECT, INDIRECT, SPECIAL, OR OTHER CONSEQUENTIAL DAMAGES ARISING FROM THE USE OF ANY INFORMATION CONTAINED HEREIN, EVEN IF AMD IS EXPRESSLY ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

Disclaimer: These posts are written by individual contributors to share their thoughts on the Computer Architecture Today blog for the benefit of the community. Any views or opinions represented in this blog are personal, belong solely to the blog author and do not represent those of ACM SIGARCH or its parent organization, ACM.