For decades, memory systems have relied on DRAM for capacity and SRAM for speed, and then turned programmers loose with malloc(), free(), and pthreads to build an amazing array of carefully tuned, composable, and remarkably useful data structures. However, these data structures have been transient, swept away by the next reboot or system crash. To build something that lasts, programmers have worked with clunkier interfaces (open(), close(), read(), write()) to access glacially slow spinning or, lately, solid-state disks.

But things are about to change.

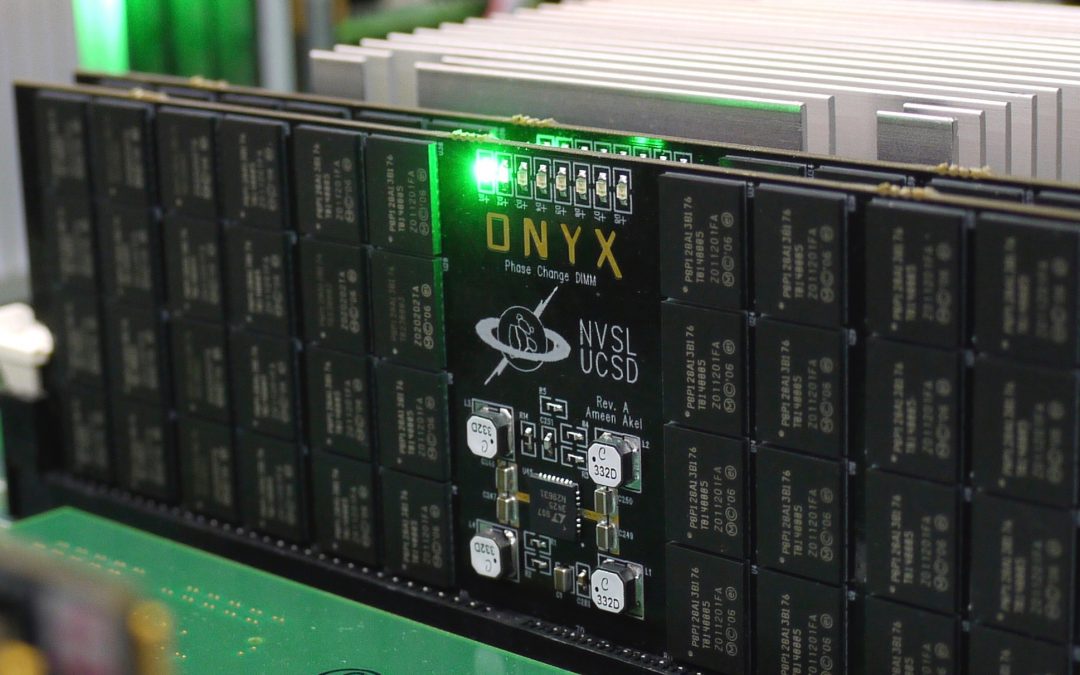

Non-volatile main memory (NVMM) technologies like phase change memory and Intel and Micron’s 3D XPoint memory are coming out of the lab and onto the processor memory bus. When Intel releases 3D XPoint DIMMs next year, they will change memory hierarchies in two ways: one incremental and one revolutionary. The incremental change will be a boost in main memory capacity, since 3D XPoint is denser than DRAM. The revolutionary change is that 3D XPoint is persistent: the bits it stores stay put when power goes away.

Adding persistence to the memory hierarchy will have a transformative impact on nearly all aspects of how we design and program computer systems, because memory is central to all aspects of a computer’s operation: Processors operate on it, peripherals DMA in and out of it, programmers manage it, operating systems virtualize it, etc. Persistent memory requires adjustments in how all these pieces work and work together.

Most significantly, persistent main memory will lead to the unification of storage and memory. Since the appearance of semiconductor memories, the orders-of-magnitude latency gap between memory and disks has kept storage and memory separate. Persistent memory technologies will be a bit slower than DRAM, but they will be fast enough to keep up with processors. As a result, the boundary between storage and memory will become extremely fuzzy.

Merging storage and memory will lead to changes in how computer architects, system programmers, and application programmers deal with persistent state. Over the course of several blog posts, I am going to discuss the problems that these and other groups will grapple with as NVMM becomes more common. We will start by considering the programming interface for NVMM.

How to Program With Persistent Memory?

The changes persistent memory brings to programming won’t happen overnight. Here is a likely roadmap for how they will unfold and the research questions that arise along the way.

Stage 1: Legacy Support and Giant Memory

Neither of the easiest ways to use NVMM is satisfying, since each requires ignoring either its memory characteristics or its persistence.

DRAM-starved applications will leverage NVMM’s density to increase memory capacity. Since NVMM will be somewhat slower than DRAM, the OS and system libraries will need to let applications choose what to allocate where. Exercising that choice poses interesting data placement problems.
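To make the placement problem concrete, here is a minimal sketch of one naive policy: given each object's size and an estimate of how often it is accessed, greedily give the DRAM budget to the objects with the highest access density (accesses per byte) and spill everything else to NVMM. The `obj` struct, the assumption that access rates are known in advance, and the greedy rule itself are illustrative, not a real OS or library interface.

```c
#include <stddef.h>
#include <stdlib.h>

enum tier { TIER_DRAM, TIER_NVMM };

/* Hypothetical description of an allocation the application wants placed. */
struct obj {
    size_t size;          /* bytes */
    double accesses;      /* estimated accesses per second (assumed known) */
    enum tier placement;  /* filled in by place_objects() */
};

/* qsort comparator: highest access density (accesses per byte) first. */
static int by_density_desc(const void *a, const void *b) {
    const struct obj *x = a, *y = b;
    double dx = x->accesses / (double)x->size;
    double dy = y->accesses / (double)y->size;
    return (dx < dy) - (dx > dy);
}

/* Greedy placement: walk objects from hottest to coldest per byte and put
   each in DRAM if it still fits in the budget, otherwise in NVMM.
   Reorders objs in place and returns the number of DRAM bytes used. */
static size_t place_objects(struct obj *objs, size_t n, size_t dram_budget) {
    qsort(objs, n, sizeof objs[0], by_density_desc);
    size_t used = 0;
    for (size_t i = 0; i < n; i++) {
        if (used + objs[i].size <= dram_budget) {
            objs[i].placement = TIER_DRAM;
            used += objs[i].size;
        } else {
            objs[i].placement = TIER_NVMM;
        }
    }
    return used;
}
```

A real policy would need better access estimates than a single number per object and would have to revisit its decisions as access patterns change, which is where the interesting research questions lie.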

Alternately, IO-intensive applications can use a normal file system to leverage NVMM’s persistence while ignoring the fact that it is a memory. Linux and Windows already allow conventional file systems to run on NVMM-backed block devices (i.e., a persistent RAM disk), providing a performance boost without changing a line of code.

Unfortunately, conventional file systems (e.g., ext4, NTFS) are saddled with 50 years of disk-oriented “optimizations” that add microseconds to common operations. For millisecond disk drives or tens-of-microsecond SSDs, this extra latency is irrelevant, but not for NVMM.
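A back-of-the-envelope calculation shows why. Suppose, purely for illustration, that a conventional file system adds a fixed software overhead of t_sw = 1 µs per operation, and that a device access takes about 5 ms on a disk, 50 µs on an SSD, and 0.3 µs on NVMM (assumed round numbers, not measurements). The fraction f of each operation spent in software overhead is then:

```latex
f = \frac{t_{sw}}{t_{sw} + t_{dev}}
\qquad
f_{\text{disk}} = \frac{1}{1 + 5000} \approx 0.02\%,
\quad
f_{\text{SSD}} = \frac{1}{1 + 50} \approx 2\%,
\quad
f_{\text{NVMM}} = \frac{1}{1 + 0.3} \approx 77\%
```

Under these assumptions, the same overhead that is noise for a disk accounts for most of the latency of an NVMM access.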

NVMM-specific file systems can do much better. Our group has released NOVA, an NVMM file system that is between 10% and 10x faster than ext4 and XFS on IO-intensive benchmarks.

Stage 2: DAX and Expert Programmers

Fully exploiting NVMM requires more extensive changes to how programs interact with persistent data.

The first step is file system support for direct access (DAX). DAX lets a program map the physical pages that hold a file into its address space with mmap(). DAX mmap differs from conventional mmap in one critical regard: loads and stores affect storage (i.e., NVMM) directly, rather than being buffered in DRAM.
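The sketch below shows the basic pattern using only standard POSIX calls: open a file, size it, map it with MAP_SHARED, update it with ordinary stores, and call msync() to ask for durability. The function name `store_via_mmap` is hypothetical, as is the idea of pointing it at a file on a DAX-mounted file system (for example ext4 or XFS mounted with `-o dax`, at a path such as /mnt/pmem/log). On an ordinary file system the same code still works, but the stores land in the page cache and msync() writes them back. On Linux, a program can additionally pass MAP_SHARED_VALIDATE | MAP_SYNC to insist on a true DAX mapping; this sketch omits that so it runs anywhere.

```c
#include <fcntl.h>
#include <stdlib.h>
#include <string.h>
#include <sys/mman.h>
#include <unistd.h>

/* Map the first `len` bytes of the file at `path` and store `msg` there
   with plain memory writes. With DAX, these stores reach NVMM directly
   instead of going through a DRAM page cache. Returns 0 on success. */
static int store_via_mmap(const char *path, const char *msg, size_t len) {
    if (len == 0) return -1;
    int fd = open(path, O_RDWR | O_CREAT, 0600);
    if (fd < 0) return -1;
    if (ftruncate(fd, (off_t)len) != 0) { close(fd); return -1; }

    char *p = mmap(NULL, len, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
    if (p == MAP_FAILED) { close(fd); return -1; }

    strncpy(p, msg, len - 1);   /* ordinary stores into the mapped file */
    p[len - 1] = '\0';

    int rc = msync(p, len, MS_SYNC);  /* request that the stores be durable */
    munmap(p, len);
    close(fd);
    return rc;
}
```

Note that nothing in this code mentions read() or write(): once the file is mapped, updating persistent data is just a store.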

DAX mmap enables something entirely new: A hybrid address space that contains regions of volatile DRAM and regions of non-volatile memory that belong to one or more mapped files.

Hybrid address spaces make it possible to build persistent versions of all the sophisticated data structures of the last 70 years.

Hybrid address spaces also bring new kinds of bugs and new responsibilities for the programmer. Novel bugs arise because pointers between NVMM regions are inherently unsafe, as are pointers from NVMM into DRAM: a region may be mapped at a different address (or not at all) the next time the program runs, and DRAM contents vanish on reboot. Existing programming languages cannot prevent the creation of these pointers, so the programmer has to “do the right thing” or risk permanently corrupting her carefully crafted data structure. Persistence also makes familiar bugs more dangerous: memory leaks and pointer manipulation errors are permanent.
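One common workaround for pointers within a single region is to store offsets from the region's base instead of raw addresses, and convert them to pointers only against the region's current mapping. The minimal sketch below assumes a hypothetical layout in which the first 8 bytes of the region hold the offset of a linked list's head, and offset 0 doubles as NULL. `simulate_remap` stands in for the region being mapped at a new address on the next run by copying it and freeing the original. Offsets only solve the intra-region case; they say nothing about pointers into DRAM or into other regions.

```c
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

typedef uint64_t poff_t;  /* offset from region base; 0 means NULL */

struct node {
    int64_t value;
    poff_t next;          /* offset of the next node, or 0 at end of list */
};

/* Translate between offsets and raw pointers for the CURRENT mapping. */
static void *off_to_ptr(void *base, poff_t off) {
    return off ? (char *)base + off : NULL;
}
static poff_t ptr_to_off(void *base, const void *p) {
    return p ? (poff_t)((const char *)p - (const char *)base) : 0;
}

/* Sum the list whose head offset is stored in the region's first 8 bytes. */
static int64_t sum_list(void *base) {
    poff_t head;
    memcpy(&head, base, sizeof head);
    int64_t sum = 0;
    for (struct node *n = off_to_ptr(base, head); n; n = off_to_ptr(base, n->next))
        sum += n->value;
    return sum;
}

/* Simulate remapping: copy the region to a new address and release the
   old one. On failure returns NULL and leaves `old` untouched. */
static void *simulate_remap(void *old, size_t size) {
    void *fresh = malloc(size);
    if (!fresh) return NULL;
    memcpy(fresh, old, size);
    free(old);
    return fresh;
}
```

Had `next` held raw addresses, every link would dangle after `simulate_remap`; with offsets, the copied list is still traversable.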

The programmer must also build data structures that can survive power failures. The most common approach is to identify operations that move the structure from one consistent state to another and then rely on a library, a runtime system, or careful programming to make those operations atomic.
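Undo logging is one standard way to make such an operation atomic: save the old value, mark the log live, update in place, then retire the log; on restart, roll back any update whose log is still live. The sketch below is a minimal single-threaded illustration under stated assumptions. `persist()` is a hypothetical stand-in for a real persist barrier (on real NVMM, something like libpmem's pmem_persist(), or CLWB followed by SFENCE on x86); here it is only a fence, so the code demonstrates ordering, not durability. The `account_pair` record and the `crash_midway` flag used to simulate power loss are also illustrative.

```c
#include <stdatomic.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical persist barrier: a fence only, NOT a cache flush. */
static void persist(const void *addr, size_t len) {
    (void)addr; (void)len;
    atomic_thread_fence(memory_order_seq_cst);
}

/* Two fields that must change together; both would live in NVMM. */
struct account_pair { int64_t a, b; };

/* One-entry undo log, also in NVMM. */
struct undo_log {
    int valid;                 /* 1 while an update is in flight */
    struct account_pair old;   /* pre-image of the record */
};

/* Move `amount` from a to b. If crash_midway is set, stop after the first
   write to simulate power loss in the middle of the update. */
static void transfer(struct account_pair *p, struct undo_log *ulog,
                     int64_t amount, int crash_midway) {
    ulog->old = *p;                          /* 1. save the pre-image */
    persist(&ulog->old, sizeof ulog->old);
    ulog->valid = 1;                         /* 2. mark the log live */
    persist(&ulog->valid, sizeof ulog->valid);
    p->a -= amount;                          /* 3. update in place */
    persist(&p->a, sizeof p->a);
    if (crash_midway) return;
    p->b += amount;
    persist(&p->b, sizeof p->b);
    ulog->valid = 0;                         /* 4. commit: retire the log */
    persist(&ulog->valid, sizeof ulog->valid);
}

/* Run at startup: roll back any update that never committed. */
static void recover(struct account_pair *p, struct undo_log *ulog) {
    if (ulog->valid) {
        *p = ulog->old;
        persist(p, sizeof *p);
        ulog->valid = 0;
        persist(&ulog->valid, sizeof ulog->valid);
    }
}
```

The ordering is the whole point: if the log were marked live before the pre-image was persisted, a crash could leave recovery restoring garbage.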

Researchers have already mapped the broad outlines of the challenges of programming in hybrid address spaces, and prototype systems like NVHeaps and NVMDirect let the programmer avoid these bugs and define atomic sections, but neither these nor any comparable competitor is ready for prime time.

For now, Intel has released a suite of lower-level tools at pmem.io that provide basic memory allocation and logging facilities, and if you are brave, careful, and know what you are doing, you can use them to build complex data structures.

Using these tools is still cumbersome and difficult. To put it in perspective, building NVMM data structures is (at least) as hard as building lock-free data structures. Few programmers have the skill or patience to do so without significant help from libraries, languages, and tools. Instead, in the near term a few specialist programmers will build container libraries (e.g., a persistent version of C++’s STL) that programmers can use.

If a normal programmer finds that these data structures don’t fit a particular need, it will be extremely difficult to build a custom alternative.
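Part of what such a container library hides is commit protocol. As a minimal sketch (a hypothetical `pvec`, not any real library's API), an append-only persistent vector can write and persist the new element first and only then bump its length with a single aligned 8-byte store, which acts as the commit point. This assumes a single writer and, as is commonly the case on NVMM platforms, that an aligned 8-byte store is failure-atomic. `persist()` is again a hypothetical fence-only stand-in for a real persist barrier, so the sketch shows ordering rather than durability.

```c
#include <stdatomic.h>
#include <stddef.h>
#include <stdint.h>

#define PVEC_CAP 1024

/* Hypothetical persistent vector laid out in an NVMM region.
   Elements at index >= len are not part of the vector. */
struct pvec {
    _Atomic uint64_t len;
    int64_t items[PVEC_CAP];
};

/* Hypothetical persist barrier: a fence only, NOT a cache flush. */
static void persist(const void *addr, size_t len) {
    (void)addr; (void)len;
    atomic_thread_fence(memory_order_seq_cst);
}

/* Append x; returns 0 on success, -1 if full. Because the element is
   persisted before len is updated, a crash leaves either the old vector
   or the new one, never one ending in an unwritten slot. */
static int pvec_push(struct pvec *v, int64_t x) {
    uint64_t n = atomic_load(&v->len);
    if (n >= PVEC_CAP) return -1;
    v->items[n] = x;                      /* 1. write the new element */
    persist(&v->items[n], sizeof v->items[n]);
    atomic_store(&v->len, n + 1);         /* 2. single 8-byte store: commit */
    persist(&v->len, sizeof v->len);
    return 0;
}
```

This trick only works for appends; a custom structure with richer updates needs a logging scheme, which is exactly where a normal programmer would struggle.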

Stage 3: Democratizing Persistence

Making NVMM programming possible for typical programmers requires integrating the notion of persistence into the programming language, so the compiler and runtime can work together to make NVMM easy to use.

The shape of these facilities is an active area of research. It’s unclear what the right solutions are and they will likely vary across languages. However, many of the core challenges are clear:

- The programmer must be able to delineate which data is persistent and which is not.

- The programmer must not be able to create pointers between persistent regions or from persistent regions to DRAM.

- The programmer must be able to define how data structures move between consistent states.

- Programs must not leak memory or create dangling pointers.

- The system must be able to repair data structures when media errors occur.

- The system must not sacrifice much of NVMM’s performance.

Techniques developed for transactional memories, databases, garbage collection, managed languages, and type systems will all play a role in meeting these challenges.
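To see the tension between the pointer-safety and performance requirements, consider the crudest enforcement mechanism: a check that a compiler or runtime might insert before every pointer store into persistent memory. The sketch below is hypothetical (the `pregion` descriptor and `checked_store_ptr` are illustrative names, not a real API) and handles a single region; a language with persistence-aware types could reject most of these stores statically instead of paying for the check at run time.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical descriptor for one mapped persistent region. */
struct pregion {
    uintptr_t base;
    size_t size;
};

static bool in_region(const struct pregion *r, const void *p) {
    uintptr_t a = (uintptr_t)p;
    return a >= r->base && a - r->base < r->size;
}

/* Store `target` into pointer slot `slot`, which must lie in region r.
   Any non-NULL target outside r (DRAM or another region) is rejected,
   since it would dangle after a restart. Returns false and leaves the
   slot unchanged on rejection. */
static bool checked_store_ptr(const struct pregion *r, void **slot, void *target) {
    if (!in_region(r, slot))
        return false;   /* caller misuse: slot is not in r */
    if (target != NULL && !in_region(r, target))
        return false;   /* unsafe cross-region or NVMM-to-DRAM pointer */
    *slot = target;
    return true;
}
```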

Modernist Storage

NVMM will make access to persistent data faster and more tightly integrated with other parts of the system than it has ever been. Providing the means for normal programmers to quickly and easily build the persistent data structures their programs need will make programmers more productive, programs easier to understand, and IO-intensive programs faster.

If NVMM fulfills its promise, in a couple of decades persistence support in languages could be as common as garbage collection or strong type systems are today. At that point, accessing persistent data as homogeneous, untyped byte streams will seem as primitive as punch cards seem today.

Steven Swanson is a professor of Computer Science and Engineering at the University of California, San Diego and director of the Non-Volatile Systems Laboratory.

Disclaimer: These posts are written by individual contributors to share their thoughts on the Computer Architecture Today blog for the benefit of the community. Any views or opinions represented in this blog are personal, belong solely to the blog author and do not represent those of ACM SIGARCH or its parent organization, ACM.