Deep neural networks have been a major focus for computer architects in the recent past, due to the massive parallelism available in their computation combined with a massive amount of data reuse. While the proposed architectures have inspired industry innovations such as the Google TPU, Graphcore, and NVIDIA Volta, these solutions require significant power and energy budgets. Warehouse-scale and server-scale computers can afford such budgets, but embedded systems and mobile devices may not. Optical computing has been explored as an alternative for neural network feedforward computation. It offers the same capabilities as conventional electronics (high bandwidth, high interconnectivity, and inherently parallel processing), potentially at the speed of light. Moreover, certain important kernels such as the Fourier Transform can be performed by a photonic chip at virtually no cost, whereas the same operation on conventional hardware is far from trivial (even a fast Fourier transform needs O(N log N) arithmetic operations for N points).

An optimizable and scalable set of optical configurations that preserves these advantages and serves as a framework for building optical CNNs would be of interest to the computer vision, robotics, machine learning, and optics communities.

Optical Computing

Optical computing is the use of photons rather than electrons to perform computation. Photons, which are massless and travel at the speed of light, are generated using light-emitting diodes or lasers. They take the place of electrons in more traditional computers and represent the flow of data. A key component of optical computing is the optical transistor, whose output light intensity depends on its input light. One advantage of optical transistors is that light of different frequencies makes different kinds of information encoding possible; the same property, however, becomes a drawback when the information has to be decoded. Moreover, such optical computing devices are still in their nascent stages, and no commercial purely optical chip exists yet. This has led researchers to adopt a hybrid computation model involving both photons and electrons (opto-electronic), which has achieved reasonable success, especially in embedded computing tasks such as image pre-processing.

Optics for CNN

A natural application for such optical computing is object detection and recognition, given that the raw input for these applications is light. A breakthrough demonstration of optical CNN processing is based on bio-inspired Angle Sensitive Pixels (ASPs). Researchers observed that ASP sensors produce a response similar to that of the first convolutional layer in typical CNNs. ASPs replace both image sensing and the first layer of a conventional CNN by directly performing optical edge filtering, thereby saving sensing energy, data bandwidth, and the FLOPS needed to compute the CNN. Though the reduction in compute and energy demonstrated by this approach is promising, its natural drawback is that ASPs cannot be trained to emulate the responses of other neural network layers. This makes ASP-based methods unsuitable for deep networks: since only the first layer is replaced, the relative benefit diminishes as the network gets deeper.
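To see why the savings shrink with depth, the sketch below counts multiply-accumulate operations (MACs) in a hypothetical VGG-style stack of 3×3 convolutions on a 32×32 RGB input and reports the share of them spent in the first layer as layers are added. The layer shapes are illustrative assumptions, not taken from the ASP work, and a MAC count says nothing about the sensing-energy and bandwidth savings.

```python
def conv_macs(h, w, c_in, c_out, k):
    """Multiply-accumulates of a stride-1, 'same'-padded k x k convolution."""
    return h * w * c_in * c_out * k * k


def first_layer_share(layers):
    """Share of total conv MACs spent in the first layer, for depths 1..len(layers)."""
    macs = [conv_macs(*layer) for layer in layers]
    shares, total = [], 0
    for m in macs:
        total += m
        shares.append(macs[0] / total)
    return shares


# Hypothetical VGG-style stack on a 32x32 RGB input: (H, W, C_in, C_out, k)
layers = [(32, 32, 3, 64, 3), (32, 32, 64, 64, 3),
          (16, 16, 64, 128, 3), (16, 16, 128, 128, 3),
          (8, 8, 128, 256, 3), (8, 8, 256, 256, 3)]

for depth, share in enumerate(first_layer_share(layers), start=1):
    print(f"depth {depth}: first layer = {share:.1%} of conv MACs")
```

Under these assumptions the first layer's share already drops below 5% once a second layer is added, so replacing only that layer optically saves comparatively little in a deep network.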

Opto-Electrical Computing for CNN

Researchers from Stanford have demonstrated a trainable convolutional neural network built around an optical correlator, which compares two light signals by exploiting the Fourier Transform property of optical lenses. In linear optical systems, image formation is often modeled as a spatially invariant convolution of the scene with the point spread function (PSF) of the system. The PSF describes the response of an imaging system to a single point source of light.
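A minimal numerical sketch of this model, assuming an idealized shift-invariant system with a hypothetical Gaussian PSF on a small periodic grid (so the convolution is circular and computed with NumPy's FFT): imaging a single point source returns the PSF itself, shifted to the point's location, which is exactly what it means for the PSF to be the system's response to a point source.

```python
import numpy as np


def form_image(scene, psf):
    """Shift-invariant image formation: circular 2D convolution of scene with PSF."""
    return np.fft.ifft2(np.fft.fft2(scene) * np.fft.fft2(psf)).real


n = 32
y, x = np.meshgrid(np.arange(n), np.arange(n), indexing="ij")
# Hypothetical Gaussian PSF centered at the origin (wrapped around the grid edges)
dy = np.minimum(y, n - y)
dx = np.minimum(x, n - x)
psf = np.exp(-(dx**2 + dy**2) / (2 * 1.5**2))
psf /= psf.sum()  # normalize to unit total intensity

point = np.zeros((n, n))
point[10, 20] = 1.0  # a single point source of light
response = form_image(point, psf)

# The response is the PSF itself, shifted to the point's location
print(np.allclose(response, np.roll(psf, (10, 20), axis=(0, 1))))
```

Because the system is linear and shift-invariant, any scene can be viewed as a sum of such point sources, and its image as the corresponding sum of shifted PSFs.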

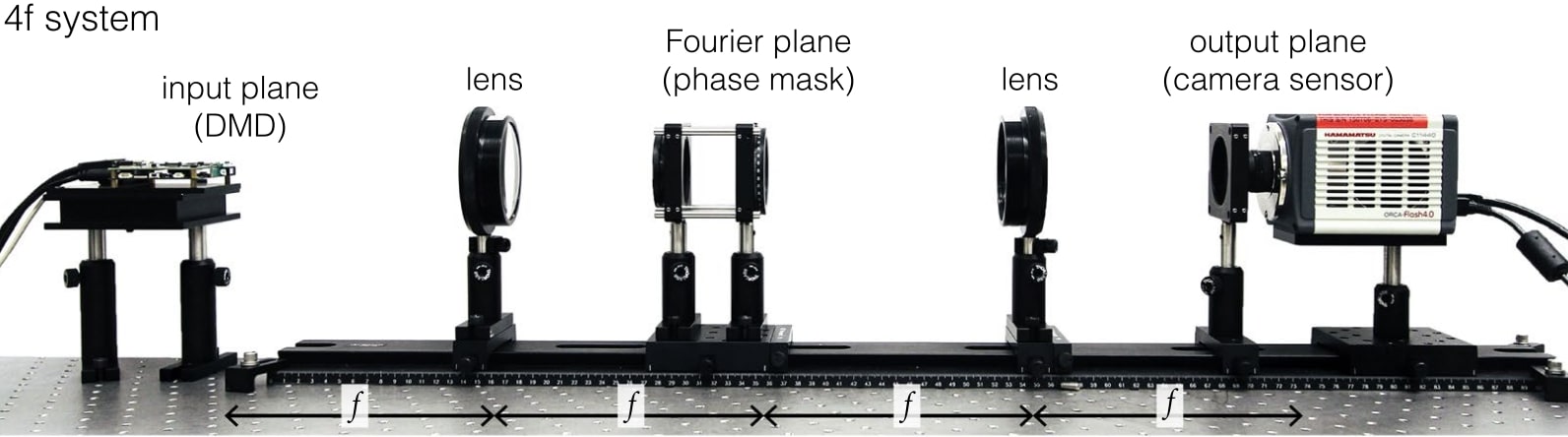

The figure above shows an optical correlator. A lens placed at a distance f (its focal length) from the input produces the Fourier Transform of the input at a distance 2f from the input, in what is referred to as the Fourier plane. A learned phase mask, corresponding to the kernel weights of a single channel, is placed in this Fourier plane, where it interacts with the Fourier-transformed input; the mask thereby determines the point spread function of the system. Multiplication in the Fourier plane translates to convolution in the spatial domain, so the output is the input convolved with the PSF, as given by the equation below, where * denotes convolution.

$$I_{\mathrm{out}}(X, Y) = I_{\mathrm{in}}(X, Y) * \mathrm{PSF}(X, Y)$$
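The equivalence between a product in the Fourier plane and a convolution in the spatial domain (the convolution theorem) can be checked numerically. The sketch below is a digital stand-in for the optics, not a model of any particular setup: it computes the same linear convolution twice, once directly and once as a pointwise product of zero-padded 2D FFTs, with the FFT playing the role the lens plays optically. Random arrays stand in for the input image and the PSF.

```python
import numpy as np


def conv2d_direct(img, kernel):
    """Full linear 2D convolution computed directly in the spatial domain."""
    kh, kw = kernel.shape
    out = np.zeros((img.shape[0] + kh - 1, img.shape[1] + kw - 1))
    for m in range(kh):
        for n in range(kw):
            # Each kernel weight adds a shifted, scaled copy of the image
            out[m:m + img.shape[0], n:n + img.shape[1]] += kernel[m, n] * img
    return out


def conv2d_fourier(img, kernel):
    """Same convolution as a pointwise product in the Fourier plane."""
    # Zero-pad to the full output size so the FFT's circular convolution
    # does not wrap around
    shape = (img.shape[0] + kernel.shape[0] - 1, img.shape[1] + kernel.shape[1] - 1)
    spectrum = np.fft.fft2(img, s=shape) * np.fft.fft2(kernel, s=shape)
    return np.fft.ifft2(spectrum).real


rng = np.random.default_rng(0)
img = rng.random((16, 16))  # stand-in for I_in
psf = rng.random((5, 5))    # stand-in for the PSF
print(np.allclose(conv2d_direct(img, psf), conv2d_fourier(img, psf)))
```

The direct version costs one multiply-accumulate per image pixel per kernel weight, while the Fourier version needs FFTs; in the optical correlator, the lens performs the transform itself.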

The phase mask of such a system can be modulated in amplitude and phase, akin to a bandpass filter in signal processing, which alters the PSF of the system. Treating this modulation as a set of trainable parameters allows the PSF to be learned, similar to how kernel weights are learned with stochastic gradient descent. To support multiple kernel channels, the PSFs corresponding to the individual channels are tiled in a 2D grid in the Fourier plane, so that the input light is correlated with all of the tiled PSFs in parallel.
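The multi-channel arrangement can be illustrated digitally. The sketch below assumes an idealized linear system in which each channel's PSF simply equals that channel's (nonnegative) kernel weights. It places the kernels on a 2D grid inside one large PSF, spaced far enough apart that their outputs do not overlap, so a single convolution with the tiled PSF (one "optical" pass) yields every channel's output side by side. The helper names `conv2d_full` and `tile_psfs` and the spacing rule are hypothetical illustrations, not the Stanford design.

```python
import numpy as np


def conv2d_full(img, psf):
    """Full linear 2D convolution via zero-padded FFTs (a Fourier-plane product)."""
    shape = (img.shape[0] + psf.shape[0] - 1, img.shape[1] + psf.shape[1] - 1)
    return np.fft.ifft2(np.fft.fft2(img, s=shape) * np.fft.fft2(psf, s=shape)).real


def tile_psfs(kernels, grid_cols, img_size):
    """Place each channel's k x k kernel on a 2D grid inside one large PSF.

    Tiles are spaced img_size + k - 1 apart, the size of one full convolution
    output, so the channel outputs land side by side without overlapping.
    """
    k = kernels[0].shape[0]
    spacing = img_size + k - 1
    grid_rows = -(-len(kernels) // grid_cols)  # ceiling division
    big = np.zeros(((grid_rows - 1) * spacing + k, (grid_cols - 1) * spacing + k))
    for c, kern in enumerate(kernels):
        r, col = divmod(c, grid_cols)
        big[r * spacing:r * spacing + k, col * spacing:col * spacing + k] = kern
    return big, spacing


rng = np.random.default_rng(1)
img = rng.random((12, 12))
kernels = [rng.random((3, 3)) for _ in range(4)]  # 4 hypothetical channels, 2x2 grid
big_psf, sp = tile_psfs(kernels, grid_cols=2, img_size=12)
out = conv2d_full(img, big_psf)  # one pass computes all channels at once

for c, kern in enumerate(kernels):
    r, col = divmod(c, 2)
    tile = out[r * sp:(r + 1) * sp, col * sp:(col + 1) * sp]
    assert np.allclose(tile, conv2d_full(img, kern))  # matches a per-channel convolution
print("each tile equals its channel's convolution")
```

The price of this parallelism is output area: the tiled outputs occupy a grid-sized region of the sensor rather than a single image-sized one.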

One drawback of such a system is its inability to represent negative weights, given that light intensity cannot be negative. Experimental results on a two-layer CNN (one optical convolutional layer followed by one electronic fully connected layer) have shown accuracy comparable to that of a network with a digital convolutional layer on CIFAR-10, while requiring only 12% of its FLOPS. Computation in the optical domain has no notion of floating-point operations, and hence consumes 0 FLOPS and virtually no power, while the correlation in the Fourier plane happens at the speed of light. All FLOPS consumed by the opto-electronic setup therefore come from the computation in the electronic domain.
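Why negative weights are out of reach can be seen from a simplified model of the correlator, which is an assumption here rather than the published setup: the coherent impulse response is taken to be the inverse Fourier transform of the complex Fourier-plane mask, and the sensor records its squared magnitude. Whatever amplitude and phase the mask applies, the resulting intensity PSF is nonnegative everywhere, whereas common kernels such as a Sobel edge detector require negative weights.

```python
import numpy as np


def intensity_psf(amplitude, phase):
    """Idealized intensity PSF for a given Fourier-plane mask (simplified model).

    Assumption: the coherent impulse response is the inverse FFT of the complex
    mask, and the detector measures its squared magnitude (an intensity).
    """
    mask = amplitude * np.exp(1j * phase)
    impulse_response = np.fft.ifft2(mask)
    return np.abs(impulse_response) ** 2


rng = np.random.default_rng(0)
amp = rng.random((32, 32))                   # arbitrary amplitude modulation
phase = rng.uniform(0, 2 * np.pi, (32, 32))  # arbitrary phase modulation
psf = intensity_psf(amp, phase)
print(psf.min() >= 0)  # a squared magnitude can never be negative

# An edge-detecting kernel needs negative weights, which no intensity PSF provides
sobel_x = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]])
print((sobel_x < 0).any())
```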

Diffractive Deep Neural Network

The approach above shows how to create a trainable optical convolutional layer, but it does not demonstrate a large-scale CNN with multiple such optical layers connected to each other, since re-aligning the output of one optical layer into the input of the next was left as future work. Researchers at UCLA meanwhile developed a 3D-printed, all-optical deep learning architecture called the Diffractive Deep Neural Network (D2NN). They used a 3D printer to create thin, 8 cm² polymer wafers with patterns based on pre-trained weights. Such polymer wafers are not reconfigurable, but their low fabrication cost (the researchers claimed the D2NN they created could be reproduced for less than $50) means that replacing layers or adding new ones is inexpensive.
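A diffractive network's forward pass can be sketched as alternating free-space propagation and thin phase masks, with detectors measuring intensity at the end. The sketch below assumes coherent illumination, phase-only layers, and the angular spectrum method as the diffraction model, which is a standard textbook choice and not necessarily the model used by the UCLA researchers. The layer count, wavelength, pixel pitch, and layer spacing are hypothetical values chosen for illustration, the function names are made up, and random phases stand in for trained weights.

```python
import numpy as np


def angular_spectrum_propagate(field, distance, wavelength, pixel_pitch):
    """Propagate a square complex field through free space (angular spectrum method)."""
    n = field.shape[0]
    fx = np.fft.fftfreq(n, d=pixel_pitch)  # spatial frequencies, cycles per meter
    FX, FY = np.meshgrid(fx, fx, indexing="ij")
    arg = 1.0 / wavelength**2 - FX**2 - FY**2
    kz = 2 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    # Propagating components pick up a phase; evanescent components are dropped
    transfer = np.where(arg > 0, np.exp(1j * kz * distance), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)


def d2nn_forward(input_amplitude, phase_layers, distance, wavelength, pixel_pitch):
    """Hypothetical D2NN forward pass: propagate, apply each phase mask, detect."""
    field = input_amplitude.astype(complex)
    for phase in phase_layers:
        field = angular_spectrum_propagate(field, distance, wavelength, pixel_pitch)
        field = field * np.exp(1j * phase)  # thin, phase-only diffractive layer
    field = angular_spectrum_propagate(field, distance, wavelength, pixel_pitch)
    return np.abs(field) ** 2  # detectors measure intensity


rng = np.random.default_rng(0)
n = 64
aperture = np.zeros((n, n))
aperture[24:40, 24:40] = 1.0  # hypothetical square input object
layers = [rng.uniform(0, 2 * np.pi, (n, n)) for _ in range(3)]  # untrained phases
out = d2nn_forward(aperture, layers, distance=0.03, wavelength=0.75e-3, pixel_pitch=0.4e-3)
print(out.shape, out.sum() <= (aperture**2).sum() + 1e-6)
```

Because the masks only change phase and evanescent components are discarded, the total detected intensity can never exceed the input energy; all of the "computation" is done by where the diffracted light ends up.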

A D2NN is trained in the digital domain, just like other deep neural networks. Once fabricated, however, it performs its computation purely in the photonic domain, consuming essentially no power, which makes it promising for real-time embedded imaging and vision applications such as computational cameras, with no re-training required. Because the wafers are cheap to reproduce, even transfer learning becomes possible.

Implications for Computer Architects

At the present stage of development in optical computing, opto-electronic converters are expensive in terms of both operation and area, so for embedded applications the computation must stay in the purely optical domain. However, these opto-electronic and purely optical approaches are not nanoscale fabricated photonic chips, but rather centimeter-scale setups, such as 3D-printed wafers, that must be kept rigid. Even though computing the Fourier Transform, and hence convolution, at essentially zero cost is compelling, breakthroughs are still needed to perform such optical operations with low area overhead.

About the Authors: Ananth Krishna Prasad is a Ph.D. student in the School of Computing at the University of Utah, advised by Prof. Mahdi Bojnordi. His research focuses on novel memory systems and performance acceleration for machine learning applications. Mahdi Nazm Bojnordi is an assistant professor in the School of Computing at the University of Utah, Salt Lake City, UT.

Disclaimer: These posts are written by individual contributors to share their thoughts on the Computer Architecture Today blog for the benefit of the community. Any views or opinions represented in this blog are personal, belong solely to the blog author and do not represent those of ACM SIGARCH or its parent organization, ACM.