When it comes to hardware support to mitigate software security issues, there is a significant gap between what is available in products today and known solutions. This article examines the history of architectural support, summarizes research philosophies, and delves into possible reasons for relatively little support for software security in current systems.

A History of Architecture Support for Security

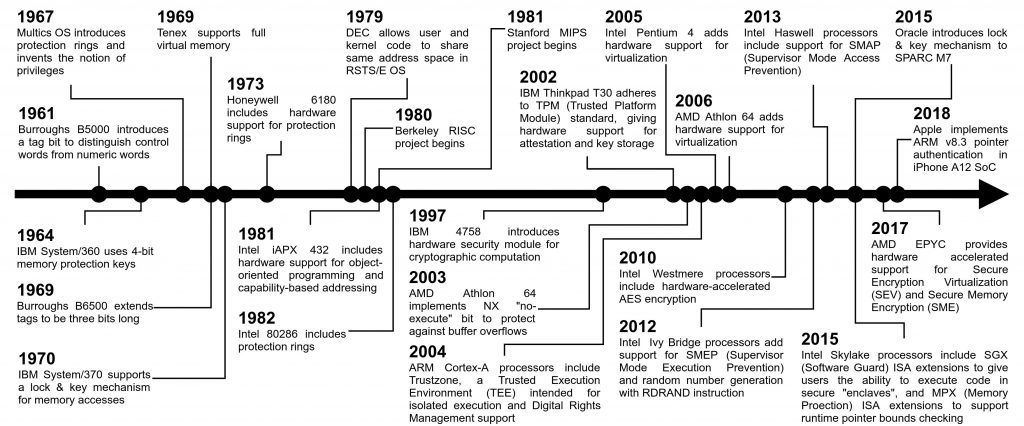

Timeline of ISA support for Security in Commodity Processors.

The figure above provides a timeline of architectural support for practical defenses, as found in commercial products. Most existing security features on the timeline fall into one of four categories:

• Virtualization—Giving code the illusion that it is running in an environment other than the one it is actually executing in. This can take the form of hardware support for virtual memory (which isolates processes from each other) or hardware support for virtual machines (which isolates entire operating systems from each other).

• Attestation—Providing systems the means to attest or verify the integrity of their components. This typically begins with a root of trust: a small, trusted set of hardware that can 1) verify its own integrity, and 2) use this to extend and verify the integrity of other system components.

• Acceleration—Adding hardware support to reduce the runtime overheads of security features. Typical examples include adding dedicated hardware accelerators to speed up encryption and decryption computations.

• Tagging—Memory locations are “tagged” with metadata, which can signal things like data types or permission levels.
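The attestation category above describes a root of trust that extends integrity checks to other components. A minimal sketch of that idea follows, assuming a hash-chained measurement register loosely in the style of a TPM "extend" operation. The function names, the boot-chain contents, and the choice of SHA-256 are illustrative assumptions, not any vendor's actual interface.

```python
import hashlib

def extend(register: bytes, component: bytes) -> bytes:
    """Fold one component's hash into the running measurement register."""
    component_digest = hashlib.sha256(component).digest()
    return hashlib.sha256(register + component_digest).digest()

def measure_chain(components) -> bytes:
    """Measure components in load order, starting from an all-zero register."""
    register = bytes(32)  # hypothetical initial value of the register
    for component in components:
        register = extend(register, component)
    return register

# Hypothetical boot chain; real systems would measure binary images.
BOOT_CHAIN = [b"bootloader v1", b"kernel v1", b"init v1"]

# A verifier holding the expected value can detect any modified component.
expected = measure_chain(BOOT_CHAIN)
tampered = measure_chain([b"bootloader v1", b"kernel v1 (modified)", b"init v1"])
reordered = measure_chain([b"kernel v1", b"bootloader v1", b"init v1"])
```

Because each step hashes the previous register value, the final measurement depends on both the content and the order of everything loaded, so a verifier only needs to compare one value.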

Approaches Towards Security

While industry has adopted a few ways to improve security, the computer architecture research community has proposed and developed a much broader set of paradigms for achieving it.

• Formal methods—Construct logical proofs to verify or disprove certain properties about a system. For example, a formal verification of a boolean circuit may prove that the circuit never performs an undesirable action for any input that can be supplied by the adversary. This approach can be powerful, but is often limited by 1) assumptions about the system’s environment, 2) the enormous amount of computational power needed to formally verify complex systems, and 3) difficulty in specifying undesirable actions. Also known as a “correct by construction” approach to security.

• Cryptography—Encrypt sensitive information using cryptosystems. Cryptosystems are designed to make it virtually impossible for any adversary to decipher the encrypted information (also known as ciphertext) without possessing the secret keys. Cryptography forms an essential part of our current best-known practices for protecting confidentiality and integrity. The challenges in using cryptographic techniques to secure a system relate to secure key management and, in many cases, efficient execution.

• Isolation—Keep trusted and untrusted components separate from each other, and carefully monitor any interaction between the two. By isolating, and therefore reducing, the scope and size of trusted components, it becomes easier for systems to verify and extend integrity guarantees about potentially untrusted components. Ideal isolation systems aim to enforce a property known as “non-interference” which loosely speaking means that each program should complete execution without being impacted by any factor that can be controlled by an attacker.

• Meta-data/Information-Flow Approaches—Everything in a system is an object with two types of entities: data and metadata. As computations are applied to data, shadow computations occur on the corresponding metadata, which detect and report illegitimate data modifications. Some of these systems are also capable of detecting illegal modifications to metadata (to an extent). The exact nature of the shadow computations and metadata is determined by specifics of security policies. Policy violations detected by metadata computations should be handled by a higher privileged process. The data in the above definition could also include instructions.

• Moving Target Approaches—The observation that begets this approach is the belief that almost all attacks (will) have defenses, and all defenses (will) have attacks. In other words, in a world where attackers and defenders are constantly trying to better each other, moving target approaches minimize the advantage to the attackers by changing how the system/defenses work over time. The key problems to address are deployment/maintenance considerations with constantly changing systems, and the tradeoffs between rate of change, performance and security.

• Diversification—If each system were distinct, it would force the attacker to prepare bespoke exploits, increasing their work and reducing their profits. An extreme example is one where each computer has a different (secret or non-secret) ISA. Since the ISAs are different, the same exploitation technique and payload cannot be used blindly, thus stemming mass takeovers. The challenges here are deployment/maintenance considerations because of differences between systems.

• Anomaly detection—This paradigm is born of the philosophy that systems will always be insecure, irrespective of the presence of other security mechanisms. The goal is to monitor systems for abnormal or unusual behavior, which may indicate an adversarial attack on a system’s security. This can be either signature-based (misuse) detection, which checks whether system behavior matches some model of malicious behavior, or anomaly-based detection, which checks whether system behavior deviates from some model of benign behavior.
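The metadata/information-flow approach in the list above can be illustrated with a small software sketch. It assumes a one-bit "tainted" tag per word and a single policy (untrusted data must not be used as a jump target); the class and function names are hypothetical, and real hardware proposals implement the shadow computation in the pipeline rather than in software.

```python
from dataclasses import dataclass

class PolicyViolation(Exception):
    """Raised so that a more privileged handler can deal with the violation."""

@dataclass(frozen=True)
class Word:
    value: int             # the data
    tainted: bool = False  # the metadata: True if derived from untrusted input

def add(a: Word, b: Word) -> Word:
    # Data computation plus shadow computation on the metadata:
    # the result is tainted if either operand is tainted.
    return Word(a.value + b.value, a.tainted or b.tainted)

def jump(target: Word) -> int:
    # Assumed policy: control flow must never depend on tainted data.
    if target.tainted:
        raise PolicyViolation(f"tainted jump target {target.value:#x}")
    return target.value

base = Word(0x1000)
trusted_offset = Word(0x20)
untrusted_offset = Word(0x40, tainted=True)  # e.g. read from the network

safe_target = jump(add(base, trusted_offset))  # allowed by the policy
try:
    jump(add(base, untrusted_offset))
    violation_caught = False
except PolicyViolation:
    violation_caught = True  # stand-in for a higher-privileged handler
```

The policy lives entirely in `add` and `jump`; changing what the tag means or how it propagates changes the security policy without touching the data computation.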

In practice, none of these approaches is sufficient by itself; they must be combined to achieve reasonable security guarantees. This is troublesome, since commercial support for security covers only a few of the approaches outlined above.

Looking Back and Forward

After a period of very active interest and support for security during the 70s and early 80s, there appears to have been a hiatus in the late 80s and 90s coinciding with the era of frequency wars and RISC architectures. What could explain this lack of security support in commercial products?

Perhaps the mindset among commercial vendors was that a rising tide (performance) lifts all boats. However, higher performance does not necessarily fix the problem. Our paradigm is that security is a fundamental requirement for correct execution (and not the other way around) because insecure execution produces wrong or unexpected results. And generally speaking, no amount of performance gains can compensate for incorrect execution. So while it is true that a rising tide does lift all boats, it does not lift the ones that are doomed to sink!

Another explanation for the lack of support in commercial designs is that security, especially security that benefits the masses, was not critical in the 80s because personal computing and the Internet were still in their infancy, and mass attacks were few and far between. However, ISAs have a long life. So we now live life (dangerously) in the 21st century with ISA concepts that were designed for life in the 20th century! Further, the lack of focus on security in the 80s and 90s has created bubbles in the human-talent pipeline for architectural security, the effects of which continue to be felt.

It can also be argued that the processor industry has been out of touch with reality in terms of security requirements. While security improvements have been made to legacy ISA systems in the post-frequency-wars era, this support has been limited to features that mostly serve corporate interests (e.g., Digital Rights Management for games or multimedia, virtualization on the cloud), with very little that directly helps the masses (e.g., hardware support for malware detection/prevention). This could be because future processor design requirements are shaped much more heavily by large corporate buyers than by everyday people.

New open-source ISAs offer a potential way out of our current dilemma. However, practically speaking, legacy ISAs are not going away any time soon, and the security of a system that contains processors with both old and new ISAs reduces to the security of the weaker ISA. So perhaps we need better ways to understand how to retrofit security paradigms into existing ISAs while we redesign systems for the future.

About the Authors: Adam Hastings is a second-year PhD student at Columbia University. Simha Sethumadhavan is an Associate Professor at Columbia University. His website is http://www.cs.columbia.edu/~simha, and his Twitter is https://www.twitter.com/thesimha.

Portions of this blog post include original research and have been adapted from class notes on a grad class on architecture (Source) at Columbia University. To reference this article, please cite the class notes linked above. Figure updated on 10/29/2019.

Disclaimer: These posts are written by individual contributors to share their thoughts on the Computer Architecture Today blog for the benefit of the community. Any views or opinions represented in this blog are personal, belong solely to the blog author and do not represent those of ACM SIGARCH or its parent organization, ACM.