Colonial Williamsburg is a “living museum.” Its pseudo-historical buildings mostly date to the 1930s, when history buffs imagined what the early settlement might have looked like and set their guesses into literal stone. The revivalists demolished hundreds of not-quite-old-enough buildings to make way for their colonial approximations. For visitors in 2018, the gestalt lies in an uncanny valley between 1700s authenticity and Depression-era artifice. The town’s in-character hosts convey 21st-century echoes of 20th-century hagiography of 18th-century people.

History was similarly inescapable at ASPLOS 2018. Even talks on hot topics like quantum computing and large-scale machine learning rely on the context of decades-old computing trends. Every 2018 topic, from rampant specialization to dreams of a post-silicon future, seems to point backward to the 20th-century heyday of Moore’s law—and to implicitly acknowledge modern anxiety over its diminishing returns.

AI Fatigue and the Keynotes

In a particularly 2018 theme, the ASPLOS crowd exuded a mixture of enthusiasm and disdain for research on artificial intelligence and machine learning. Emery Berger called the effect AI fatigue in a hallway conversation. Deep learning has generated a hype avalanche that is both justified and exhausting: while it’s impossible to deny the way ML is upending all fields of computing, it can also feel like a bandwagon.

Hillery Hunter’s first-morning keynote bore a decidedly ASPLOSy title, AI Productivity: Better Hardware Doesn’t Work without Better Software. She acknowledged the audience’s AI fatigue: folks may think of AI and ML as marketing buzzwords, but they also contain juicy performance problems for systems researchers. The work she described emphasized evergreen research ideas like supercomputer interconnects and balanced systems.

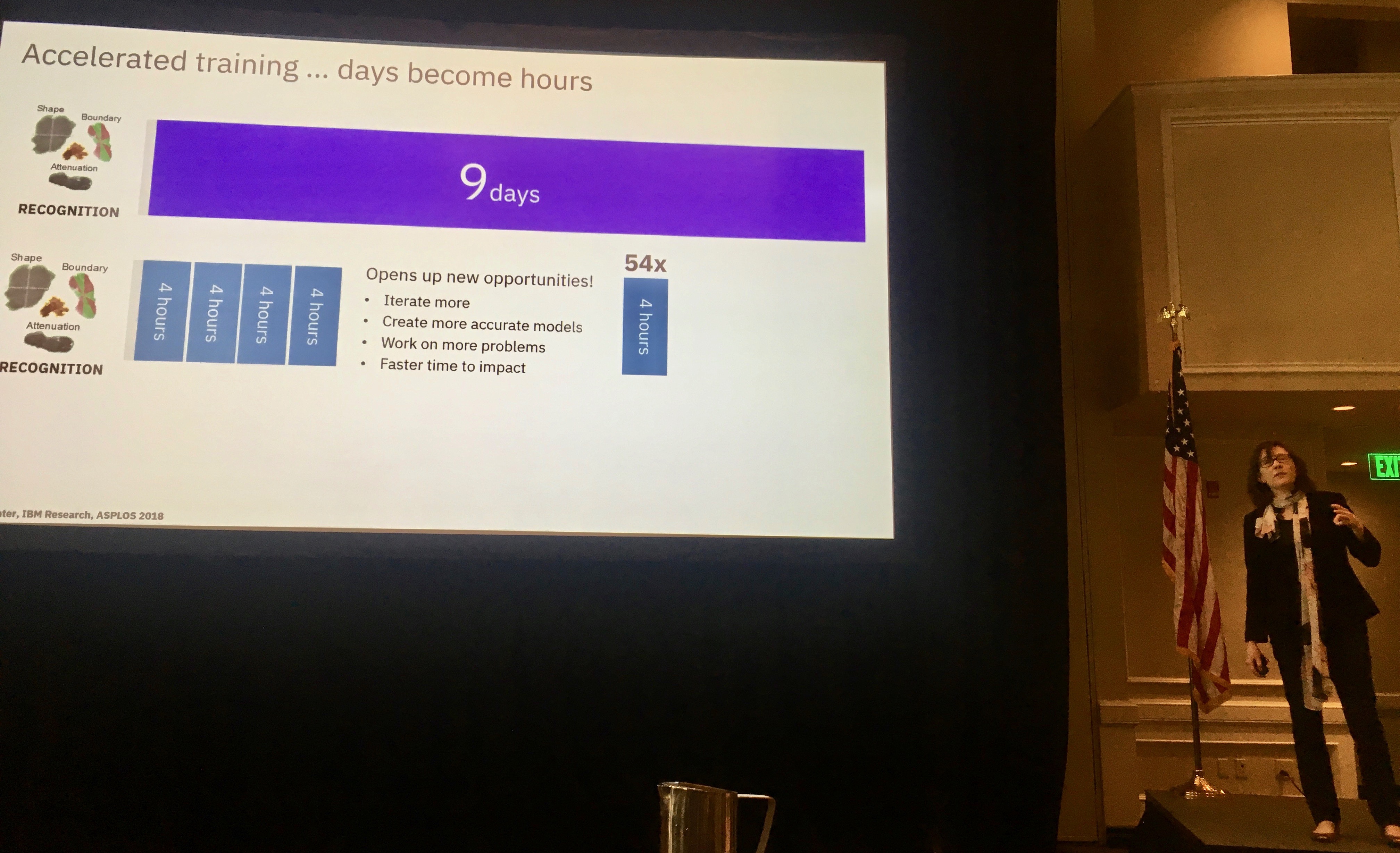

Hunter showed off a strategy for assembling hundreds of GPUs for ML training by carefully allocating inter-GPU bandwidth. She highlighted the qualitative consequences of reducing one model’s training time from 9 days to 4 hours: data scientists had a shot at iteratively improving their predictions, for example, without unmanageable work interruptions. These results emphasize a legitimate reason to board the ML research bandwagon: it’s a domain where performance is a real barrier to getting work done. In an era where marginal efficiency gains can seem abstract or inconsequential, training enormous ML models looks like an attractive target.

Hunter acknowledged a second facet of AI fatigue when she showed results for a logistic regression workload. She reminded us that deep neural networks are not the only kind of machine learning model, a fact the world occasionally seems to forget. Even simple techniques can be effective, given the right data, and they can still exhibit efficiency bottlenecks suitable for ASPLOS-style research. Hunter’s computation fabric running on a many-GPU cluster again yielded a qualitative leap in training time, from 70 minutes to 92 seconds. While articles in the popular press continue to imply otherwise, the ASPLOS audience needs to remember that deep neural networks do not equal machine learning.
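For a rough sense of scale, the two training-time reductions quoted above can be turned into speedup factors. This is only a back-of-the-envelope sketch: the durations are the ones reported from the keynote, while the helper name `speedup` and the variable names are illustrative assumptions, not anything from the talk.

```python
from datetime import timedelta


def speedup(before: timedelta, after: timedelta) -> float:
    """Return how many times shorter `after` is than `before`."""
    return before.total_seconds() / after.total_seconds()


# Durations as quoted in the text; everything else here is illustrative.
deep_model = speedup(timedelta(days=9), timedelta(hours=4))
logistic_regression = speedup(timedelta(minutes=70), timedelta(seconds=92))

print(f"deep model: {deep_model:.0f}x")
print(f"logistic regression: {logistic_regression:.1f}x")
```

Both reductions land at roughly the same order of magnitude (about 54x and about 46x), which is why each one reads as a qualitative change rather than an incremental gain.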

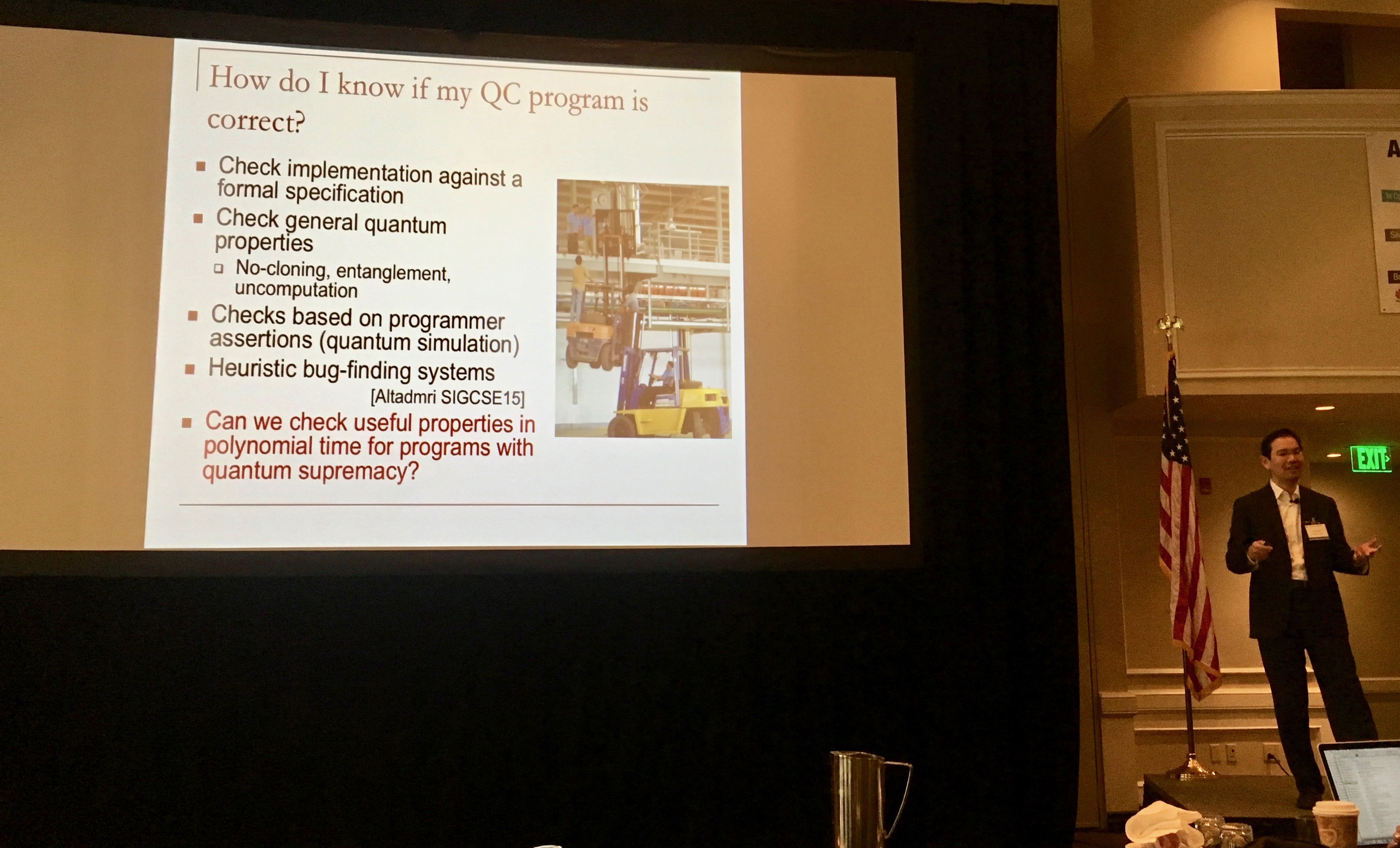

Fred Chong’s keynote was on a topic that should seem unrelated: near-term quantum computing. Chong sold the audience on the need for cross-stack, ASPLOS-style thinking in the upcoming phase of noisy intermediate-scale quantum (NISQ) technology. As IBM, Google, Microsoft, and Intel race to build machines with the 50+ qubits that entail quantum supremacy, analogies to classic AS/PL/OS research problems are sprouting. We need architecture and OS research, for example, to build efficient interoperation between the classical and quantum domains. There is a galaxy of programmability problems embedded in translating algorithms research into real implementations. PL researchers should be particularly concerned with correctness: as Chong put it, “we have programs that are too big to simulate, and we don’t have machines to run them on. So how do we know they’re correct?”

Even the quantum world can’t escape the ML hype/fatigue supernova. After his talk, Chong told me that a primary “marketing” challenge in quantum is patiently explaining that, despite their enormous potential, NISQ’s killer apps do not likely lie in machine learning. Chong describes the ideal problem category for quantum computation as having a short description: a small number of input bits that induce a complex solution space. ML training, of course, is the opposite: it must ingest huge volumes of data. This tension does not stop colleagues (and funding agencies) from fantasizing about exponential speedups on 2018’s hippest workload.

At this point in my hallway-track conversation with Chong, another attendee walked up. As if by fate, the newcomer asked whether quantum machines could offer asymptotic gains on CNN training.

Still WACI After All These Years

ASPLOSers witnessed the twentieth installment of Wild and Crazy Ideas (WACI), the emblematically ASPLOSish tradition that mixes impossible visions, blue-sky solutioneering, and jokes. This year’s WACI chiefs, Michael Carbin and Luis Ceze, established the first-ever WACI test-of-time award. Following their lead, I hereby establish my own award series for this year’s WACI talks:

- Timeliest: Miguel Arroyo used ideas from digital logic and architecture to bring order to a hypothetical highway system dominated by self-driving cars.

- Niftiest: Max Willsey developed a system stack for microfluidic chips that can automate biology experiments.

- Ghastliest: Yuan Zeng introduced the “living neuron machine,” which trained a literal, moist, biological neural network to recognize MNIST with mediocre accuracy.

- Bleakest: Christian DeLozier capitalized on the wild popularity of microtransactions in video games and imported them into a new programming language, C$$. Variables are free, but if you like functions, you’d better hope for them in your next lootbox.

- Trashiest: Brandon Lucia urged everyone to donate their old, busted phones to assemble massive compute clusters—actual clouds—attached to solar drones that gracefully drift through the atmosphere to harvest free solar energy.

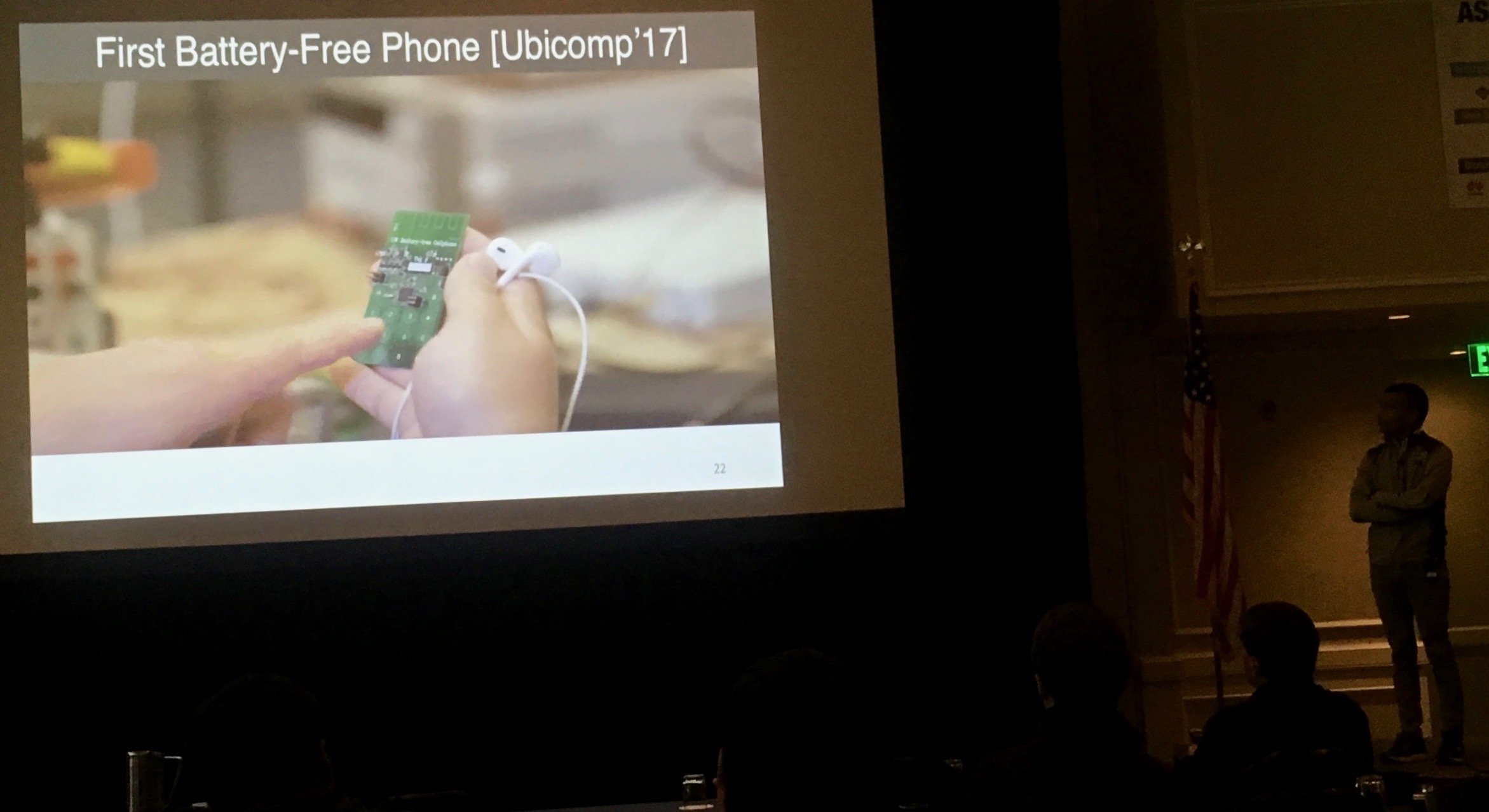

The organizers snagged a doozy for the WACInote: Shyam Gollakota is a “wireless wizard,” in Ceze’s words. Gollakota demonstrated a dizzying cavalcade of battery-free wonders from his lab. He showed off small devices powered by ambient RF, backscatter Wi-Fi that lets batteryless nodes join real wireless networks, a dialable phone that transmits voice over backscatter, 3D-printed passive plastic things that use gears and springs to speak Wi-Fi, and a high-res video feed from a battery-free camera. Audible gasps abounded.

Carbin and Ceze established a test-of-time award to recognize WACI’s penchant for presaging real research. The inaugural award recognized a vintage-2006 talk on compostable computers. Dirk Grunwald, along with Fred Chong, proposed to combat computational waste by building machines from materials that biodegrade. The award honored Grunwald, Chong, and current torch-bearer David Wentzlaff, whose group has transmuted this wild idea into proper, top-tier research. Following a WACI talk in 2015, Wentzlaff and collaborators published Architectural Trade-Offs for Biodegradable Computing in MICRO last year. They’ve prototyped real compostable circuits.

The entire WACI session reminded everyone to stay focused on big problems. Ambitious ideas are valuable even when they seem implausible: technological progress marches inexorably on, and creative work gradually surmounts the insurmountable. As my student, Mark Buckler, reflected after the session, we all need a reminder that we should be trying to change the world.

Winners, Old and New

ASPLOS chooses a Most Influential Paper each year from at least ten conferences ago. This year, the SC chose OceanStore, the ASPLOS 2000 paper by John “Kubi” Kubiatowicz and eleven others that envisioned secure, reliable, available storage in globally distributed datacenters. Looking back at the paper, it’s impossible to miss the similarity to what we now call “the cloud.” Kubi recalled David Wood asking at the time, “Why would I ever want to put my data out there,” in an intangible sea made of someone else’s storage infrastructure? It was and remains a good question, but I’m willing to bet that even Wood stores some of his data “out there” today.

The authors of last year’s MIP couldn’t make it to Xi’an, so the organizers also presented a second award. Tim Sherwood and co-authors won for their ASPLOS 2002 paper on basic block vectors. Sherwood recalled the research environment at the time: many groups simultaneously realized the importance of phases in application behavior. But instead of creating destructive competition, the mutual interest engendered a watershed of interconnected research.

The Best Paper Award committee chose two winners: Darwin, an accelerator for genome sequence alignment from Stanford and Nvidia, and Capybara, a reconfigurable energy system for energy-harvesting devices from CMU. Both reflect the ASPLOS spirit: Darwin intertwines algorithmic advances with hardware support, and Capybara busts a traditional abstraction to expose a new kind of programming.

Til Next Time

ASPLOS 2019 is gearing up. The program chairs, Alvin Lebeck and Emmett Witchel, are assembling the PC while the organizers make plans in Providence, Rhode Island. Meanwhile, James Larus announced that he will general-chair ASPLOS 2020 in Lausanne, Switzerland, with Karin Strauss and Luis Ceze as program chairs. The business meeting was uncontentious: major topics included solidifying the SC’s mechanism for tracking PC members’ reputations across years (uncontested) and this year’s reviewing process, which split the PC homogeneously in half for higher discussion bandwidth (opinion was divided).

See you in Providence.

About the Author: Adrian Sampson is an assistant professor in the computer science department at Cornell.

Disclaimer: These posts are written by individual contributors to share their thoughts on the Computer Architecture Today blog for the benefit of the community. Any views or opinions represented in this blog are personal, belong solely to the blog author and do not represent those of ACM SIGARCH or its parent organization, ACM.