There is a battle ongoing in the realm of secure caches. Cache side-channels are a serious security problem as they allow an attacker to monitor a victim program’s execution and leak sensitive data like encryption keys, confidential IP, etc. A potent class of such attacks is the Prime+Probe attack, where a spy uses its addresses that conflict on cache sets shared with a victim’s addresses to learn the victim’s data access pattern. Randomized caches have emerged as a promising defense against such attacks as they randomize the locations of set conflicts and make it harder for the attacker to learn a victim’s access pattern. This area has recently seen rapid advances in both attacks and defenses, with more than 10 papers published in top security and computer architecture conferences in the last 3 years. As defenses have become sophisticated, attacks have become more creative, which in turn has improved the understanding of the problem and encouraged more principled defenses. This article summarizes the prolific research on attacks and defenses fueling this arms race in randomized caches.

Recent works largely focus on randomizing last-level caches (LLC) because they are commonly shared between multiple physical cores (belonging to different users and security domains in the cloud) and such sharing of LLC is critical for performance; hence, this article also focuses on randomized LLCs.

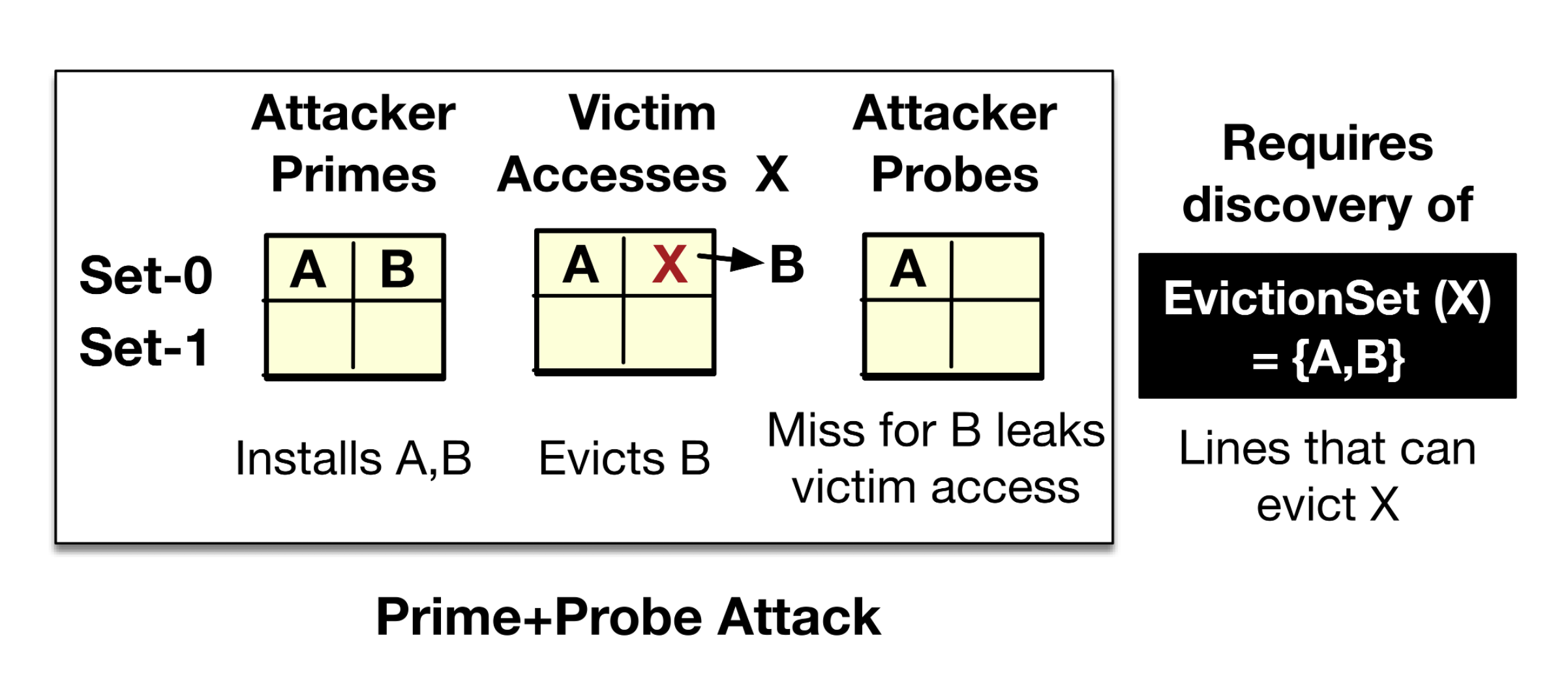

The Prime+Probe attack shown above works as follows: the attacker primes a cache set with its addresses, then allows the victim to execute and evict an attacker’s address due to a set conflict. Later, the attacker probes the addresses to check if there is a miss, to infer that the victim accessed that set. In these attacks, the first step is to discover a set of addresses – called an eviction-set – which map to the same cache set as the victim address and evict it from the cache. In 2015, Liu et al. (SP’15) first showed that Prime+Probe attacks are practical on LLCs by discovering eviction-sets on an LLC with n cache lines in O(n2) accesses (for typical LLCs, an eviction-set is discoverable in less than a second).

A Brief History of Randomized Cache Defenses and Attacks

Randomized caches were originally pioneered by RPCache (ISCA’07) and NewCache (MICRO’08). These provided cache randomization using remapping tables and were geared towards small L1 Caches. It was not until 2018 that randomization was first explored for LLCs, triggering multiple waves of randomized cache defenses and new attacks.

First Wave:

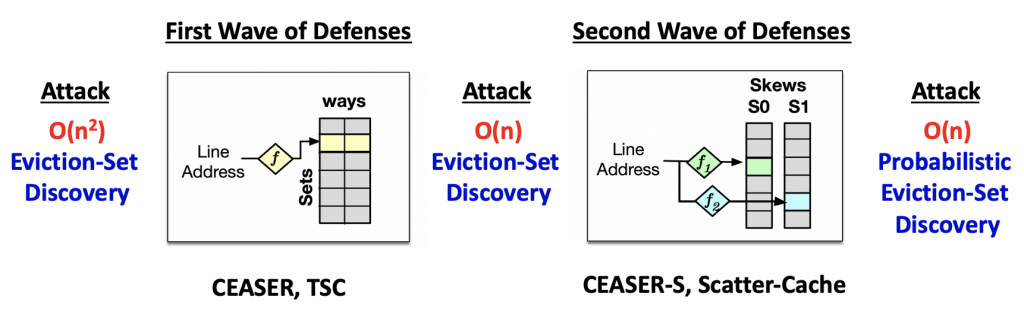

Defense: In 2018, CEASER (MICRO’18) first proposed randomized LLCs, using a cipher to encrypt cache line addresses and randomize the mapping of addresses to cache-sets. Before the adversary discovers an eviction-set, CEASER dynamically changes the mapping by changing the encryption key. In parallel, TSC (DAC’18) similarly used hash functions to randomize the mapping of addresses to sets to make attacks harder.

Attack: As CEASER changed the address mapping before eviction-set discovery in O(n2) accesses, this prompted new interest in attacks capable of faster eviction-set discovery. Qureshi (ISCA’19) and Vila et al. (SP’19) soon discovered new attack algorithms where eviction-sets could be discovered in just O(n) memory accesses (in milliseconds). Song and Liu (RAID’19) further increased the attack speed by a constant factor, using multi-threaded execution. All such attacks were able to discover eviction-sets before CEASER re-randomized the cache. To defend against these attacks, CEASER would require increasing its re-randomization rate by 30x causing considerable slowdown, thus prompting the need for more sophisticated defenses.

Takeaway-1: A randomized mapping of addresses to cache sets alone is not robust to fast O(n) eviction-set discovery. Subsequent defenses used more sophisticated techniques to introduce randomization of set conflicts.

Second Wave:

Defense: As attacks got better, a wave of new defenses appeared, rising to the challenge. An intuitive way to defend against faster attacks is to increase the search space for attacks, by increasing the number of random locations an address can map to. CEASER-S (ISCA’19) and Scatter-Cache (SEC’19) used skewed associative designs to probabilistically select the way (or group of ways called a “skew”) where an address is installed and used different cryptographic functions in each skew to ensure addresses map to different random sets in each skew. Similarly, PhantomCache (NDSS’20) randomly selected the set an address is installed in from a group of sets. These defenses increase the search space for the attacks, making it hard for attack algorithms to discover the exact eviction set for a victim address.

Attack: However, with probabilistic defenses, there emerged new probabilistic attacks. Purnal et al. (SP’21) and Song et al. (SP’21) discovered new attacks showing probabilistic set-conflicts were still possible on these defenses, and probabilistic eviction-sets could still be constructed in O(n) accesses. By accessing a sufficient number of attacker addresses from such sets, the victim may still get evicted with high probability. Song et al. proposed increasing the re-randomization rate to defend against these attacks, but CASA (MICRO’20) recently showed that gradual re-randomization itself could accumulatively leak information over multiple epochs. So, dynamic re-randomization itself may not be a future-proof solution.

Takeaway-2: Defenses that incrementally increase attacker effort are likely to get broken by newer attacks. Ideally, defenses should aim to target the root cause of attacks.

Takeaway-3: Re-randomization is a useful defense-in-depth strategy, but a randomized cache that does not require frequent re-randomization would provide a more robust defense.

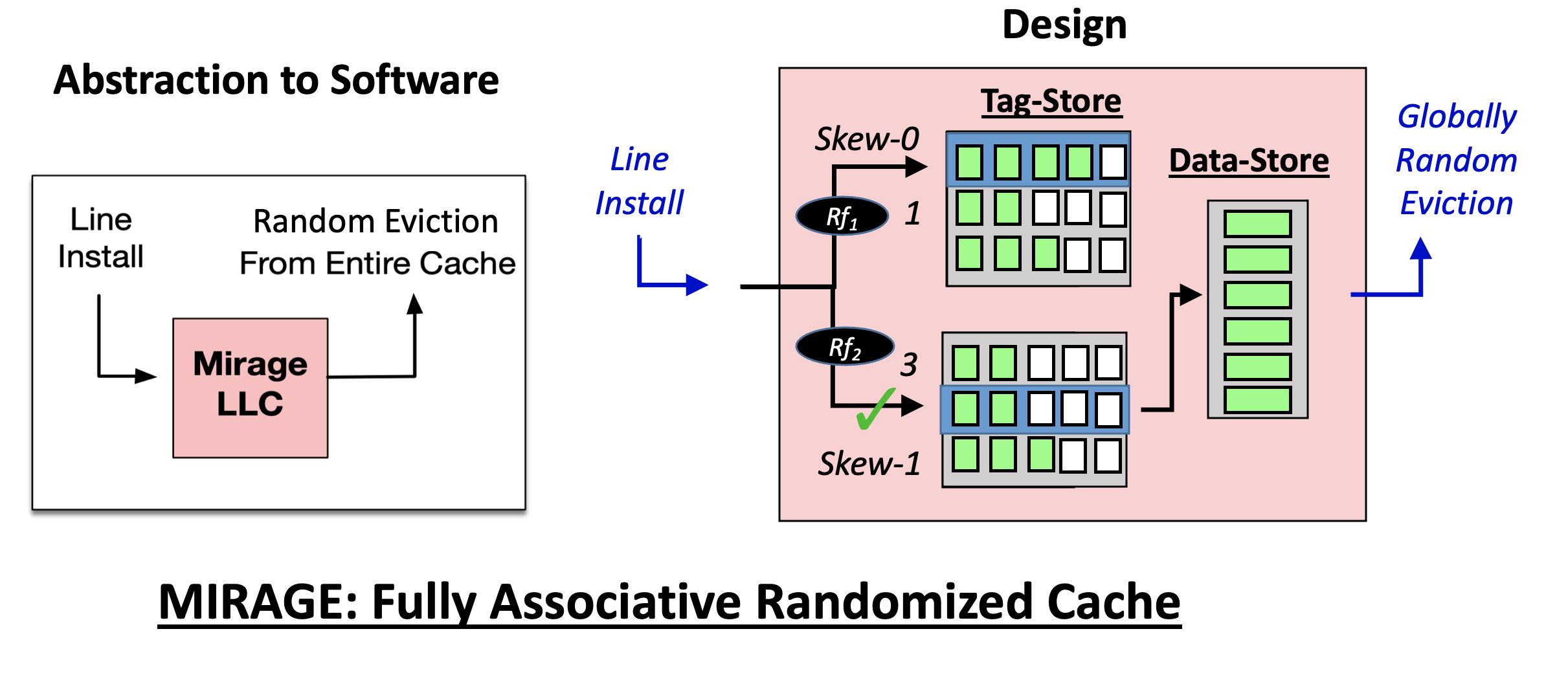

Third Wave:

Defense: To defeat all conflict-based attacks (old and new), Saileshwar and Qureshi recently presented MIRAGE (SEC’21) to eliminate set-conflicts, the root cause of these attacks. MIRAGE provides the abstraction of a fully associative randomized LLC with random-replacement, where evicted lines are independent of installed lines and thus leak no information. It achieves this by provisioning invalid tags in the LLC and using load-balancing techniques inspired by Power of 2 Random Choices to ensure the availability of invalid tags. This allows new lines to be installed in invalid tags without set conflicts and evicted lines to be selected randomly in a fully associative manner leaking no information. MIRAGE thus ensures set-conflicts are unlikely in the lifetime of the universe and eliminates conflict-based attacks.

Attack: So, is this the end of the line for attacks on randomized caches? If history serves right, maybe not. While we wait for new attacks to possibly emerge, there are already several attacks that are not covered by current randomized caches. For instance, current randomized caches mitigate attacks like Flush+Reload, but newer attacks like the LOTR attack (SEC’21) exploiting contention on the LLC ring interconnect, or cache-occupancy-based attacks like Shusterman et al. (SEC’20, SEC’21) are outside the threat model of current randomized caches. While cache-partitioning solutions like Catalyst (HPCA’16), DAWG (MICRO’18), MI6 (MICRO’19), Jumanji (MICRO’20), or even our recent Bespoke Cache Enclaves (SEED’21) which provides customizable cache partitions, prevent occupancy-based attacks, they require considerable operating-system support. We expect future randomized caches to incorporate insights from these defenses to prevent a broader class of attacks.

Takeaway-4: As sophisticated defenses eliminate a class of attacks, new attacks are likely to emerge that were outside the scope of previous defenses.

Lastly, all randomized caches are only as secure as the cryptographic functions they use for randomizing the address to set mappings. So it is imperative that randomized caches use constructions with standardized cryptographic functions like QARMA or PRINCE which have undergone cryptanalysis, and avoid custom constructions, which may be prone to weaknesses as noted by BRUTUS (CAL’20) and Purnal et al. (SP’21).

What’s Next for Randomized Caches?

Fully associative randomized caches like MIRAGE provide principled security of eliminating set conflicts, but such approaches incur added storage costs. So one research problem for future works is how to reduce storage and power overheads of randomized caches while maintaining security? Another research problem is formally verifying the security of randomized caches. For instance, CASA (MICRO’20) proposes a transmission channel model to analyze the security of randomized caches, He and Lee (MICRO’17) propose a “Probability of Attack Success” metric for secure caches, while Deng et al. (HaSS’19, ASPLOS’20) propose a three-step model for attacks on secure caches. But how do we formally prove the absence of attacks on new randomized cache defenses? Lastly, with new attacks exploiting contention for cache space or interconnects, how do we adapt randomized cache defenses to prevent such attacks? And as we develop better randomized-cache defenses, can we use the understanding to devise new attacks?

In this author’s opinion, this is just the beginning of the battle for secure caches. But even as this cycle of attack and defense continues, the security of our systems only increases with each iteration.

Acknowledgments: Many thanks to Moin Qureshi and Mengjia Yan for useful feedback on early drafts of this article.

About the Author: Gururaj Saileshwar is a graduating (Spring 2022) Ph.D. student at Georgia Tech, Atlanta, USA, advised by Prof. Moinuddin Qureshi, exploring hardware security. He is interested in a wide variety of topics at the intersection of computer architecture and system security, including cache side-channels, transient execution attacks and defenses, memory integrity, memory safety, and others. Gururaj will be seeking a faculty position in 2021-2022.

Disclaimer: These posts are written by individual contributors to share their thoughts on the Computer Architecture Today blog for the benefit of the community. Any views or opinions represented in this blog are personal, belong solely to the blog author and do not represent those of ACM SIGARCH or its parent organization, ACM.