[Editor’s Note: I’m very happy to announce that Christina Delimitrou of Cornell University will be serving as the blog’s Associate Editor. Thank you, Vijay Janapa Reddi, for your amazing service in this role for the last three years!]

Chip vendors face significant challenges: the continued slowing of Moore’s Law, which is increasing the time between new technology nodes; skyrocketing manufacturing costs for silicon; and the end of Dennard scaling. The slowing of Moore’s Law makes it increasingly difficult to pack more functionality on a single chip. If transistor sizes stay constant, more functionality could be integrated via larger chips. However, larger chips are undesirable due to significantly higher costs: verification costs are higher, and manufacturing defects in densely packed logic can dramatically reduce wafer yield, which translates into higher manufacturing cost.

In the absence of device scaling, domain specialization provides an opportunity for architects to deliver more performance and greater energy efficiency. However, it has been difficult to make a financial case for domain specialization, given the limited market sizes for specific compute functionality coupled with the high manufacturing costs and high non-recurring engineering costs of developing a customized piece of silicon. Recently, machine learning as a killer app has made the case for specialization, but what about other, less popular application areas? The increasing popularity of chiplet-style designs offers an economically viable path towards realizing custom solutions tailored to different domains and market segments.

What are chiplet-based systems?

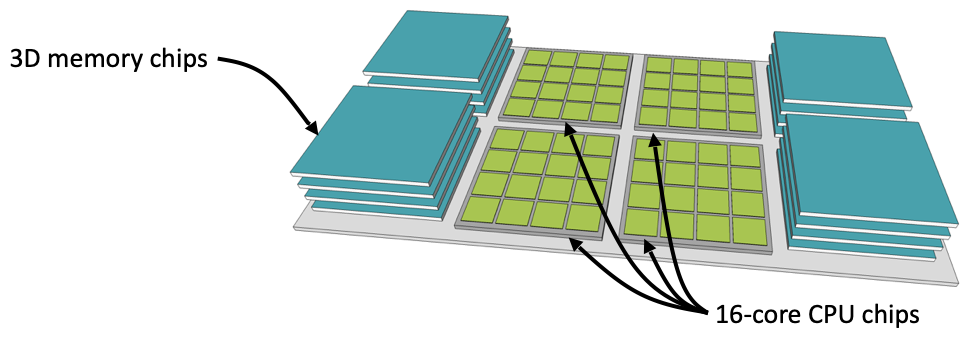

Chiplet-based systems integrate multiple discrete chips within the same package using an integration technology such as a multi-chip module (MCM) or a silicon interposer. Figure 1 shows a hypothetical chiplet-based system composed of 4 CPU chiplets and 4 memory stacks integrated via a silicon interposer. Chiplet-based design approaches have seen growing commercial adoption by companies such as Intel, AMD, and Xilinx. Xilinx was an early adopter of chiplets, placing multiple dies side by side on a passive silicon interposer. Intel’s Foveros takes a 3D approach, stacking its chiplets on a base piece of silicon akin to an active interposer. In recent years, AMD has produced several chiplet designs built as MCMs.

Figure 1: Hypothetical chiplet-based system featuring 4 CPU chiplets and 4 stacks of memory.

What are the benefits of using chiplets?

Current chiplet architectures provide a number of benefits that make them attractive to build. These include cost, flexibility, and sustainability.

Cost: Traditionally, manufacturing a design as a single monolithic chip was cheaper, with fewer parts to assemble and no area overhead for communicating between chips. Chiplets reintroduce multiple parts and require some silicon area to be dedicated to inter-chiplet communication. However, chiplets can still reduce cost overall through improved manufacturing yield and reduced engineering cost. Smaller chips have lower manufacturing costs: a single manufacturing defect can render an entire die inoperable, and a large die is more likely to contain a defect than a small one, so large dies have lower yield rates. Cost is further reduced because more small dies fit on a large circular wafer, leaving less unusable silicon at the edge.
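The yield argument above can be illustrated with a rough back-of-the-envelope sketch. It assumes a simple Poisson defect model and a common first-order dies-per-wafer approximation. The die areas, defect density, and function names are hypothetical values chosen for illustration, not figures from any vendor, and the sketch ignores the interface area and packaging costs that chiplets add.

```python
import math


def poisson_yield(die_area_mm2, defects_per_mm2):
    """Probability that a die has zero defects (simple Poisson model, an assumption)."""
    return math.exp(-die_area_mm2 * defects_per_mm2)


def gross_dies_per_wafer(die_area_mm2, wafer_diameter_mm=300.0):
    """First-order estimate of whole dies on a circular wafer.

    The second term approximates partial dies lost along the wafer edge.
    """
    wafer_area = math.pi * (wafer_diameter_mm / 2.0) ** 2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2.0 * die_area_mm2)
    return int(wafer_area / die_area_mm2 - edge_loss)


def systems_per_wafer(die_area_mm2, dies_per_system, defects_per_mm2):
    """Expected number of complete systems built from the good dies of one wafer."""
    good_dies = gross_dies_per_wafer(die_area_mm2) * poisson_yield(
        die_area_mm2, defects_per_mm2
    )
    return good_dies / dies_per_system


# Hypothetical numbers: 0.1 defects per cm^2, and one 600 mm^2 monolithic die
# versus four 150 mm^2 chiplets providing the same total logic area.
DEFECT_DENSITY = 0.001  # defects per mm^2 (assumed)

monolithic = systems_per_wafer(600.0, 1, DEFECT_DENSITY)
chiplets = systems_per_wafer(150.0, 4, DEFECT_DENSITY)

print(f"monolithic systems per wafer: {monolithic:.1f}")
print(f"chiplet systems per wafer:    {chiplets:.1f}")
```

Under these assumptions, the four-chiplet configuration produces noticeably more working systems per wafer than the monolithic die. A realistic cost model would also need to account for assembly, test, and the silicon spent on inter-chiplet links.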

A monolithic design requires all functionality in a system-on-chip (SoC) to be implemented in the same process technology. However, not all functionality in an SoC benefits from moving to the bleeding-edge process technology. For example, I/O and analog components shrink less than digital logic and SRAM when moving to a smaller node. Forcing all components into the same advanced process node incurs added cost to re-engineer and verify those designs. Allowing some components to stay in an older process facilitates design reuse and avoids these added costs when migration offers no measurable performance or energy efficiency benefit.

Flexibility: Small, simple chiplets can be more easily repurposed across market segments. Moving from mobile to desktop to server may simply require increasing the number of chiplets integrated into a package. This ease of movement between market segments ultimately translates into additional cost savings. Chiplets also offer a way around the reticle limit: by integrating many small chiplets, designers can build bigger systems than would be feasible using a monolithic piece of silicon.

Sustainability: As the impacts of climate change grow more alarming, improving sustainability in computer systems requires new innovations and approaches. In her recent MICRO 2020 keynote, Bobbie Manne highlighted the improved sustainability associated with chiplets. As noted above, some chiplets in an SoC will remain in older process technologies. As a process technology matures, the manufacturing process itself becomes more efficient. From a carbon emissions standpoint, it is therefore preferable to continue manufacturing chips in an older process rather than transition to the bleeding-edge node. Chiplet-based systems may help move the needle towards more sustainable computing solutions by leveraging this improved manufacturing efficiency.

Some Key Challenges

For a chiplet solution to be viable, the cost of communication between chiplets needs to be similar to the cost of communication within a monolithic system. Ideally, from a communication perspective, the move from a monolithic design to a chiplet design should be completely hidden from the user; in reality, software changes to manage non-uniform communication latencies might be required. Bandwidth and latency may be more constrained through the interposer or package substrate, and clock domain crossings between chiplets must be handled.

To date, chiplet designs primarily come as full stack designs from a single vendor such as AMD. Current single-vendor chiplet solutions allow one company to customize all aspects of the system to maximize performance and reduce power consumption. However, as a future vision, chiplets enable the design of bespoke systems by mixing and matching chiplet IP from different vendors based on the needs of the customer. To realize a vision of bespoke systems, we must move away from proprietary interconnect fabrics. Open fabrics and interfaces will enable integration across multiple vendors. To this end, the Open Compute Project has a subcommittee on the Open Domain Specific Architecture. As part of this work, they are exploring the standards necessary to facilitate the integration of chiplet IP from a range of vendors into a single custom system including the interfaces between chiplets and the protocol stack for communication between different IP. This vision is also espoused by the DARPA Common Heterogeneous Integration and Intellectual Property (IP) Reuse Strategies (CHIPS) program.

Smaller chiplets are cheaper to design and manufacture, which will lower the barrier to entry for a wider range of domain-specific accelerators. Furthermore, the ability to integrate small pieces of custom silicon in different technology nodes will likely make it more economical for vendors to develop domain-specific IP solutions.

To realize multi-vendor chiplet systems, modularity must be a key goal. Each vendor should be able to customize their IP for the target workload and integrate it seamlessly, without the system imposing too many rules on routing, interconnect topology, and so on. This calls for flexible interconnects that accommodate a range of chiplet sizes and configurations without sacrificing performance.

Chiplets offer many exciting opportunities for architectural innovations and provide an opportunity to scale systems beyond the limitations of Moore’s Law.

About the author: Natalie Enright Jerger is the Canada Research Chair in Computer Architecture at the University of Toronto.

Disclaimer: These posts are written by individual contributors to share their thoughts on the Computer Architecture Today blog for the benefit of the community. Any views or opinions represented in this blog are personal, belong solely to the blog author and do not represent those of ACM SIGARCH or its parent organization, ACM.