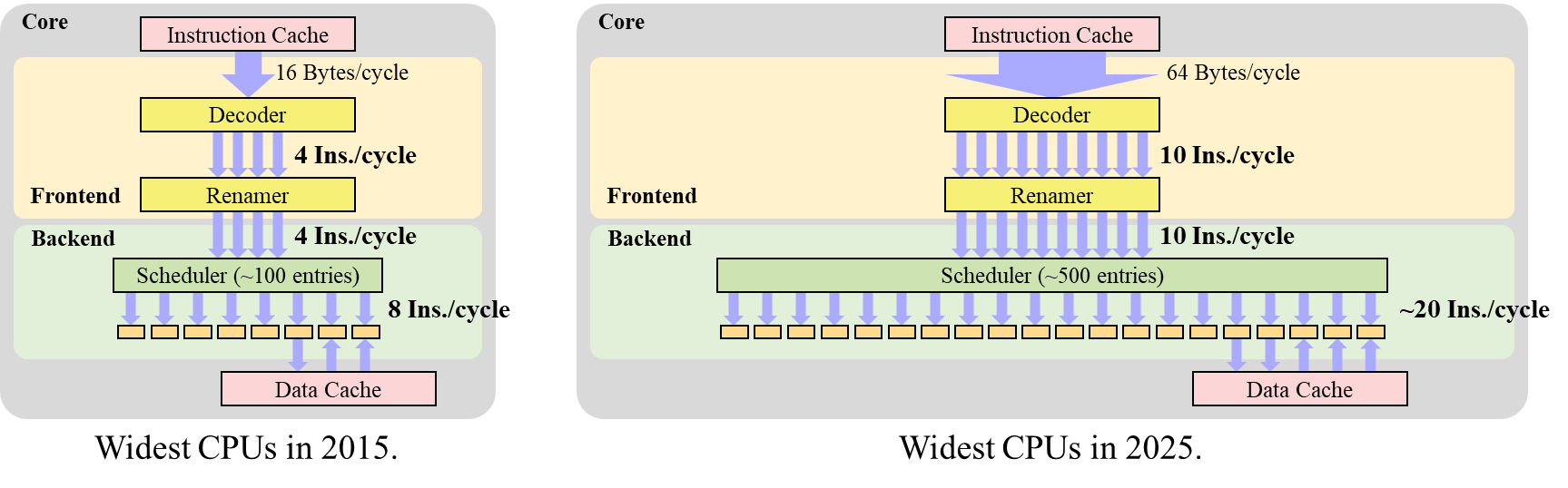

CPU cores have become significantly wider over the past decade. Ten years ago, the highest-performance CPUs could decode at most four instructions per cycle and execute up to eight. Top-tier CPUs released in recent years are more than twice as wide. For example, Apple’s M4 processor, released in 2024, can decode 10 instructions per cycle and execute up to 19, and ARM’s Cortex-X925, also released in 2024, can decode 10 instructions per cycle and execute up to 23. Furthermore, these processors can dynamically schedule around 700 to 900 instructions out of order. Given how quickly this capacity has grown, processors that can manage more than 1,000 instructions out of order are likely to emerge in the near future.

Figure 1: Comparison of widest CPUs in 2015 and 2025.

To create a CPU core that can execute a large number of instructions in parallel, it is necessary to improve both the architecture—which includes the overall CPU design and the instruction set architecture (ISA) design—and the microarchitecture, which refers to the hardware design that optimizes instruction execution.

Historically, CPU evolution has been driven primarily by microarchitectural innovation, since abandoning backward compatibility to change the fundamental structure of an instruction set is commercially infeasible. In academia, extensive research has focused on reducing complexity by organizing scheduling windows hierarchically or by clustering them, typical microarchitectural approaches to building wider CPUs [WIB, LTP]. Researchers have also explored hybrid designs that integrate in-order principles into out-of-order pipelines [FXA, Ballerino].

However, microarchitecture innovations alone have their limits. To enable CPUs to execute more instructions in parallel (i.e., to extract more instruction-level parallelism), it is also important to revisit the ISA.

One of the most notable recent developments in instruction sets is RISC-V, an open instruction set built on decades of RISC research that has attracted attention from both academia and industry. RISC-V is often regarded as a definitive RISC instruction set because it was carefully designed to avoid past pitfalls, such as exposing specific hardware characteristics (e.g., delay slots) that limited the scalability of earlier architectures. As a result, many people may think that, thanks to RISC-V, research into instruction sets for general-purpose CPUs has come to an end.

Challenges in Register Management

As refined as RISC-V is, it still inherits certain fundamental challenges common to RISC architectures. In particular, the complexity of register management remains a major bottleneck in RISC architectures, RISC-V included, as we discuss in more detail below. This problem is becoming increasingly serious as CPU designs continue to widen, the trend described above.

Both CPUs introduced at the beginning of this article can execute up to 19 or 23 instructions per cycle, yet they can decode only about half as many (10) per cycle. This limitation stems from the register renaming stage that follows decoding. Register renaming maps logical registers to physical ones to eliminate false dependencies (write-after-read and write-after-write hazards) caused by the reuse of logical registers.
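To make the renaming step concrete, here is a minimal Python sketch of a rename stage built from a map table and a free list. The `Renamer` class and the register counts are hypothetical and are not modeled on the M4, the Cortex-X925, or any other real core.

```python
from collections import deque


class Renamer:
    """Toy rename stage: a map table plus a free list of physical registers."""

    def __init__(self, num_logical=8, num_physical=32):
        # Initially, logical register i maps to physical register i.
        self.map_table = list(range(num_logical))
        self.free_list = deque(range(num_logical, num_physical))

    def rename(self, dest, src1, src2):
        # Sources read the current mappings, preserving true dependencies.
        p_src1 = self.map_table[src1]
        p_src2 = self.map_table[src2]
        # The destination gets a fresh physical register, so a later write to
        # the same logical register cannot clobber a value an older reader needs.
        p_dest = self.free_list.popleft()
        old = self.map_table[dest]  # kept so the mapping can be freed or restored
        self.map_table[dest] = p_dest
        return p_dest, p_src1, p_src2, old


r = Renamer()
a = r.rename(1, 2, 3)  # R1 <- R2 + R3
b = r.rename(4, 1, 1)  # R4 <- R1 + R1   (true dependency on R1)
c = r.rename(1, 5, 6)  # R1 <- R5 + R6   (reuses R1: a false dependency)
print(a, b, c)  # (8, 2, 3, 1) (9, 8, 8, 4) (10, 5, 6, 8)
```

Because the second write to R1 receives physical register 10 rather than 8, the R4 instruction can still safely read physical register 8. Note that every renamed instruction reads the map table several times and writes it once; doing this for ten instructions in the same cycle is what makes the table so heavily multi-ported.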

Register renaming requires a highly multi-ported map table, because every instruction in a rename group must read its source mappings and update its destination mapping in the same cycle. Such memory incurs significant chip area and power costs, which makes it difficult to simply scale up the rename width. Managing the mapping between logical and physical registers is also inherently complex. For instance, when a branch misprediction occurs, the renames performed by all wrong-path instructions after the mispredicted branch must be undone, restoring the mapping table to its state at the branch. The cost of this recovery grows with the size of the reorder buffer (ROB).
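The recovery problem can be illustrated with a ROB walk: each entry remembers the mapping its instruction overwrote, and on a misprediction the wrong-path entries are undone youngest first. This Python sketch is a simplification under stated assumptions (real cores may instead checkpoint the map table, or combine both techniques), but it shows why the work grows with the number of in-flight instructions, and hence with ROB size.

```python
from collections import deque

NUM_LOGICAL, NUM_PHYSICAL = 8, 64
map_table = list(range(NUM_LOGICAL))
free_list = deque(range(NUM_LOGICAL, NUM_PHYSICAL))
# One ROB entry per register-writing instruction: (logical dest, new phys, old phys).
# Branches and stores, which write no register, are omitted for simplicity.
rob = []


def rename(dest, src1, src2):
    srcs = (map_table[src1], map_table[src2])
    new = free_list.popleft()
    rob.append((dest, new, map_table[dest]))
    map_table[dest] = new
    return new, srcs


def squash_younger_than(rob_index):
    """Undo every rename younger than rob_index, youngest first.

    Returns how many ROB entries had to be walked."""
    steps = 0
    while len(rob) > rob_index + 1:
        dest, new, old = rob.pop()
        map_table[dest] = old      # restore the previous mapping
        free_list.appendleft(new)  # return the wrong-path register to the free list
        steps += 1
    return steps


rename(1, 2, 3)                 # last correct-path instruction (ROB index 0)
snapshot = list(map_table)
for _ in range(5):              # five wrong-path instructions after a branch
    rename(1, 1, 4)
steps = squash_younger_than(0)  # the branch turns out to be mispredicted
print(steps, map_table == snapshot)  # 5 True
```

With a ROB holding hundreds of instructions, the number of entries that may need to be undone grows accordingly.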

Distance-Based Instruction Set

Our solution to these issues is a distance-based instruction set. In a typical instruction set, operands are specified by register numbers, as in R1 <- R2 + R3. In a distance-based instruction set, by contrast, each source operand is specified by how far back (for example, how many instructions earlier) its value was produced. For example, an instruction that adds the results produced two and three instructions earlier could be written as add ^2 ^3.
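A toy interpreter makes the operand semantics concrete. The `^k` syntax follows the `add ^2 ^3` example above; the `li` and `mul` operations, the Python representation, and the unbounded result list are illustrative assumptions (real hardware keeps only a bounded window of recent results).

```python
import operator

# Hypothetical operation set for this toy example.
OPS = {"li": lambda x: x, "add": operator.add, "mul": operator.mul}


def operand(results, arg):
    """'^k' names the result produced k instructions earlier; ints are immediates."""
    if isinstance(arg, str) and arg.startswith("^"):
        k = int(arg[1:])
        if not 1 <= k <= len(results):
            raise IndexError(f"{arg} reaches before the first instruction")
        return results[len(results) - k]
    return arg


def run(program):
    results = []  # results[i] is the value produced by instruction i; never overwritten
    for op, *args in program:
        values = [operand(results, a) for a in args]
        results.append(OPS[op](*values))  # every instruction produces exactly one result
    return results


program = [
    ("li", 5),             # i0 -> 5
    ("li", 7),             # i1 -> 7
    ("li", 100),           # i2 -> 100
    ("add", "^2", "^3"),   # i3 -> i1 + i0 = 12
    ("mul", "^1", "^2"),   # i4 -> i3 * i2 = 1200
]
print(run(program))  # [5, 7, 100, 12, 1200]
```

No instruction names a destination: its result simply occupies the next position, so there is nothing for a later instruction to overwrite.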

With this approach, a value produced by an instruction is never overwritten, which eliminates false dependencies. This is conceptually similar to static single assignment (SSA) form, an intermediate representation (IR) widely used in compilers, in which each variable is assigned exactly once. However, while SSA works well as a compiler IR, applying it directly to an instruction set is not sufficient to represent a full program: for example, SSA relies on φ-functions to merge values where control-flow paths join, and a hardware operand can only reach back a bounded distance. The instruction sets described below introduce specific mechanisms to overcome these limitations.

To apply this concept to real architectures, we have proposed the following distance-based instruction sets, each with distinct characteristics and target applications:

- STRAIGHT: This approach represents a source operand by ‘how many instructions earlier’ its value was generated. It is the simplest form: it eliminates the need for register renaming and significantly reduces the size of the scheduler, resulting in a lightweight hardware implementation.

- Clockhands: This approach introduces multiple register groups and represents a source operand by specifying ‘which group’ and ‘how many writes earlier within that group’ the value was produced. While it slightly increases hardware complexity compared to STRAIGHT, it provides greater flexibility in machine code by efficiently handling both short-lived and long-lived values.

- TURBULENCE: Designed specifically for GPUs, this instruction set adopts a hybrid approach that allows both distance-based and traditional register-based referencing. By dynamically selecting the appropriate method, TURBULENCE achieves the benefits of distance-based specification with minimal overhead.
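The difference between STRAIGHT and Clockhands operands can be sketched in a few lines of Python. In this hypothetical model each group keeps its own write history, and a source operand `group[k]` names the value written k writes ago in that group; with a single group it reduces to STRAIGHT-style distances. The group names `t` and `s` are borrowed from the Clockhands assembly in Figure 2, while the number of groups, their roles, and the unbounded histories are assumptions made for illustration.

```python
from collections import defaultdict


class GroupedHistory:
    """Toy Clockhands-style operand model: one write history per register group."""

    def __init__(self):
        self.writes = defaultdict(list)  # group name -> values in write order

    def write(self, group, value):
        self.writes[group].append(value)

    def read(self, group, distance):
        # group[distance] = the value written `distance` writes ago in that group.
        history = self.writes[group]
        if not 1 <= distance <= len(history):
            raise IndexError(f"{group}[{distance}] is out of range")
        return history[-distance]


h = GroupedHistory()
h.write("s", 10)        # a long-lived value (e.g., a loop bound) goes to group s
for i in range(3):      # short-lived temporaries go to group t
    h.write("t", i)
print(h.read("s", 1), h.read("t", 1), h.read("t", 3))  # 10 2 0
```

Because the long-lived value sits in its own group, it remains `s[1]` no matter how many temporaries are written in between; with a single shared history, its distance would grow with every instruction.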

![RISC-V example: bne a1, a5, .L3; STRAIGHT example: bne [1], [4], .L2; Clockhands example: bne t[2], s[3], .L3; TURBULENCE example: bne ^1, R2, .L3](https://www.sigarch.org/wp-content/uploads/2025/03/image-928.png)

Figure 2: Comparison of assembly code for the same C code in four ISAs

These distance-based instruction sets eliminate the need for register-renaming hardware, thereby removing the bottlenecks associated with traditional register-based architectures. We therefore believe this is a highly promising approach for CPUs capable of executing more than 20 instructions per cycle. In addition, for GPUs, where out-of-order execution has so far been impractical, a distance-based instruction set should make the performance gains of out-of-order execution far more realistic.
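To see why no renaming hardware is needed, consider a STRAIGHT-like model in which results are written in program order into a circular physical register file. This Python sketch assumes that layout and a hypothetical 64-entry file, and it ignores the question of when a slot may safely be reused, which a real design must handle. Each instruction's destination and source slots depend only on its own sequence number and encoded distances, so every instruction in a decode group can be processed in parallel, with no map table lookups and no comparisons against other instructions in the group.

```python
N = 64  # hypothetical number of physical registers (used as a circular buffer)


def locate(seq, distances):
    """Map instruction number `seq` and its source distances to register slots.

    The destination is implied by the instruction's position, and each source
    is found by subtraction, so no map table lookup is required.
    """
    dest = seq % N
    srcs = [(seq - d) % N for d in distances]
    return dest, srcs


# A four-instruction decode group: (sequence number, source distances).
group = [(62, [1, 2]), (63, [1]), (64, [2, 3]), (65, [4])]
slots = [locate(seq, ds) for seq, ds in group]
print(slots)  # [(62, [61, 60]), (63, [62]), (0, [62, 61]), (1, [61])]
```

The third instruction reads the result of the first one in the same group (slot 62) through plain arithmetic, where a conventional renamer would need dedicated intra-group dependency-check logic.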

Compiler Technology

Specifying operands by distance solves various problems. It is a simple idea, but developing a compiler for it is challenging. The primary difficulty arises from branches: the distance to a referenced value depends on the execution path taken. To address this, we developed compilers that guarantee correct execution by either inserting NOPs to equalize distances across paths or inserting copy instructions that relay values and adjust distances accordingly.
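The two strategies can be compared with a small calculation. The Python sketch below considers a value produced before an if/else and read by the first instruction after the join. It assumes that every executed instruction, including the branch and any NOPs, advances the distance by one; the function names are hypothetical and do not describe our compiler's internals.

```python
def distance_at_join(path_len):
    """Distance from the join's first instruction back to the producer, given
    `path_len` instructions executed after the producer on this path."""
    return path_len + 1


def pad_with_nops(then_len, else_len):
    """Strategy 1: pad the shorter path with NOPs so both paths have equal length.

    Returns (NOPs on then-path, NOPs on else-path, distance used at the join)."""
    longest = max(then_len, else_len)
    return longest - then_len, longest - else_len, distance_at_join(longest)


def relay_with_copies(then_len, else_len):
    """Strategy 2: end each path with a copy of the value.

    Returns (copy's source distance on then-path, on else-path, distance at join).
    The consumer always reads the copy, which is one instruction back."""
    return distance_at_join(then_len), distance_at_join(else_len), 1


# The value is produced before an if/else whose paths execute 3 and 1 instructions.
print(pad_with_nops(3, 1))      # (0, 2, 4)
print(relay_with_copies(3, 1))  # (4, 2, 1)
```

NOP padding adds slots only on the shorter path, whereas relaying adds one copy to every path but makes the consumer's operand independent of the path taken.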

Our STRAIGHT compiler, built on LLVM, can now compile all benchmarks in SPEC CPU2017, a widely used standard suite for evaluating CPU performance, and the resulting programs execute correctly. This demonstrates that, although the distance-based representation is novel, targeting it is no harder from the programmer's perspective than targeting a conventional register-based instruction set.

Hardware Implementation

Implementing and verifying a proposed architecture in actual hardware is far more challenging than validating it in simulation. Yet seeing it operate successfully is an immensely rewarding experience.

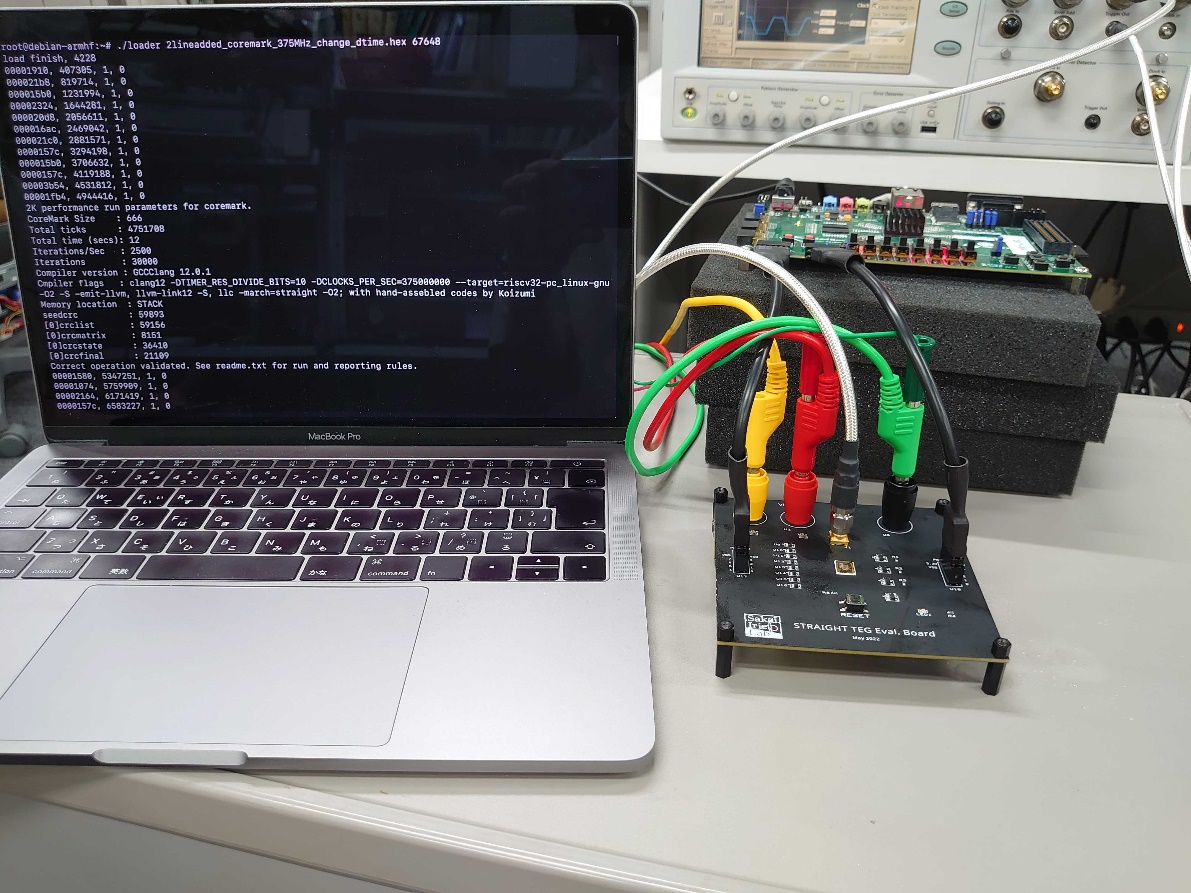

We have designed a STRAIGHT CPU and a Clockhands CPU in SystemVerilog, each functionally equivalent to RV32IM. Implementing and verifying them on FPGAs confirmed that these architectures reduce circuit area. We have also fabricated the STRAIGHT CPU as a physical chip twice, in configurations comparable to cutting-edge CPUs, including fetching eight instructions per cycle.

Figure 3: A STRAIGHT chip on a testbed, just finished running CoreMark correctly.

Distance-based instruction sets are no longer merely theoretical; working chips already exist!

A Distance-Based GPU in the Near Future?

The era of processors adopting distance-based instruction sets might be closer than you think. Bringing such an instruction set to commercial CPUs could take time, because libraries, operating systems, and other parts of the software stack would need to be modified. The situation is quite different for GPUs. Unlike CPU programs, GPU programs are typically distributed in an intermediate representation (such as PTX for NVIDIA GPUs or SPIR-V for Vulkan), which drivers then translate into the hardware's internal instruction set. This flexibility allows GPU vendors to change their internal instruction sets relatively easily. Because distance-based instruction sets retain all standard instruction behaviors apart from operand specification, they could replace existing GPU instruction sets without major disruption.

Given this adaptability, distance-based GPUs may arrive sooner than expected. Historically, GPUs have achieved a high price-performance ratio by using architectures simpler than those of CPUs and relying on mature process nodes. However, the era of automatic performance gains from process scaling is coming to an end, so architectural innovation will play an increasingly crucial role in improving GPU performance.

TURBULENCE, a distance-based instruction set designed specifically for GPUs, is an architecture that directly addresses this need, paving the way for more efficient and high-performance GPUs in the near future.

Conclusion

In this article, we introduced distance-based instruction set architectures, a novel approach to general-purpose instruction sets. Many might assume that, unlike the instruction sets of specialized AI processors, CPU and GPU instruction sets have already been thoroughly explored, yet exciting innovations continue to emerge in this field. Whether CPUs can keep scaling indefinitely or are approaching a fundamental limit remains one of the most pressing questions in computer architecture today.

Amid these discussions, distance-based instruction sets offer a compelling path toward significantly higher CPU and GPU performance. Are you ready to stay ahead in the next-generation processor revolution? Let’s shape the future of computing together!

About the Authors

Toru Koizumi is an assistant professor at Nagoya Institute of Technology. Koizumi's research centers on hardware-software co-design and spans instruction set architecture, enhancements to out-of-order execution using advanced predictors, compiler construction for novel ISAs, arithmetic circuit design, and fast and accurate floating-point algorithms, with multiple publications on these topics.

Ryota Shioya is an associate professor at the University of Tokyo, specializing in processor microarchitecture. His work addresses diverse aspects of computer architecture and system software. He has also participated in research and development projects on AI hardware and RISC-V GPUs, as well as RISC-V and ARM CPUs, in collaboration with several companies.

Hidetsugu Irie is a professor at the University of Tokyo. His research interests span computer architecture and human-computer interaction. His extensive research on ILP architectures includes work on instruction-block-based, clustered, and tile-based architectures, cache management strategies, and various predictors. He is a Fellow of the Institute of Electronics, Information and Communication Engineers.

Disclaimer: These posts are written by individual contributors to share their thoughts on the Computer Architecture Today blog for the benefit of the community. Any views or opinions represented in this blog are personal, belong solely to the blog author and do not represent those of ACM SIGARCH or its parent organization, ACM.