The Moore’s Law engine that we have come to depend upon is sputtering. It is encouraging architects to innovate in alternative ways to keep the industry moving forward. The most widely accepted approach is using domain specific architectures, and as such, in recent years, we have seen the industry shift its focus from CPUs to GPUs, and we are currently in the era of TPUs. Sometime soon we will have to worry about which letter is not used yet for your xPU design. Unsurprisingly, Hennesey and Patterson say in their distinguished 2018 Turing Award lecture that it is “A New Golden Age for Computer Architecture: Domain-Specific Hardware/Software Co-Design, Enhanced Security, Open Instruction Sets, and Agile Chip Development.” But are domain specific architectures a new thing?

In this article, I share a mobile computing perspective on domain-specific architectures. The mobile industry has been making pioneering advancements in domain-specific architectures (DSA) for over a decade. These remarkable technological advancements have mostly gone unnoticed by the architecture research community. So, in this blog post, I attempt to reconnect the architecture research community with the DSAs we take for granted daily.

A Typical Smartphone SoC

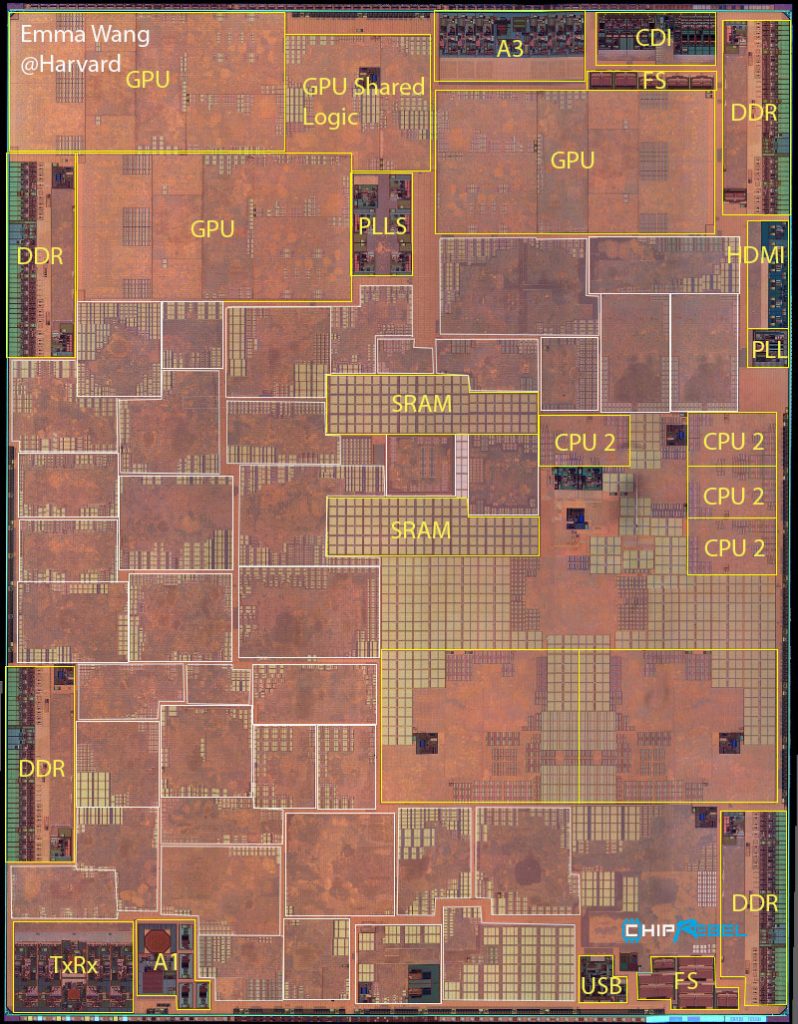

Let’s take a smartphone SoC for discussion. It can contain more than 30 hardware agents. In addition to the CPU and GPU, which take up less than one-third of the total silicon real estate, the other agents include the IPU, MDSP, CDSP, ADSP, ISP, G2DS, JPEG, VENC, VDEC, VPU, Modem, Security, Display, Multimedia, Sensors … the list goes on. The white boxes in the figure below, which exclude the CPU and GPU, are all IPs in a state-of-the-art SoC.

Accelerating application use cases with domain-specific IPs gives an order of magnitude improvement in performance and power efficiency. For instance, the Pixel Visual Core in Google’s new smartphones offers up to 5x increase in performance for HDR processing at one-tenth the power consumption compared to a more traditional mobile SoC solution that is based on programmable IPs such as the CPU and DSP.

Mobile architects have long been forced to work with accelerators since mobile devices must operate under strict power and thermal budgets (TDP of 3 Watts). How else would it be possible for us to enjoy 18 hours of “video playback” on a single charge? So, while server systems have just arrived at the exciting new age of TPUs from CPU and GPU computing, mobile SoCs are far and long ahead in the domain-specific architecture race.

What lessons can we learn from mobile computing? There are many. I focus on two lessons based on challenges that are at the extreme ends of a real-world spectrum. At one end of the spectrum is practicality or a feasibility issue that hinders the widespread adoption of SoCs. At the other end of the spectrum is a technical programmability related issue that impedes the proliferation of the SoCs. Both of these observations are based on my recent industry experience at Google. While I talk about these issues specifically in the context of mobile computing, they are broadly applicable to future domain-specific SoCs that fall outside the scope of traditional mobile computing.

Widespread Adoption Challenge

Not everyone is entirely a fan of domain-specific architectures. The worst of this lot is probably the mobile application developer. Application developers like to write code once and not (re)tune/port the code for a variety of different chipsets. Unfortunately, there are over two billion mobile devices across the planet, consisting of over 100 different SoC chipset variations that are released into the consumer market each year by different SoC vendors.

So, many mobile application developers fear that while some SoCs offer excellent performance, they do not cover enough of the market segment. For instance, a developer may use the Qualcomm Hexagon DSP to accelerate vision processing or machine learning, but the Hexagon DSP is not available on all chipsets. It is only available on the Qualcomm chipsets. Similarly, the Pixel Visual Core is only available on Google phones, not on all Android devices.

For application developers, uniform user experience across devices is one of the ten commandments for mobile computing. Developers seek to run their code evenly well on many different chipsets. So the developers face a dilemma. Should they optimize their code for the best possible features available in the latest mobile devices, or should they compromise and settle for less? Optimizing code for the best-case, high-end devices only is not an option for many developers. It is an impractical decision because building a robust application that performs well on many devices is costly. So, since a large portion of the world still uses “old” smartphones, developers typically choose to optimize their applications for some performance that is “good enough” across many mobile chipsets.

So, while we perceive the benefits of DSA for performance and power efficiency improvements in the post-Dennard scaling and Moore’s Law era, there is a strong chance that there may be “push back” from the developers for SoCs.

Ease of Programmability Challenge

Even if we set aside the issue of uniform adoption of IPs into SoCs made by different chipset vendors, mobile application developers still resist the idea of using accelerators because programming domain specific accelerators is hard. Each IP tends to come with the quirkiness that requires a developer to learn how to write code in a domain-specific language (DSL) and utilize it via custom interfaces. For instance, to program a mobile GPU to do any bit of general purpose computing a developer must learn to write code in OpenGL ES; CUDA is nonexistent on mobile devices and OpenCL is not widely supported. Similarly, to use the Hexagon DSP, a developer must learn the FastRPC protocol and the DSP’s interface description language (IDL). The Pixel Visual Core is no different. The developer must learn the Halide digital image processing language to utilize the Image Processing Unit (IPU).

In general, it seems that application developers must learn a new language that is often harder than mainstream languages (C, Java, Python) to use domain-specific IPs. I just gave an example of four programmable IPs (and their custom languages or interfaces): CPU (C++), GPU (OpenGL ES), DSP (IDL), IPU (Halide). Often, the developer also needs to know many hardware details as compared to programming CPUs. These programmability challenges are the source of much frustration for application developers and, hence, they resist domain-specific accelerators.

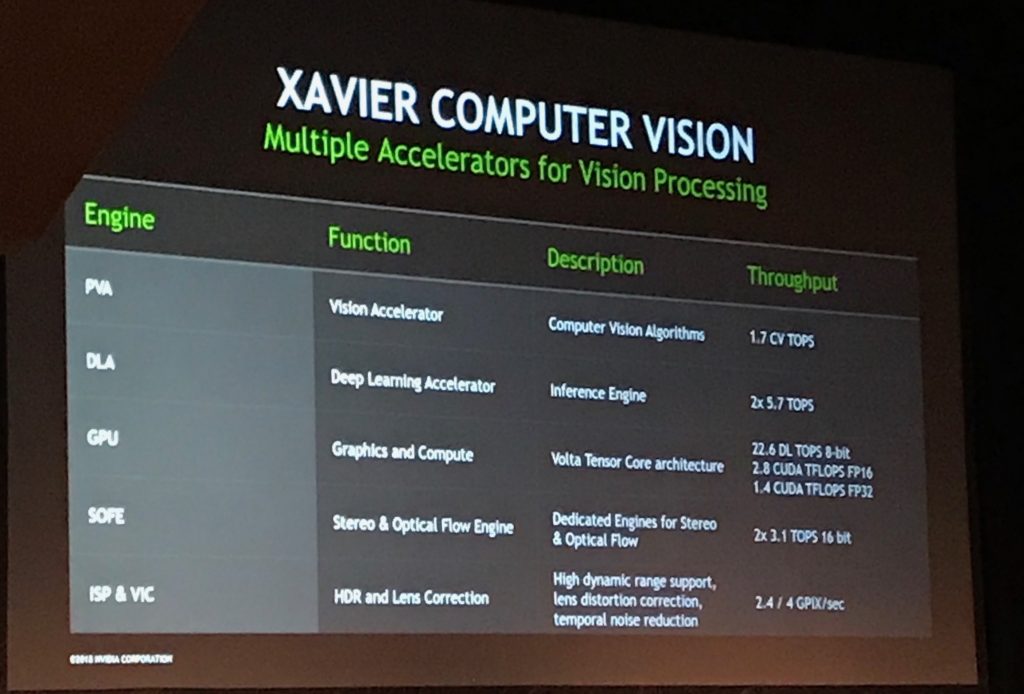

So, while we tout the performance and energy efficiency improvements of domain-specific accelerators, we need to keep the compromise in mind. What’s that compromise? The compromise is that we introduce programmability challenges. Take a peek at the latest NVIDIA Xavier SoC presented at HotChips 2018. It touts “multiple accelerators for vision processing.” It includes a new PVA, DLA, SOFE, ISP and VIC accelerator, in addition to the CPU and GPU. It is an impressive SoC. But I wonder how easy (or difficult) it is to program its suite of vision processing accelerators.

NVIDIA Xavier SoC IPs for computer vision processing, presented at HotChips 2018.

Summary

Domain-specific architectures are fantastic for performance and power efficiency. But we must pay attention to the complexity they introduce into the software. How to manage adoption, programmability, performance variability across different chipsets due to hardware (un)availability, flexibility, etc. are all questions that are just at the tip of the iceberg. I asked Hennessy and Patterson at their Turing Award lecture what they thought was new and different about domain-specific architectures today given that mobile systems have existed for a long time (i.e., the point of this article). Their response was “programmability.” Programmability is key to the widespread adoption of SoCs.

Acknowledgments

The author is an Associate Professor at Harvard University, currently at Google as an “intern” on his sabbatical, prior to which he was an Associate Professor at The University of Texas at Austin. He extends his gratitude to Mark Hill (Gene M. Amdahl Professor) from Univ. of Wisconsin, Paul Whatmough (Machine Learning Architecture and Hardware) from Arm Research/Harvard and the Google SoC Performance Architects for their feedback on this post.

Disclaimer

The views, thoughts, and opinions expressed in the text belong solely to the author, and not necessarily to the author’s current employer, organization, committee or other group or individual.

Disclaimer: These posts are written by individual contributors to share their thoughts on the Computer Architecture Today blog for the benefit of the community. Any views or opinions represented in this blog are personal, belong solely to the blog author and do not represent those of ACM SIGARCH or its parent organization, ACM.