The Cancellation of the Intel Optane Product Line

Recently, Intel announced the cancellation of all Optane products, including both Optane SSDs and Optane Persistent Memory. The news came suddenly but was not entirely unexpected: Micron sold the 3D XPoint fab back in 2021, and Intel had already canceled the consumer-grade Optane SSDs. Commercially, this decision was likely made to reduce losses in the Optane product line. While the cancellation does not put an immediate stop to all Optane-related products and research (Intel still plans to release 3rd generation Optane persistent memory), it is time for computer architects and system researchers to rethink the future of persistent memory¹ research.

In this article, we share our perspective on what this cancellation means for our colleagues in the computer architecture and systems fields, and what the future might look like for persistent memory research.

DRAM Scaling Challenges

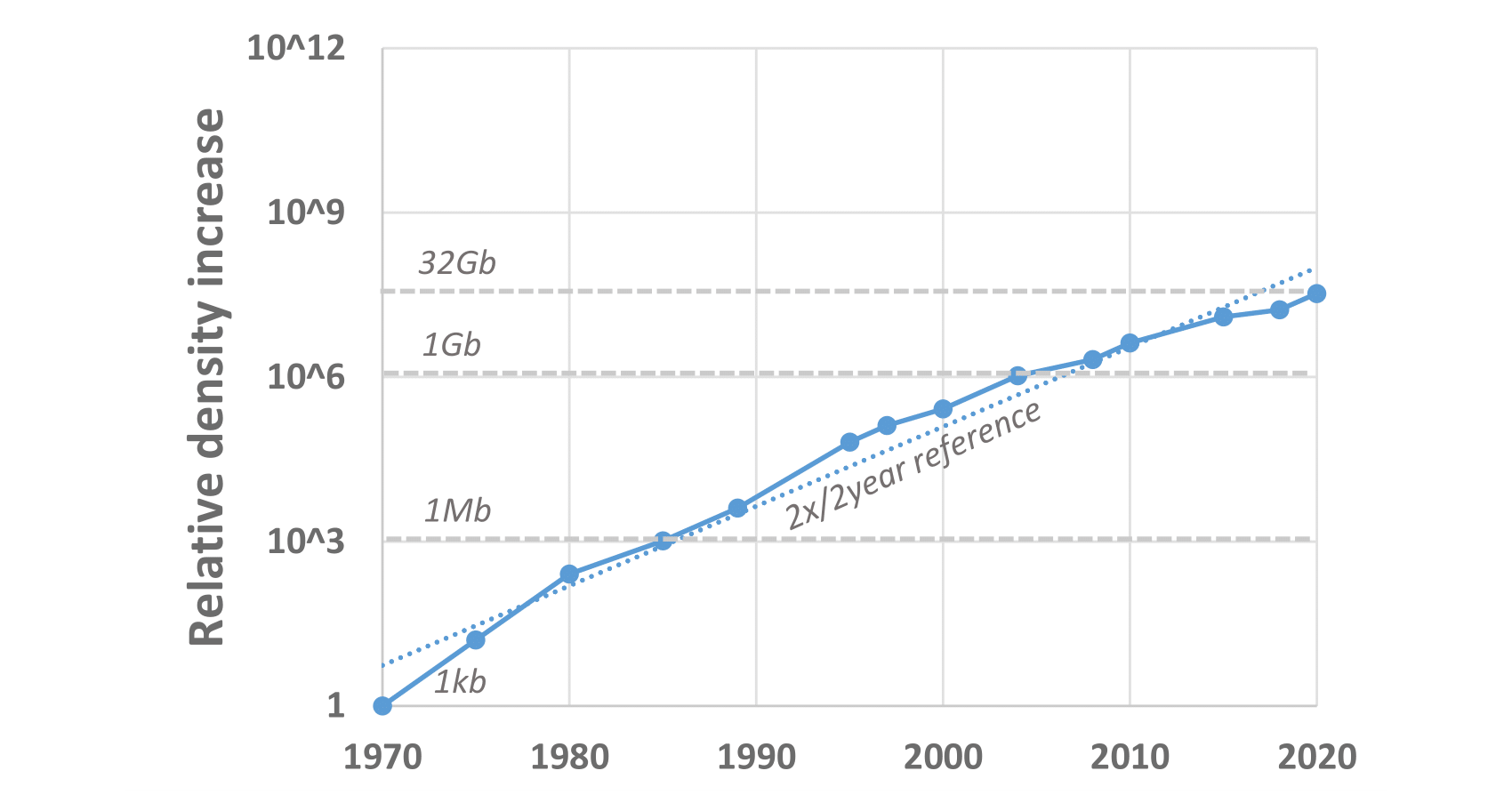

DRAM is the most commonly used technology for main memory. Since its invention in 1967, the growth of DRAM chips has largely benefited from semiconductor technology scaling driven by Moore’s Law, resulting in a capacity increase of more than six orders of magnitude. However, over the last decade, this incredible growth in capacity has been slowing down. At the same time, the volume of data produced globally is growing at an exponential rate. As a result, emerging big data workloads such as data analytics, machine learning, and in-memory caching are putting ever-increasing pressure on memory capacity. Even though advancements such as 3D-stacked memory can temporarily mitigate capacity limitations, the fundamental scaling limitation for main memory remains unsolved.

DRAM density trend over time, which has been below the doubling-every-2-years scaling curve since 2010 (source: Prospects for Memory).

To overcome this scaling limitation, alternative memory technologies have been developed, one of which is persistent memory. In addition to offering higher density, persistent memory also provides data persistence, making it possible to unify the memory and storage tiers.

Research in the Pre-Optane Era

In 2019, Intel released Optane DC Persistent Memory. However, the community had been actively studying persistent memory well before that release. At the device level, the community proposed several persistent memory technologies, such as PCM, STT-RAM, and ReRAM. In 2009, architectural enhancements that improve persistent memory latency, energy consumption, and endurance emerged (Lee et al. [2009]). Right after that, several areas of research to improve persistent memory programmability appeared, including programming interfaces (NVHeap, Mnemosyne) and memory consistency/persistency models (Pelley et al. [2014], Joshi et al. [2015], Lu et al. [2014], Kolli et al. [2016]). Systems specifically designed for persistent memory, such as file systems (BPFS, PMFS, NOVA) and database indexes (B+-Tree, NV-Tree, BzTree, FPTree), demonstrated persistent memory’s potential to provide both high performance and large capacity.

Research after Optane Persistent Memory Release

The release of Optane persistent memory further boosted research efforts in the area. Profiling and benchmark studies such as Yang et al. [2018] and Izraelevitz et al. [2019] revealed the unique device characteristics of Optane persistent memory, some of which were not anticipated in the pre-Optane era. Based on these real device characteristics, the community quickly improved pre-Optane era designs and proposed systems optimized for Optane persistent memory, including file systems (SplitFS), database indexes (LB+-Trees, uTree, DPTree, ROART, PACTree), and consistency/persistency models (Kokolis et al. [2021]). Due to the complex nature of persistent memory programming, programmability continues to be an important topic (Pronto, AutoPersist, go-pmem). At the same time, testing frameworks have been developed to ensure correct failure-recovery from persistent data (PMTest, PMFuzz, XFDetector).

Besides the persistence properties, researchers have also looked into adopting persistent memory as an expansion of the main memory, including work on transparent memory offloading (HeMem, KLOCs, Nimble), data center workloads (Sage, Gill et al. [2020], Bandana, Psaropoulos et al. [2019]), and scientific computing (Peng et al. [2020], Ren et al. [2021]).

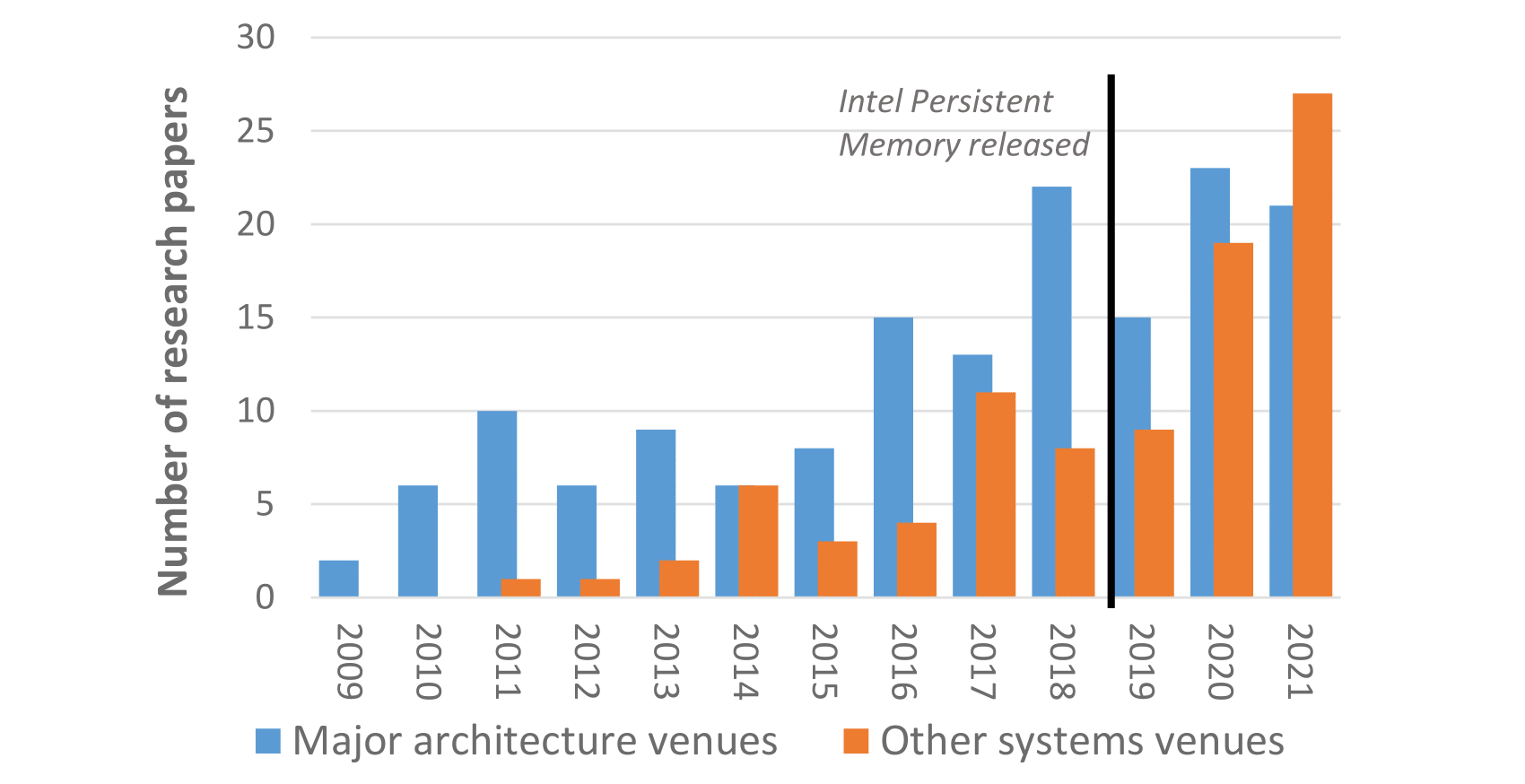

We collected the number of publications in major architecture and other system venues starting from 2008. The year 2022 is not included because some conference proceedings have not yet been released. Persistent memory architecture papers started to appear earlier than system papers, while the number of system-related papers exploded in 2020 after the release of Optane persistent memory in 2019.

The number of persistent memory related publications in major architecture and system venues. Major architecture venues include: ISCA, MICRO, ASPLOS, HPCA. Other system venues include: OSDI, SOSP, ATC, EuroSys, FAST, SIGMOD, VLDB.

Looking beyond Optane Persistent Memory

While the Intel Optane product line has been discontinued, the problems it originally aimed to address, such as DRAM capacity scaling and slow persistence, remain unsolved, suggesting that the community should look for alternatives. Today, there are several exciting directions for future research:

- Compute Express Link (CXL): CXL is an industry-standard cache-coherent interconnect running on top of PCIe. In particular, the CXL.mem protocol enables memory expanders such as the Samsung 512GB module to increase system memory capacity without consuming DIMM slots. Although there are no commercially available CXL memory devices yet, early prototypes from Microsoft (Li et al. [2022]) and Meta (TPP) show the great potential of the technology. Further research is required to understand how upcoming CXL devices will interact with today’s workloads.

- Memory tiering: While using lower-tier memory devices to alleviate capacity pressure on DRAM is not a new concept (e.g., Linux swap), the emergence of new workloads and memory devices reveals new opportunities and challenges in memory tiering. One popular approach is to transparently move data based on hotness (Lagar-Cavilla et al. [2019], TMO, HeMem), but its effectiveness for applications that, for example, do not have good locality remains to be investigated. As a result, how to intelligently move data to best exploit the performance tradeoffs of different memory devices requires further innovation.

- Data persistence: Upcoming storage class memory devices from Kioxia and Everspin may become suitable alternatives to Intel Optane persistent memory. In addition, future storage devices with memory semantics, such as Samsung’s CXL SSD, have the potential to fill the performance gap between memory and storage, similar to what Optane persistent memory did.
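To make the locality concern in the memory tiering item concrete, here is a minimal sketch of epoch-based hotness tiering. It is a toy model written for this post, not the mechanism used by TMO, HeMem, or any other cited system: the function `fast_tier_hit_rate`, the epoch length, the tier sizes, and the two synthetic traces are all illustrative assumptions.

```python
import random
from collections import Counter


def fast_tier_hit_rate(trace, fast_pages, epoch=1000):
    """Replay a page-access trace under a toy epoch-based hotness policy.

    At the end of every epoch, the `fast_pages` most-accessed pages of that
    epoch are placed in the fast tier; every other page lives in the slow
    tier. Returns the fraction of accesses served from the fast tier.
    """
    fast = set()        # pages currently resident in the fast tier
    counts = Counter()  # per-page access counts for the current epoch
    hits = 0
    for i, page in enumerate(trace, start=1):
        if page in fast:
            hits += 1
        counts[page] += 1
        if i % epoch == 0:
            # Promote the hottest pages of the epoch, demote the rest.
            fast = {p for p, _ in counts.most_common(fast_pages)}
            counts.clear()
    return hits / len(trace)


rng = random.Random(0)  # fixed seed so the run is repeatable
num_pages, fast_pages, n = 1000, 100, 50_000

# Assumed skewed workload: 90% of accesses go to 5% of the pages.
hot = list(range(50))
skewed = [rng.choice(hot) if rng.random() < 0.9 else rng.randrange(num_pages)
          for _ in range(n)]

# Assumed uniform workload: every page is equally likely (poor locality).
uniform = [rng.randrange(num_pages) for _ in range(n)]

skewed_rate = fast_tier_hit_rate(skewed, fast_pages)
uniform_rate = fast_tier_hit_rate(uniform, fast_pages)
print(f"skewed:  {skewed_rate:.2f}")
print(f"uniform: {uniform_rate:.2f}")
```

In this toy setup, the skewed trace should see most of its accesses served from the fast tier, while the uniform trace should stay close to the fast tier's share of all pages, because past hotness says little about future accesses. Real workloads and real policies are far more complex, so this only illustrates why locality matters for hotness-based tiering.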

Last but not least, we cannot forget about our protagonist, Optane persistent memory. While it has been discontinued, Optane persistent memory remains one of the most mature storage class memory devices in the community. Thus, researchers can utilize it to provide performance estimations for future lower-tier memory devices. Alternatively, CXL researchers can use remote NUMA nodes to emulate CXL memory devices (details in TPP). Of course, simulators are always an option as well.

That being said, we must learn from past lessons. In the pre-Optane era, researchers used DRAM-based emulators and simulators to obtain insightful results. However, later works such as Yang et al. [2018] revealed that several assumptions made by pre-Optane emulators/simulators were untrue, invalidating the resulting conclusions. Thus, we must exercise extra caution when using emulation/simulation today.
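As an illustration of the remote-NUMA emulation mentioned above, the sketch below builds a `numactl` command line that pins an application's threads to one NUMA node while binding its memory to another. The helper `numactl_command`, the node numbers (a two-socket machine with nodes 0 and 1), and the `./my_benchmark` binary are hypothetical; this is not necessarily the exact setup used in TPP.

```python
import shlex


def numactl_command(app_argv, cpu_node=0, mem_node=1):
    """Build a numactl invocation that runs `app_argv` with its threads on
    `cpu_node` and all of its memory allocated from `mem_node`."""
    return ["numactl", f"--cpunodebind={cpu_node}", f"--membind={mem_node}",
            *app_argv]


# Hypothetical benchmark binary and arguments, used only for illustration.
cmd = numactl_command(["./my_benchmark", "--threads", "8"])
print(shlex.join(cmd))
# prints: numactl --cpunodebind=0 --membind=1 ./my_benchmark --threads 8
```

The resulting list can be passed to `subprocess.run` on a machine with `numactl` installed. In line with the caution above, the latency and bandwidth of a remote socket only approximate those of a real CXL device, so results obtained this way should be treated as estimates.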

Closing Thoughts

Should research be closely tied to the industry? Yes, research should be relevant to real use cases, but insights should ideally avoid close ties with a specific product line such that they can remain valuable. While the cancellation of Optane persistent memory certainly has disrupted many research efforts in our community, several exciting directions are just over the horizon.

About the Authors:

Xinyang (Kevin) Song is a second year research Master’s student at the University of Toronto under Prof. Gennady Pekhimenko. His research focuses on heterogeneous memory systems for emerging big data applications.

Sihang Liu received his PhD degree from the University of Virginia in 2022. He will be joining the School of Computer Science at the University of Waterloo as an Assistant Professor in 2023. His research interests lie in computer systems and architecture. His work has been recognized with a 2019 MICRO Top Picks Honorable Mention, a place on the final list for the NVMW 2020 Memorable Paper Award, and a Google Ph.D. Fellowship.

Gennady Pekhimenko is an Assistant Professor in the CS department and (by courtesy) the ECE department at the University of Toronto, where he leads the EcoSystem (Efficient Computing Systems) group. Gennady is also a Faculty Member at the Vector Institute and a CIFAR AI chair. He is a recipient of the Google Scholar Research Award, VMware Early Career Faculty Grant, Amazon Machine Learning Research Award, Facebook Faculty Research Award, and Connaught New Researcher Award. His research interests are in the areas of computer architecture, compilers, and systems for machine learning.

Disclaimer: These posts are written by individual contributors to share their thoughts on the Computer Architecture Today blog for the benefit of the community. Any views or opinions represented in this blog are personal, belong solely to the blog author and do not represent those of ACM SIGARCH or its parent organization, ACM.

1. In this blog post, we use persistent memory and non-volatile memory interchangeably to refer to memories that are byte-addressable, low latency, and can maintain data on power loss, such as PCM, STT-RAM, and ReRAM. We use “Optane persistent memory” to refer to Intel’s persistent memory product.