A point cloud literally means a collection of points. One can think of a point as a sample of a surface, with each point represented by its [x, y, z] coordinates in 3D space. A point can also carry other attributes, such as a normal, RGB color, albedo, and a Bidirectional Reflectance Distribution Function (BRDF), which are useful in downstream tasks such as modeling, rendering, and scene understanding.

Point cloud data is by no means a new concept. It has been widely used in fields such as graphics and physics simulation for decades, but is becoming ever more relevant, mainly because of two trends: 1) the prevalence of cheap and convenient point cloud acquisition devices, and 2) the emergence of applications that make use of point clouds (e.g., cultural heritage modeling and rendering, Augmented Reality, autonomous vehicles).

This post showcases how point clouds are powering some of the most exciting applications today, and discusses challenges for computer systems and hardware architecture.

Point Cloud Acquisition

Point clouds are most commonly acquired using the time-of-flight (TOF) principle. Typical TOF acquisition devices, such as today’s LiDARs, use a laser as the light source. While traditional mechanical LiDARs are expensive (upwards of $100,000), solid state LiDARs (based on Single-Photon Avalanche Diodes) have the potential to significantly lower that price. For instance, the recent iPhone 12 Pro has a solid state LiDAR, albeit one with a shorter sensing range, so it is mostly used indoors.
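To make the TOF principle concrete, here is a minimal sketch of the range calculation for a pulsed device. It assumes an idealized direct-TOF measurement in which the device times a single pulse's round trip; the function names are illustrative and not taken from any LiDAR SDK.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact, by SI definition


def tof_range_m(round_trip_s: float) -> float:
    """Distance to the target given the round-trip time of a light pulse."""
    # The pulse travels to the target and back, so halve the path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def round_trip_s(range_m: float) -> float:
    """Inverse relation: round-trip time for a target at the given range."""
    return 2.0 * range_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    # A target 5 m away returns the pulse after roughly 33 ns.
    print(f"{round_trip_s(5.0) * 1e9:.2f} ns")
    # Resolving 1 cm in range needs timing resolution of roughly 67 ps.
    print(f"{round_trip_s(0.01) * 1e12:.1f} ps")
```

The picosecond-scale timing in the second line hints at why the sensing electronics, and not just the laser, matter for cost and range.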

Alternatively, point clouds can be obtained using trigonometry, the principle behind (structured light) stereo cameras (e.g., Intel’s RealSense camera) and classic laser scanners. These devices generate a depth map, usually through triangulation. The depth map is then converted to a point cloud, pixel by pixel. Among many examples, 3D models of Michelangelo sculptures were obtained using this approach in the early 2000s.
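The pixel-by-pixel conversion from a depth map to a point cloud can be sketched as follows. This is a simplified illustration that assumes an ideal pinhole camera with no lens distortion; the intrinsics `fx`, `fy`, `cx`, and `cy` (focal lengths and principal point, in pixels) are assumptions introduced for the example, and a real device would supply its own calibration.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def depth_map_to_points(
    depth: List[List[float]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[Point]:
    """Back-project each valid depth pixel (u, v, z) to a 3D point (x, y, z)."""
    points: List[Point] = []
    for v, row in enumerate(depth):        # v: pixel row
        for u, z in enumerate(row):        # u: pixel column, z: depth
            if z <= 0.0:                   # treat non-positive depth as "no return"
                continue
            x = (u - cx) * z / fx          # pinhole model: u = fx * x / z + cx
            y = (v - cy) * z / fy          # pinhole model: v = fy * y / z + cy
            points.append((x, y, z))
    return points


if __name__ == "__main__":
    tiny_depth = [[1.0, 0.0], [2.0, 2.0]]  # 2x2 depth map, one invalid pixel
    print(depth_map_to_points(tiny_depth, fx=1.0, fy=1.0, cx=0.0, cy=0.0))
```

Note that the number of output points depends on how many pixels carry a valid depth, so even this first step produces a variable-sized, unordered result.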

Figure 1: Different point cloud acquisition devices and use cases. (left) The structured light stereo system used to digitally model Michelangelo’s Florentine Pieta; (middle) LiDAR on Waymo’s self-driving car (source); (right) LiDAR on iPhone 12 Pro (source).

Probably the biggest source of point clouds in large-scale 3D modeling (e.g., cultural heritage sites) these days is photogrammetry, which is also based on trigonometry and leverages a variety of computer vision techniques, such as Structure from Motion, to get dense point clouds from images. Photogrammetry has grown rapidly in recent years thanks to 1) high-quality, high-resolution images available even from smartphone cameras, and 2) drones that allow complete sites and buildings to be modeled.

Sometimes a combination of different techniques is needed to obtain point clouds in complex scenes. In the Digital Elmina project, which reconstructs structures on the Ghana coast that are part of a UNESCO World Heritage Site, photogrammetry is the primary tool for obtaining point clouds. Laser scanning, however, is the only viable recording method in many scenes, such as rooms populated with bat colonies and rooms with whitewashed walls too reflective for photogrammetry.

Point Cloud Applications

Rendering and Modeling. Typically 3D objects are modeled using polygon meshes, and polygons are the rendering primitives in the graphics pipeline. However, representing all objects with point sampling allows easy mixing of objects in a scene without specialized algorithms for different geometry types. While it is possible to generate a mesh from a point cloud (through point set triangulation), why bother if we could treat points directly as the rendering primitives?

Using points as the rendering primitive, i.e., point-based graphics (PBG), goes back as far as the 1985 paper by Marc Levoy and Turner Whitted, and has been studied for decades. PBG is particularly useful in rendering real-world, large-scale scenes that are obtained from 3D scanning, e.g., cultural heritage sites and artifacts.

Historically, PBG didn’t quite take off, perhaps because the point-based rendering pipeline is incompatible with modern GPU hardware, which is optimized for mesh-based operations. However, PBG has been gaining momentum recently, primarily due to neural point-based rendering, where the rendering pipeline is essentially learned by a neural network. Thus, neural PBG can readily take advantage of modern GPUs and specialized deep learning hardware.

Depth Sensing. Point clouds provide quick, direct, and precise depth information, which is crucial for many emerging visual applications. Autonomous machines such as robots, Augmented Reality devices, and self-driving cars rely on depth to localize and navigate. Depth also powers computational photography tasks such as Auto Focus and portrait mode in high-end smartphones, especially at night when depth is hard to obtain using conventional cameras but is readily available from a LiDAR.

Perception. Point clouds augment the conventional camera perception for autonomous machines. Point cloud data provides another modality that helps the vehicle detect objects, generate environment maps, and localize itself (egomotion). Virtually all high-profile self-driving cars use LiDAR. Of course, it would be entertaining to see who outlasts whom, Elon Musk or LiDAR.

Scientific Computing. Many physics simulations in gaming and scientific computing are based on particle systems, which are essentially point clouds. Numerical methods (e.g., partial differential equation solvers) are moving toward meshfree approaches (e.g., smoothed-particle hydrodynamics, or SPH), which treat data points as physical particles: again, a point cloud.

Critically, while point cloud-based algorithms traditionally use “hand-crafted” features, they are increasingly moving towards features learned with deep learning in virtually all application domains, ranging from physics simulations to perception in autonomous vehicles to graphics.

Systems and Architectural Challenges

Today’s computer systems are well-optimized for processing images and videos. Point cloud algorithms challenge existing hardware systems due to their irregularities, which come from the fact that the compute and memory access patterns of point cloud applications are known only at runtime. In contrast, image and video processing can be statically compiled and scheduled — think of stencil computation (e.g., convolution).

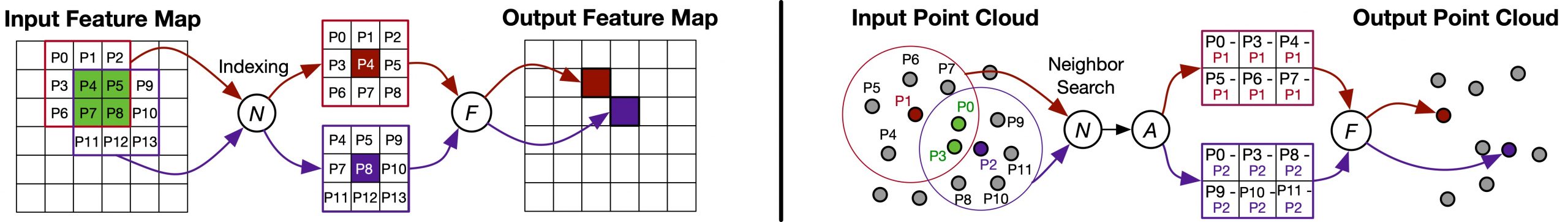

The main irregular kernel in point cloud applications is neighbor search, which exists in virtually all point cloud applications, whether they are based on DNNs or not. Why neighbor search? Intuitively, this is because the properties and behavior of a point, such as the normal of a point on a surface or the velocity of a point in a particle system, depend on its interactions with its neighbors. Point cloud applications usually operate iteratively on a small neighborhood of input points, similar to how a convolution layer in a CNN (and, more generally, a stencil operation) extracts local features of the input image through a sliding window, as shown in the figure below.

Figure 2: Comparing a stage in a conventional stencil pipeline (e.g., CNN) and a stage in a point cloud algorithm (source).
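The search-then-aggregate pattern of a point cloud stage can be sketched in a few lines. This is a deliberately naive illustration, assuming a brute-force k-nearest-neighbor search and a simple centroid as the per-neighborhood "feature"; the function names are hypothetical, and real pipelines typically use spatial data structures such as k-d trees and richer aggregation (e.g., normal estimation or a learned operator).

```python
import heapq
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def k_nearest(points: Sequence[Point], query_idx: int, k: int) -> List[int]:
    """Indices of the k points closest to points[query_idx] (brute force)."""
    query = points[query_idx]
    candidates = (i for i in range(len(points)) if i != query_idx)
    # Which indices come back depends entirely on the run-time coordinates.
    return heapq.nsmallest(k, candidates, key=lambda i: math.dist(points[i], query))


def neighborhood_centroid(points: Sequence[Point], idxs: Sequence[int]) -> Point:
    """Aggregate a neighborhood into one value (here, simply its centroid)."""
    n = len(idxs)
    return tuple(sum(points[i][axis] for i in idxs) / n for axis in range(3))


if __name__ == "__main__":
    cloud = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 2.0, 0.0), (5.0, 5.0, 5.0)]
    neighbors = k_nearest(cloud, query_idx=0, k=2)
    print(neighbors, neighborhood_centroid(cloud, neighbors))
```

The aggregation step cannot start until the search has produced its index list, which is where the contrast with a statically indexed stencil comes from.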

Critically, “neighbor search” in stencil pipelines amounts to indexing a fixed-size stencil/filter that is statically known; neighbor search in point cloud applications, however, depends on run-time information, hence the irregularity. Not only is the neighbor search itself irregular, but it also necessarily leads to irregularity in subsequent stages of the processing pipeline. For instance, the data reuse between two “neighborhoods” is known only at run time, in contrast to the fixed reuse that can be analyzed offline for stencil pipelines.
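The difference in data reuse can be shown with a toy comparison. The sketch below assumes a 1D stencil and a brute-force k-nearest-neighbor search, both chosen only for brevity; the helper names and the two small datasets are made up for illustration.

```python
import math
from typing import Sequence, Set, Tuple

Point = Tuple[float, float, float]


def stencil_window(center: int, radius: int) -> Set[int]:
    """Indices read by a 1D stencil: fixed offsets, independent of the data."""
    return set(range(center - radius, center + radius + 1))


def knn_indices(points: Sequence[Point], query_idx: int, k: int) -> Set[int]:
    """Indices read by a point cloud stage: determined by the coordinates."""
    others = (i for i in range(len(points)) if i != query_idx)
    ranked = sorted(others, key=lambda i: math.dist(points[i], points[query_idx]))
    return set(ranked[:k])


def knn_reuse(points: Sequence[Point], a: int, b: int, k: int) -> int:
    """Number of inputs shared by the neighborhoods of points a and b."""
    return len(knn_indices(points, a, k) & knn_indices(points, b, k))


# Adjacent stencil windows always share 2 * radius inputs, known offline.
stencil_reuse = len(stencil_window(10, 1) & stencil_window(11, 1))  # 2

# Same query indices and same k, but different coordinates.
shared_cloud = [(x, 0.0, 0.0) for x in (0.0, 1.0, 0.4, 0.6, 10.0)]
split_cloud = [(x, 0.0, 0.0) for x in (0.0, 1.0, -0.2, -0.3, 1.2, 1.3)]

reuse_shared = knn_reuse(shared_cloud, 0, 1, k=2)  # 2: both pick points 2 and 3
reuse_split = knn_reuse(split_cloud, 0, 1, k=2)    # 0: disjoint neighborhoods
```

A compiler can bake the stencil's reuse into a schedule or a line buffer ahead of time, whereas the point cloud's reuse has to be discovered, or speculated on, while the program runs.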

Open Questions

Recent results show that point cloud applications incur orders of magnitude more computation and memory accesses than conventional image/video processing (e.g., CNNs) at the same input resolution, calling for efficient systems and hardware support.

One might wonder, hasn’t neighbor search been extensively studied for decades? Yes, but the same goes for matrix multiplication and convolution, recent research on which is fueling the spread of DNN-based applications. The challenge, as always, lies in optimizing for end-to-end applications rather than (just) individual kernels.

Key research questions remain in this new space:

- To provide efficient hardware support for point cloud applications, should we augment existing GPU/DNN hardware or start from a clean slate?

- Can point cloud algorithms be regular by design?

- How do we design high-level synthesis (HLS) tools for point cloud applications, much like how accelerators for DNNs and image processing can now be automatically generated?

- Many emerging vision algorithms fuse sensory information from both the cameras and the LiDARs. How do we build systems to support both regular image/video algorithms and irregular point cloud algorithms?

About the Authors: Yuhao Zhu is an Assistant Professor of Computer Science at University of Rochester. His research group focuses on applications and computer systems for visual computing. Holly Rushmeier is the John C. Malone Professor of Computer Science at Yale University. Her main research interests are computer graphics and computational tools for cultural heritage.

Disclaimer: These posts are written by individual contributors to share their thoughts on the Computer Architecture Today blog for the benefit of the community. Any views or opinions represented in this blog are personal, belong solely to the blog author and do not represent those of ACM SIGARCH or its parent organization, ACM.