Processing-in-Memory (PIM) is a computing paradigm that aims to overcome the data movement bottleneck (i.e., the execution cycles and energy wasted on moving data back and forth between memory units and compute units) by making memory (and storage) systems compute-capable. Explored for several decades since the 1960s, PIM systems are now becoming a reality with the advent of the first commercial products and prototypes: several startups (e.g., UPMEM, Mythic) and major vendors (e.g., Samsung, SK Hynix, Micron, Alibaba) have presented real PIM chips and system prototypes in the past several years.

In 2023, we started a series of tutorials on real-world PIM systems, held at four major computer architecture conferences: ISCA, ASPLOS, MICRO, and HPCA. These tutorials highlighted the practical challenges and opportunities of PIM technologies and offered attendees hands-on experience with commercial and prototype systems. In the 2023 editions, attendees learned about and programmed a real PIM architecture, the UPMEM PIM system. During the hands-on part, we first introduced the UPMEM PIM architecture, its programming model, and its SDK. We then guided attendees through the software development cycle and performance profiling for different workloads, which showed how the performance of the UPMEM PIM system varies with software implementation decisions such as data types, thread-level parallelism, scratchpad management, and synchronization primitives. A unique aspect of these events was their strong engagement with both academia and industry, featuring invited talks from leading researchers, engineers, and startups that have been driving innovation in PIM. To broaden participation, all our PIM tutorials have been livestreamed on YouTube (recordings can be found here for the ISCA 2023 edition, here for the ASPLOS 2023 edition, here for the MICRO 2023 edition, and here for the HPCA 2023 edition).

In 2024, we revamped our PIM tutorials to focus more on (1) educating the community on the basics and adoption challenges of PIM, including a historical view of PIM systems, (2) academic efforts toward novel PIM systems, and (3) solutions that improve commercial PIM architectures. The 2024 editions of our PIM tutorial were heavily based on the agenda of our PIM course at ETH Zurich, which is offered (almost) every semester (materials can be found here for the Spring 2021 semester, here for the Fall 2021 semester, here for the Spring 2022 semester, here for the Fall 2022 semester, here for the Spring 2023 semester, and here for the Fall 2024 semester). The course gives bachelor’s, master’s, and even PhD students the opportunity to work closely on hands-on research projects on PIM. The tutorials took place at HEART 2024 (YouTube recording can be found here), ISCA 2024 (YouTube recording can be found here), and MICRO 2024 (YouTube recording can be found here), and consisted of lectures taught by academic researchers as well as invited talks from academia and industry.

The MICRO 2024 edition of our PIM tutorial drew one of our largest audiences, with roughly 60 people attending in person at any given time and many more following our YouTube livestream. We covered a wide range of PIM topics, including PIM taxonomy, processing-using-DRAM and processing-near-DRAM architectures, infrastructures for PIM research, and storage-centric computing for important workloads such as genomics and metagenomics. Among these topics, we were particularly excited to share our past and current research on processing-using-DRAM architectures, spanning both theoretical and experimental studies. Processing-using-DRAM architectures leverage the analog operating principles of DRAM cells and DRAM circuitry to implement different operations. On the theoretical side, our previous works have shown that DRAM chips (1) can implement a wide range of operations, including data copy & initialization [1], bulk-bitwise Boolean [2] & arithmetic operations [3], data reorganization [4], and true random number generation [5, 6], and (2) can operate as very wide single-instruction multiple-data (SIMD) [3] and multiple-instruction multiple-data (MIMD) engines [7]. During our tutorial, we discussed the system integration challenges that processing-using-DRAM architectures face, including our current solutions to the challenges of code generation and data allocation/alignment [7]. On the experimental side, fascinatingly, many of these operations can already be performed in real, unmodified commercial off-the-shelf (COTS) DRAM chips by violating manufacturer-recommended DRAM timing parameters.
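
To make the "very wide SIMD" and "arithmetic operations" claims more concrete, here is a minimal functional sketch in Python. It assumes a vertical (bit-sliced) data layout, in which each DRAM row holds the same bit position of many elements, and builds a full adder from only bitwise majority (MAJ) and NOT, in the spirit of the bit-serial designs referenced above [3]. The MAJ/NOT adder decomposition, `ROW_BITS`, and all helper names are our illustrative assumptions, not the exact operation sequence of any published design; rows are plain Python integers, and no DRAM timing, charge sharing, or error behavior is modeled.

```python
# Functional model (assumption, not a real DRAM interface) of bit-serial,
# vertically laid-out SIMD addition using only bulk MAJ and NOT over "rows".
# Each row is a Python int whose bit i holds one bit of element i.
# ROW_BITS is a hypothetical row width; real DRAM rows are far wider.

ROW_BITS = 8
MASK = (1 << ROW_BITS) - 1


def maj(a: int, b: int, c: int) -> int:
    """Bitwise 3-input majority across whole rows."""
    return (a & b) | (a & c) | (b & c)


def bnot(a: int) -> int:
    """Bitwise NOT of a row, kept within the row width."""
    return ~a & MASK


def transpose_to_rows(values: list[int], nbits: int) -> list[int]:
    """Vertical layout: row k holds bit k of every element (element i -> column i)."""
    rows = []
    for k in range(nbits):
        row = 0
        for i, v in enumerate(values):
            row |= ((v >> k) & 1) << i
        rows.append(row)
    return rows


def transpose_from_rows(rows: list[int], count: int) -> list[int]:
    """Inverse of transpose_to_rows: rebuild each element from its column."""
    return [sum(((row >> i) & 1) << k for k, row in enumerate(rows)) for i in range(count)]


def simd_add(a_rows: list[int], b_rows: list[int]) -> list[int]:
    """Add all columns in parallel, one bit position per step (ripple carry).

    Full adder built from MAJ and NOT only:
      carry_out = MAJ(a, b, c)
      sum       = MAJ(NOT carry_out, c, MAJ(a, b, NOT c))
    The final carry is dropped, so results wrap modulo 2**nbits.
    """
    carry = 0  # all-zero row: no carry into the least significant bit
    out = []
    for a, b in zip(a_rows, b_rows):
        c_out = maj(a, b, carry)
        s = maj(bnot(c_out), carry, maj(a, b, bnot(carry)))
        out.append(s)
        carry = c_out
    return out


if __name__ == "__main__":
    xs = [3, 7, 100, 255, 0, 42, 128, 1]
    ys = [4, 9, 27, 1, 0, 58, 128, 254]
    rows = simd_add(transpose_to_rows(xs, 8), transpose_to_rows(ys, 8))
    print(transpose_from_rows(rows, len(xs)))  # [7, 16, 127, 0, 0, 100, 0, 255]
```

The number of row-wide steps grows with the element bit width (here 8 iterations), not with the number of elements, which is why a row thousands of bits wide behaves like a very wide SIMD unit.
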
During the MICRO 2024 tutorial, we discussed recent works showing that COTS DRAM chips can perform (1) data copy and initialization [8, 9, 10], (2) three-input bitwise MAJ and two-input AND & OR operations [9, 11, 12], (3) the bitwise NOT operation [11], (4) bitwise AND, NAND, OR, & NOR operations with up to 16 inputs [11], and (5) true random number generation & physical unclonable functions [5, 6, 13]. Our recent invited paper in the AI Memory focus session of IEDM 2024, “Memory-Centric Computing: Recent Advances in Processing-in-DRAM,” summarizes current theoretical and experimental developments in processing-using-DRAM architectures. We were also happy to host two invited talks at our MICRO 2024 tutorial: Dr. Brian C. Schwedock (Samsung) talked about architectures and programming models for general-purpose near-data computing, and Dr. Christina Giannoula (University of Toronto) talked about her work on system software and libraries for sparse computational kernels in PIM architectures.
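
The link between three-input MAJ and two-input AND & OR is a simple Boolean identity: if one of the three operand rows is preset to all 0s, the majority reduces to AND; if it is preset to all 1s, it reduces to OR. The Python sketch below shows only this logical relationship; `ROW_BITS`, `bulk_and`, and `bulk_or` are hypothetical names, rows are modeled as integers, and the analog behavior of simultaneously activating DRAM rows (charge sharing, timing violations, bit errors) is not modeled.

```python
# Logical model (assumption): bulk AND/OR obtained from a 3-input bitwise
# majority by fixing the third operand row to a constant. Rows are Python ints.

ROW_BITS = 16  # hypothetical row width
ALL_ZEROS = 0
ALL_ONES = (1 << ROW_BITS) - 1


def maj(a: int, b: int, c: int) -> int:
    """Bitwise 3-input majority: each output bit is 1 if at least two inputs are 1."""
    return (a & b) | (b & c) | (a & c)


def bulk_and(a: int, b: int) -> int:
    # MAJ(a, b, 0): an output bit is 1 only when both a and b are 1.
    return maj(a, b, ALL_ZEROS)


def bulk_or(a: int, b: int) -> int:
    # MAJ(a, b, 1): an output bit is 1 when a or b (or both) is 1.
    return maj(a, b, ALL_ONES)


if __name__ == "__main__":
    a = 0b1100_1010_1111_0000
    b = 0b1010_0110_0101_1111
    print(f"{bulk_and(a, b):016b}")  # 1000001001010000
    print(f"{bulk_or(a, b):016b}")   # 1110111011111111
```

Because one constant row turns the same majority primitive into either AND or OR, a design only needs to reserve a few rows preset to all 0s and all 1s rather than add separate logic for each operation.
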

Figure 1: A photograph from the well-attended PIM tutorial at MICRO 2024.

The next edition of our PIM tutorial will take place as a half-day tutorial on March 1st, 2025, at PPoPP (in Las Vegas, co-located with HPCA and CGO). This edition will focus on the programmability of both academic and industrial PIM architectures.

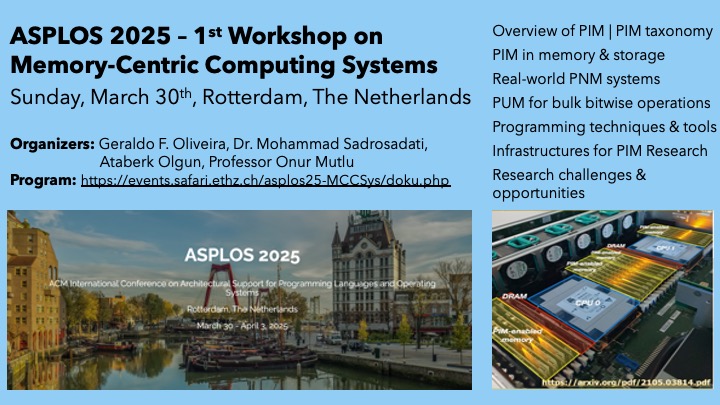

We are also excited to share that we are organizing a new PIM workshop to broaden participation and enhance scientific discourse around PIM systems: the 1st Workshop on Memory-Centric Computing Systems at ASPLOS 2025. This combined tutorial and workshop will focus on the latest advances in PIM technology, spanning both hardware and software. We invite the broad PIM research community to submit and present their ongoing work on memory-centric systems. We aim to include invited talks from the broad community that bring new insights on memory-centric systems or novel PIM-friendly applications, address key system integration challenges in academic or industrial PIM architectures, outline design and process challenges affecting PIM systems, or present new PIM architectures or system solutions for state-of-the-art PIM devices. Slots for invited talks are limited. If you would like to give such a talk, please contact us via our workshop website by submitting a short extended abstract of your talk by February 15, 2025, 23:59 AoE.

Figure 2: First workshop on memory-centric computing systems to be held with ASPLOS 2025.

PIM is a paradigm that can enable significant benefits in performance, energy, and sustainability, but it comes with many adoption challenges. Overcoming them requires substantial effort and rethinking across a computing stack that has long been dominated by processor-centric thinking. We invite the community (both academic and industrial) to join forces to enable the PIM paradigm. Our updated paper “A Modern Primer on Processing in Memory” provides an overview of PIM research and discusses many open challenges in the area, and we warmly welcome feedback from the community.

About the Authors:

Geraldo F. Oliveira is a soon-to-graduate Ph.D. candidate at ETH Zürich, working with Prof. Onur Mutlu. His current broader research interests are in computer architecture and systems, focusing on memory-centric architectures for high-performance and energy-efficient systems.

Juan Gómez-Luna is a Senior Research Scientist at NVIDIA Research. His research interests include GPU and heterogeneous computing, processing-in-memory, memory and storage systems, and the hardware and software acceleration of medical imaging and bioinformatics.

Onur Mutlu is a Full Professor of Computer Science at ETH Zurich. His current broader research interests are in computer architecture, systems, hardware security, and bioinformatics, with a special focus on memory & storage systems and emerging technologies.

Disclaimer: These posts are written by individual contributors to share their thoughts on the Computer Architecture Today blog for the benefit of the community. Any views or opinions represented in this blog are personal, belong solely to the blog author and do not represent those of ACM SIGARCH or its parent organization, ACM.