Recent progress in quantum computer (QC) systems has been impressive — state-of-the-art devices offer increasing memory size (qubit count) and reliability (decoherence time). A most recent example is from IBM’s announcement on their six new superconducting QC devices with record computational power (of quantum volume 32). To realize that power, much attention needs to be paid in the quantum compiling process, if we want the qubits to perform well – e.g., to compute with high success probability and low resource cost. As such, it is not uncommon that we re-think about the architectural design choices we made for classical computers, under the unique constraints in quantum computer systems. In this blog, we illustrate how re-thinking about memory has led to interesting new research directions across the systems stack.

Think Quantumly About Memory

Quantum memory management is critical to any quantum computer system where the quantum program (sequence of quantum logic gates and measurements) requires the bulk of resources from the system, e.g., demanding nearly all of the qubits available. This problem is pervasive in quantum compiling, since typical quantum algorithms require a significant number of qubits and gates, as they usually make use of a key component called quantum oracles, which implements heavy arithmetic with extensive usage of ancilla qubits (scratch memory).

Much like a classical memory manager, the goal of a quantum memory manager is to allocate and free portions of quantum memory (qubits) for computation dynamically. But, unlike a classical memory manager, its quantum counterpart must respect a few characteristics of quantum memory, such as:

- Data has limited lifetime, as qubits can decohere spontaneously.

- Data cannot be copied in general, due to the quantum no-cloning theorem.

- Reading data (measurements) or incoherence noises on some qubits can permanently alter their data, as well as data on other qubits entangled with them.

- Data processing (computation) is performed directly in memory, and quantum logic gates can be reversed by applying their inverses.

- Data locality matters, as two-qubit gates are accomplished by interacting the operand qubits.

These characteristics of quantum memory profoundly influence the design of quantum computer architectures in general, and complicate the implementation of a memory manager.

A Special Type of Shared State

One of the fundamental limitations of a quantum computer system is the inability to make copies of an arbitrary qubit. This is called the no-cloning theorem due to Wootters and Zurek. In classical computing, we are used to making shared state of data when designing and programming algorithms. The no-cloning limitation prevents us from directly implementing a quantum analog of the classical memory hierarchy, as caches require making copies of data. Hence, current quantum computer architecture proposals follow the general principles that transformations are applied directly to quantum memory, and data in memory are moved but not copied. However, we are allowed to make an entangled copy of a qubit. This type of shared state has the special property that the state of one part of the memory system cannot be fully described without considering the other part(s). Measurements (Reads) on such systems typically result in highly correlated outcomes. As such, reads and writes on entangled states must be handled with care. In classical memory systems, reads and writes must follow models of cache coherence and memory consistency to ensure correctness on shared states. In a quantum system, we can no longer easily read from or write to the quantum memory, as read is done through measurements (which likely alter the data) and write generally requires complex state preparation routines.

Garbage Collection Done Strategically

Relying on programmers to keep track of all usage of qubits is hardly scalable nor efficient. So design automation plays a crucial role, in order to make programming manageable and algorithms practical. Several techniques have been developed to save the number of qubits used by quantum programs. The effectiveness of memory manager can impact the performance of programs significantly; a good memory manager fulfills the allocation requests of a high-level quantum circuit by locating and reusing qubits from a highly constrained pool of memory.

When a program requires new allocations of qubits, the memory manager faces a decision: whether to assign brand-new unused qubits or to reuse reclaimed qubits. It may seem that reusing reclaimed qubits whenever available is the most economical strategy, for it minimizes the number of qubits used by a program. However, qubit reuse could potentially reduce program parallelism. Operations that could have been performed in parallel are now forced to be scheduled after the last usage of the reclaimed qubits. This additional data dependency could potentially lengthen the overall time to complete the program. Hardware constraints, such as reliability and locality, can also impact the allocation decisions. Some qubits might be more reliable than the others. It could be beneficial to prioritize qubits that are more reliable and balance the workload on each qubit. Some qubits might be closer than the others. Multi-qubit operations performed on distant qubits can therefore induce communication overhead. Ideally, an efficient qubit allocator must make decisions based on program structures and hardware constraints and reuse qubits discretely.

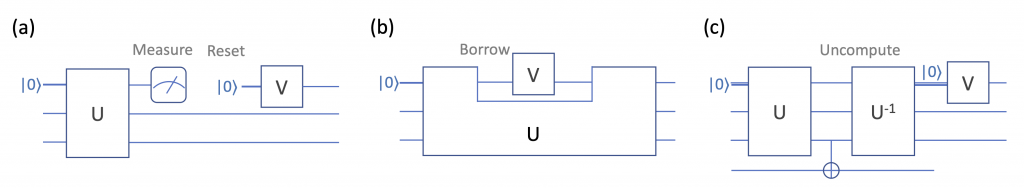

Quantum circuit diagrams for three qubit reuse techniques: (a) measurement-and-reset, (b) qubit borrowing, and (c) uncomputation.

- Measurement and Reset — When some qubits are disentangled from the data qubits, we can directly reclaim those qubits by performing a measurement and reset. We can save the number of qubits, by moving measurements to as early as possible in the program, so early that we can reuse the same qubits after measurement for other computation. Prior art has extensively studied this problem and proposed algorithms for discovering such opportunities. This measurement-and-reset (M&R) approach has limitations. First, today’s NISQ hardware does not yet support fast qubit reset, so reusing qubits after measurement could be costly or, in many cases, unfeasible. The state-of-the-art technique for resetting a qubit on a NISQ architecture is by waiting long enough for qubit decoherence to happen naturally, typically on the order of milliseconds for superconducting machines, significantly longer than the average gate time around several nanoseconds. Fault-tolerant (FT) architectures have much lower measurement over- head (that is roughly the same as that of a single gate operation), and thus are more amenable to the M&R approach. Second, qubit rewiring works if measurements can be done early in a program, which may be rare in quantum algorithms—measurements are absent in many program (such as arithmetic subroutines) or only present somewhere deep in the circuit. Unlike the uncomputation approach, M&R does not actively create qubit reuse opportunities.

- Qubit Borrowing — Another strategy for reusing qubits involve temporarily borrowing a qubit for computation and return the qubit to its original state when completed. This technique is sometimes called the “dirty borrowing” of qubits, because the qubits we borrow can be in an arbitrary unknown quantum state; this is to be contrasted with the uncomputation technique we will introduce next, in which the qubits to reuse are always clean ancilla (i.e., qubits initialized to a known state such as 0). Dirty borrowing opportunities depends highly on the structures in quantum circuits; the reason is two-fold. First, we need to return the borrowed qubits to their original states timely, otherwise the original computation has to be stalled. Second, computation of the borrowing circuit is restricted — as it performs computation on borrowed qubit without knowing its exact state. For example, one typically cannot perform measurements on the borrowed qubits, due to their entanglement with other qubits. This technique has been applied to speed up implementations of arithmetic circuits.

- Uncomputation — is to recycle ancilla qubits for future reuse through a process called “uncomputation”. This can be thought of as analogous to the concept of garbage collection in classical computing. Reclamation comes with a gate cost, as it is accomplished by first storing the output to a safe space and then undoing part of a computation so as to reset the ancilla qubits to their initial value and remove the entanglement relationship with the output qubits. It is critical to perform this uncomputation step, otherwise directly reusing ancilla could also change the value stored in the output qubits. Identifying the appropriate points for reclamation is not an easy task, especially for programs with hierarchical structures. Finding the optimal strategy for programs with general data dependency graphs are shown to be PSPACE complete. The reversible pebbling game strategy demonstrates that uncomputation gate cost can be reduced if a divide and conquer approach is used. In a more realistic setting, a heuristic approach strategically fulfills qubit allocation and reclamation, taking into account information such as program structures, data locality, and qubit/gate noise.

To Learn More about Systems Research in QC:

Our new synthesis book Quantum Computer Systems introduces the general principles in designing practical quantum computing systems, while emphasizing the research opportunities and challenges for Noisy Intermediate-Scale Quantum (NISQ) computers.

About the Authors:

Fred Chong is the Seymour Goodman Professor of Computer Architecture at the University of Chicago. He is the Lead Principal Investigator of the EPiQC Project (Enabling Practical-scale Quantum Computation), an NSF Expedition in Computing and a member of the STAQ Project.

Yongshan Ding is a 4th-year graduate student on the EPiQC Project advised by Fred Chong. He is co-author (with Fred Chong) of the Synthesis Lecture in Computer Architecture — Quantum Computer Systems: Research for Noisy-Intermediate Scale Quantum Computers. He will be on the academic job market this year.

Many ideas from this blog stem from conversations with the rest of the EPiQC team: Ken Brown, Ike Chuang, Diana Franklin, Danielle Harlow, Aram Harrow, Andrew Houck, Margaret Martonosi, John Reppy, David Schuster, and Peter Shor.

Disclaimer: These posts are written by individual contributors to share their thoughts on the Computer Architecture Today blog for the benefit of the community. Any views or opinions represented in this blog are personal, belong solely to the blog author and do not represent those of ACM SIGARCH or its parent organization, ACM.