TL;DR: Heterogeneous hardware innovation is held back by language support. Compiler researchers need to rethink their role and embrace ideas from Software Engineering, Natural Language Processing and Machine Learning.

A 50-year contract

For more than 50 years we have enjoyed exponentially increasing computer power. This has transformed society, heralding today’s computer age.

This growth is based on a fundamental contract between hardware and software that, until recently, has rarely been questioned. The contract is: hardware may change radically “under the hood”, but the code you ran on yesterday’s machine will run just the same on tomorrow’s – but even faster. Hardware may change, but it looks the same to software, always speaking the same language. This common consistent language, the ISA, allows the decoupling of software development from hardware development. It has allowed programmers to invest significant effort in software development, secure in the knowledge that it will have decades of use. This contract is beginning to fall apart, putting in jeopardy the massive investment in software.

The reason for the breakdown is well known – the end of Moore’s Law, which is forcing new approaches to computer design. The cost of maintaining the ISA contract is enormous. If we break the contract and develop specialized hardware, massive performance gains are available. For this reason, it is clear that in future, hardware has to be increasingly specialized and heterogeneous. Currently, however, there is no clear way of programming and using such hardware.

As it stands, either hardware evolution will stall because software cannot adapt to it, or software will be unable to exploit hardware innovation. Such a deadlock requires a fundamental re-think of how we design, program and use heterogeneous systems. What we need is an approach that liberates hardware from the ISA contract and efficiently connects existing and future software to the emerging heterogeneous landscape.

What about DSLs?

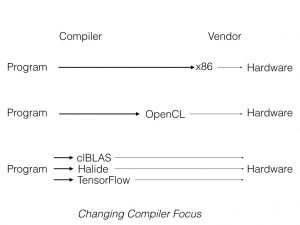

These observations have been made by many and there has been a blizzard of activity to tackle this. One historically typical response to hardware paradigm shifts is: invent a new language! Indeed, one of the most popular approaches has been to develop new domain-specific languages (DSLs). They promise to better exploit application knowledge: directly connecting users to hardware, bypassing the ISA. OpenCL is a great example of this. Hardware vendors can regularly change GPU ISAs under the hood without affecting user code – OpenCL is the new contract.

While new languages are attractive in the short term, they mean developers have to rewrite their code. With the proliferation of new languages, clearly not all will survive. There is no guarantee that a language will be supported on future hardware. This uncertainty undermines software investment. The DSL approach means that new hardware vendors must either develop a new DSL and convince people to use it, or port existing ones that don’t quite match, limiting innovation.

We have a chicken-and-egg problem: compilers for new programming languages don’t exist for non-existent hardware, and new hardware is not developed because there’s no easy way to use and program it. It is this software gap that limits hardware progress.

APIs and DSLs are the new ISA

What we need is a mechanism to match new hardware to existing (and future) software automatically, without having to develop a new compiler and language every time. Ideally, given just the signature of a hardware API, we need to work out what it does, match it to existing software and then replace the matched code with a call to the API. This should be as automatic as possible for a wide domain of APIs. If we can do this, then we no longer care about the hardware ISA – the API is the new ISA. Similarly, if the new specialized hardware is instead programmed with a DSL, what we need is the ability to automatically translate existing code to the new DSL – automating programming.
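To make the "work out what it does, match, replace" idea concrete, here is a minimal sketch in Python. It is an illustration under strong assumptions, not a description of any real tool: `accel_op` stands in for a hypothetical accelerator API whose behavior we can only observe by calling it, the three kernels stand in for functions found in an existing code base, and all names are invented for this example. Matching is done purely by comparing outputs on random inputs, which gives evidence of equivalence rather than proof.

```python
import random
from typing import Callable, Dict, List

Vector = List[float]
Kernel = Callable[[Vector, Vector], float]


def accel_op(a: Vector, b: Vector) -> float:
    """Hypothetical accelerator API. We pretend only its signature is known."""
    return sum(x * y for x, y in zip(a, b))


# Hypothetical kernels from an existing code base with the same signature.
def dot_loop(a: Vector, b: Vector) -> float:
    total = 0.0
    for i in range(len(a)):
        total += a[i] * b[i]
    return total


def sum_of_sums(a: Vector, b: Vector) -> float:
    return sum(a) + sum(b)


def max_abs_diff(a: Vector, b: Vector) -> float:
    return max(abs(x - y) for x, y in zip(a, b))


KERNELS: Dict[str, Kernel] = {
    "dot_loop": dot_loop,
    "sum_of_sums": sum_of_sums,
    "max_abs_diff": max_abs_diff,
}


def behaves_like(api: Kernel, kernel: Kernel, trials: int = 50,
                 tol: float = 1e-9, seed: int = 0) -> bool:
    """Compare the API and a kernel on random inputs (evidence, not proof)."""
    rng = random.Random(seed)
    for _ in range(trials):
        n = rng.randint(1, 16)
        a = [rng.uniform(-10.0, 10.0) for _ in range(n)]
        b = [rng.uniform(-10.0, 10.0) for _ in range(n)]
        expected = kernel(a, b)
        observed = api(a, b)
        # Relative tolerance absorbs floating-point reordering differences.
        if abs(observed - expected) > tol * max(1.0, abs(expected)):
            return False
    return True


def match_api(api: Kernel, kernels: Dict[str, Kernel]) -> List[str]:
    """Names of the kernels whose observed behavior agrees with the API."""
    return [name for name, k in kernels.items() if behaves_like(api, k)]


def offload(kernels: Dict[str, Kernel], api: Kernel) -> Dict[str, Kernel]:
    """Return a new kernel table with every matched kernel replaced by the API."""
    matched = set(match_api(api, kernels))
    return {name: (api if name in matched else k) for name, k in kernels.items()}
```

Calling `match_api(accel_op, KERNELS)` selects only `dot_loop`, and `offload(KERNELS, accel_op)` returns a table in which that entry now points at the accelerator call while the other kernels are untouched. A real system would have to find candidate code regions inside larger programs and would need stronger guarantees than random testing before replacing anything.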

Once we have the ability to match code to heterogeneous hardware, we can now invert the problem and ask: how would a new proposed heterogeneous design fit existing or future programs? If we can do this, then hardware vendors are free to explore new hardware, because they know it will fit with existing code, and programmers can continue to invest because they know it will work on future hardware.

For this to work, we need to change our mindsets as compiler writers to be more agile. We need to move from a very fixed world where we invest time in hand-developing tools to one where there is constant change requiring automation. Instead of translating general-purpose languages down to a single narrow, fixed, low-level ISA, we match many and varying high-level APIs and DSLs to existing code automatically. In fact, rather than asking programmers to rewrite their code in a new DSL or use an API, this is now the compiler writers’ job. We move from a place of code generation to program generation. In a sense, for compiler writers, APIs and DSLs are our new ISA.

Magical thinking – looking beyond

It is one thing to have ambition – but can we deliver or is this just magical thinking?

This undoubtedly creates an enormous challenge for compiler writers and we may fail to deliver. However, help is available elsewhere. If we look to the software engineering community, there is a large body of work concerned with refactoring code and mapping it to new APIs. Here they leverage ideas from natural language processing, which allow unsupervised translation between different languages, to automatically upgrade software. This relies on modeling the structure of large corpora of programs. In fact, the rise of Big Code to match Big Data means that we can think about using machine learning to learn how to translate code from one setting to another. Developments elsewhere in programming-by-example, program synthesis and neural synthesis mean that we can learn what new hardware does from its behavior or even from text descriptions, allowing existing code to be migrated to it automatically.

The challenge for our community is to take diverse ideas from a wide range of settings and make them work for real systems. Demand for performance in the 50s led to automatic coding with the Fortran compiler; maybe with the rise of heterogeneity we will see our focus change to automatic programming?

Opportunity Knocks!

This is an exciting time for compiler researchers. We need to rethink how we connect software and hardware through a more flexible language interface which can change from one processor to the next. This will allow existing software to use future hardware and allow hardware innovation to connect to new and emerging applications. If we rethink our role and look beyond our community, we will help usher in an era of change in systems design where, rather than deny and fear the end of Moore’s Law, we embrace and exploit it.

About the Author: Michael O’Boyle is a Professor of Computer Science at the University of Edinburgh. He is an EPSRC Established Career Fellow and a founding partner of the HiPEAC network. He is director of the ARM Research Centre of Excellence in Heterogeneous Computing at Edinburgh.

Disclaimer: These posts are written by individual contributors to share their thoughts on the Computer Architecture Today blog for the benefit of the community. Any views or opinions represented in this blog are personal, belong solely to the blog author and do not represent those of ACM SIGARCH or its parent organization, ACM.