
Quantum Computers (QCs), once thought of as an elusive theoretical concept, are emerging. Today’s quantum devices, however, are still small prototypes since their computing infrastructure is in its early stages. Recent industry roadmaps have started to propose a modular approach to scaling these devices. In this article, we focus on superconducting technology and discuss the factors that favor this approach. We highlight how some of these factors differ between quantum and classical settings, and we will see that bandwidth and fidelity between quantum chips are much more comparable to those within chips than we would expect.

Background

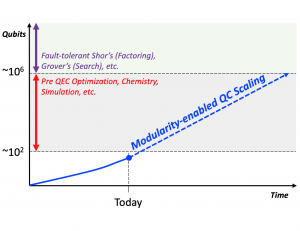

Many technology platforms, especially those built from superconducting devices, are starting to show similar trends as researchers search for architectures that are favorable for QC scaling. While we are approaching the system thresholds required for near-term quantum optimization, chemistry, and simulation (Fig. 1), much work is needed to create QCs at the scale required for quantum error correction, which encodes logical quantum bits (qubits) in many physical qubits.

| Fig. 1: Qubits vs. time showing qubit thresholds for critical quantum computing milestones. |

Observations from the Quantum Industry

The size of today’s quantum hardware limits the amount of information that can be stored, and this limited capacity during information processing is a leading reason that existing QCs remain experimental rather than practical devices. There have been preliminary demonstrations of quantum advantage, but more qubits are required for quantum computation with impact. Major quantum industry players including IBM, Rigetti, Intel, and Quantinuum are establishing long-term plans to expand quantum research and development. Growing quantum initiatives have produced numerous technology roadmaps that feature a common theme for future quantum architectures: modularity.

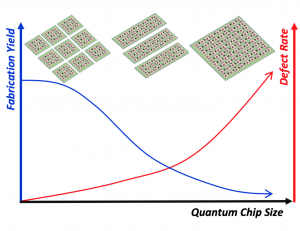

| Fig. 2: Scaling trends shown as fabrication yield and defect rate vs. quantum chip size. |

Challenges with Building Bigger Quantum Systems

Many challenges prevent today’s physical qubits from reaching the scale necessary for meaningful quantum computation. To start, an obvious roadblock is that more qubits need a larger host substrate. Unfortunately, as monolithic QCs expand in footprint, they require greater design investment, higher material costs, and increased verification complexity. Even if these problems were overcome, and a sea of qubits were available for processing, the number of qubits on-chip is not the primary feature that determines QC compute power. Today’s qubits commonly feature high gate error and limited compute windows that hinder QC performance. For QCs to be useful, new solutions are needed to achieve a higher quantity of quality qubits.

Qubit quality issues often trace to the same source. QC fabrication is imperfect, and unexpected device variation resulting from processing mistakes introduces unfavorable QC properties that compromise computation. Fabrication procedures must closely follow targeted QC design specifications for QCs to operate as intended. Unfortunately, the tools used to manufacture quantum devices have limited precision at the microscopic scale, making each physical qubit unique in the amount it varies from its ideal properties. Imprecision associated with fabrication, specifically in superconducting devices, prevents the fabrication of perfectly identical qubits and QCs, critically impacting reliability. Further, as chip area increases, fabrication yields decrease since each QC has a greater chance of a fabrication error, or defect, that severely degrades performance or renders the quantum chip unusable. Yield and defect rate trends for quantum chips are featured in Fig. 2.
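The relationship between chip size and yield can be made concrete with a deliberately simple model. The sketch below is not the model behind Fig. 2; it assumes that every qubit fails independently with the same probability, that a chip is usable only if it has no defective qubits, and it ignores assembly and interconnect losses. The function names and the 1% defect probability are hypothetical values chosen for illustration.

```python
def chip_yield(n_qubits: int, p_defect: float) -> float:
    """Probability that a chip with n_qubits contains no defective qubit.

    Assumes independent, identically distributed per-qubit defects
    (an illustrative simplification, not a measured fabrication model).
    """
    return (1.0 - p_defect) ** n_qubits


def qubits_fabricated_per_good_system(
    total_qubits: int, chip_qubits: int, p_defect: float
) -> float:
    """Expected qubits fabricated to assemble one defect-free system.

    The system is built from chips of chip_qubits qubits each; defective
    chips are discarded and only defect-free chips are integrated.
    """
    if total_qubits % chip_qubits != 0:
        raise ValueError("total_qubits must be a multiple of chip_qubits")
    chips_needed = total_qubits // chip_qubits
    # On average, chips_needed / yield chips must be made to get chips_needed good ones.
    expected_chips = chips_needed / chip_yield(chip_qubits, p_defect)
    return expected_chips * chip_qubits


P_DEFECT = 0.01  # hypothetical per-qubit defect probability

monolithic_cost = qubits_fabricated_per_good_system(100, 100, P_DEFECT)
chiplet_cost = qubits_fabricated_per_good_system(100, 10, P_DEFECT)

print(f"yield of a 100-qubit chip: {chip_yield(100, P_DEFECT):.3f}")
print(f"yield of a 10-qubit chip:  {chip_yield(10, P_DEFECT):.3f}")
print(f"qubits fabricated per good 100-qubit system (monolithic): {monolithic_cost:.0f}")
print(f"qubits fabricated per good 100-qubit system (chiplets):   {chiplet_cost:.0f}")
```

Under these assumptions, yield falls exponentially with qubit count, so a single large chip wastes far more fabricated qubits per working system than the same capacity split into smaller chips.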

The number of qubits per device on today’s QCs is limited because monolithic chip size and total defects on-chip are directly related. Even if a chip contains qubits that all meet baseline criteria in terms of fidelity, the physical differences among today’s qubits inject well-documented inter- and intra-chip variation in performance during runtime. For quantum computing to be viable, more and higher-quality qubits are necessary. Further, cross-chip processing must produce uniform outcomes, regardless of the qubits used during computation.

Lessons Learned from Classical Computation

Scaling challenges associated with building a single system on a chip (SoC), especially those related to device fabrication, are not new. For example, while classical monolithic electronics have benefits that stem from the simplicity of being entirely self-contained, larger SoCs come at the expense of reduced yield and increased fabrication costs. Additionally, since monolithic devices must be constructed with the same processing techniques and materials, they demonstrate greater inflexibility in terms of hardware customization and specialization, which limits domain-specific acceleration. Fortunately, chiplet-based architectures have been shown to alleviate some of these problems. In a chiplet design, multiple smaller and locally-connected chips replace a larger, monolithic SoC.

Chiplet architectures are often referred to as multi-chip modules (MCMs), and their benefits include reduced design complexity, lower fabrication costs, higher yields, greater isolation of failures, and more flexibility for customization as compared to monolithic counterparts. Chiplet-based classical computation offers many advantages that promote system scaling. However, simplified scaling does not come for free. Communication bottlenecks, often in the form of latency and error in the link hardware that unifies chiplets, must be properly evaluated as a tradeoff for the reduced design and resource costs. Because of higher-cost linking, MCMs sometimes demonstrate performance loss as compared to their monolithic counterparts, but the benefits associated with modularity, especially in terms of device yields, are often overwhelmingly worth the cost. Further, proper hardware utilization via software can enable intelligent program mapping, scheduling, and multithreading that minimizes the use of the more expensive link hardware. Because of modularity-enabled advances in classical computer architecture, QC designers are motivated to pursue modular design for quantum scaling.
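To illustrate how mapping affects link usage, the following sketch counts how many two-operand operations cross a chiplet boundary under a given placement. It is a toy cost metric, not a real mapper or scheduler; the function name, the operation list, and both placements are hypothetical.

```python
from typing import Dict, List, Tuple


def count_cross_chip_ops(
    ops: List[Tuple[int, int]], placement: Dict[int, int]
) -> int:
    """Count operations whose two operands sit on different chiplets.

    ops:       pairs of unit ids that must communicate
    placement: maps each unit id to the chiplet that hosts it
    """
    return sum(1 for a, b in ops if placement[a] != placement[b])


# Hypothetical workload: four units, four pairwise operations.
ops = [(0, 1), (1, 2), (2, 3), (0, 1)]

# Placement that keeps frequently interacting units on the same chiplet.
clustered = {0: 0, 1: 0, 2: 1, 3: 1}
# Placement that alternates units between the two chiplets.
interleaved = {0: 0, 1: 1, 2: 0, 3: 1}

print("clustered placement, link uses:  ", count_cross_chip_ops(ops, clustered))
print("interleaved placement, link uses:", count_cross_chip_ops(ops, interleaved))
```

A mapping tool would search over placements to reduce this count, weighting each crossing by the latency and error of the link it uses.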

Scaling Quantum Computers via Modular Design

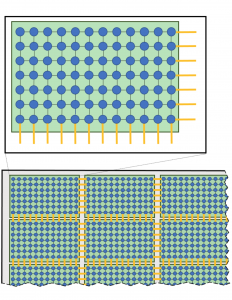

Quantum compute demand is increasing as the quantum industry moves toward QCs as a commodity cloud service. Unfortunately, the continued scaling of QCs with monolithic architectures will constrict device yield and performance. Modular architectures based on quantum chiplets present a potential solution, and this observation has caused an uptick in proposals calling for quantum MCMs (Fig. 3). Maximizing the benefits of quantum modularity requires tools capable of guiding the modular implementation of multi-core QCs, providing methodologies for evaluating the design space tradeoffs in yield, chiplet selection for system integration, and interconnect quality. As the quantum industry begins to make modular quantum implementations a reality, these critically needed tools must model the complex dynamics associated with QC fabrication, assembly, and operation if they are to accelerate the scaling of manufacturable quantum machines.

| Fig. 3: Quantum Chiplets placed within an MCM. Interconnects linking chiplets are indicated in yellow. |

It is important to note that when pursuing a modular approach, quantum systems do not suffer from the same linking problems as classical systems. In classical MCMs, inter-chip transmissions are often significantly more expensive than intra-chip transmissions in terms of speed and error. Conversely, latency and noise are not excessively high when transmitting quantum information chip to chip. The reasoning behind this is that superconducting QC chips, which have been experimentally demonstrated to be linked with high fidelity, feature qubits built from circuits that take more space on-chip than the transistors encoding classical bits. Qubit size creates an architectural constraint that forces physical qubit communication to be fixed between nearest neighbors, illustrated in Fig. 3, and this property prevents inter-chip links from suffering from data bandwidth constraints that are orders of magnitude worse than those on-chip. Further, smaller quantum chiplets offer the benefit of maximizing QC yield, and post-fabrication selection for MCM integration coupled with quantum MCM reconfigurability lays the groundwork for the discovery of chiplet architectures that vastly outperform their monolithic counterparts in terms of desirable chip properties, such as gate fidelity, and application performance.
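Post-fabrication selection can be sketched in a few lines. The example below assumes each fabricated chiplet has already been characterized by a single average gate fidelity and simply keeps the best ones for integration. The chiplet labels, fidelity values, and function name are hypothetical; a real selection flow would also weigh link quality and compatibility between neighboring chiplets.

```python
from statistics import mean
from typing import Dict, List


def select_chiplets(fidelities: Dict[str, float], k: int) -> List[str]:
    """Return the labels of the k chiplets with the highest measured fidelity."""
    if k > len(fidelities):
        raise ValueError("not enough chiplets to select from")
    ranked = sorted(fidelities, key=lambda label: fidelities[label], reverse=True)
    return ranked[:k]


# Hypothetical characterization data for a batch of five chiplets.
batch = {"A": 0.991, "B": 0.985, "C": 0.996, "D": 0.978, "E": 0.993}

selected = select_chiplets(batch, k=3)
batch_mean = mean(batch.values())
selected_mean = mean(batch[label] for label in selected)

print("selected chiplets:", selected)
print(f"mean fidelity of whole batch: {batch_mean:.4f}")
print(f"mean fidelity of selection:   {selected_mean:.4f}")
```

A monolithic chip has no equivalent step, since its qubits are accepted or rejected together, whereas an MCM can be assembled from only the better-performing parts of a batch.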

About the Authors:

Kaitlin N. Smith is a Quantum Software Manager at Infleqtion. From 2019-2022, she was a CQE/IBM Postdoctoral Scholar within EPiQC at the University of Chicago under the mentorship of Prof. Fred Chong. Kaitlin is on the academic job market this year.

Fred Chong is the Seymour Goodman Professor of Computer Architecture at the University of Chicago. He is the Lead Principal Investigator of EPiQC (Enabling Practical-scale Quantum Computation), an NSF Expedition in Computing, and a member of the STAQ Project.

Many ideas from this blog stem from conversations with the rest of the EPiQC team: Ken Brown, Ike Chuang, Diana Franklin, Danielle Harlow, Aram Harrow, Andrew Houck, John Reppy, David Schuster, and Peter Shor.

Disclaimer: These posts are written by individual contributors to share their thoughts on the Computer Architecture Today blog for the benefit of the community. Any views or opinions represented in this blog are personal, belong solely to the blog author and do not represent those of ACM SIGARCH or its parent organization, ACM.