Much of the recent explosion in interest in quantum computing has been driven by the arrival of the “Near-term Intermediate Scale Quantum” era (abbreviated “NISQ”), in which several experimental groups in academic and industrial labs are now able to implement quantum computers on the scale of around 50 qubits. These systems are imperfect – they are too small and too noisy to run well-known quantum algorithms such as Shor’s or Grover’s algorithm. Indeed, the major challenge in the NISQ era is algorithmic – we need to understand the power and potential applications of these near-term quantum systems.

John Preskill first described the coming of a watershed event in which a quantum computation experiment performs a well-defined task much faster than any classical computer for the first time. We’ve known since the early 90’s with the discovery of Shor’s algorithm that quantum computers are a (potentially) feasible model of computation that are capable of solving certain specific problems exponentially faster than any classical computer. More formally, this means that quantum computation violates the so-called “Extended Church Turing” thesis – the foundational principle of computation stating that all feasible models of computation can be simulated by the classical Turing Machine with at most a polynomial time slow-down. Until now, we’ve only had theoretical evidence that this is the case – since experimentalists have yet to implement Shor’s algorithm on a large scale.

Excitingly, claims of the first experimental violation of the Extended Church-Turing thesis have already started to surface: the group of John Martinis at Google/UCSB has announced the first such demonstration using their “Sycamore” experiment comprising of 53 superconducting qubits. Their findings have recently been published in the journal Nature.

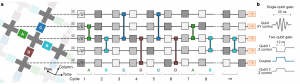

Figure 1: An example of the randomly generated quantum circuit that would be difficult to classically simulate. (source)

The task Martinis implements is known as “random circuit sampling”: pick a quantum circuit randomly (from a suitable distribution over quantum operations) and sample from the output distribution of this circuit. This is a task that has a straight-forward quantum algorithm: the quantum experiment simply runs the random quantum circuit and measures each qubit, producing a sample. However, it is not at all obvious that this should be a hard task for a classical computer. For one thing, the sort of computational tasks normally studied in complexity theory are decision problems: given an input, output yes or no. By contrast, the random circuit sampling task is a sampling task, in which the goal is to output samples from some complicated distribution over n bit strings. Moreover, by the so-called “Porter-Thomas property” of random quantum circuits, we expect the outcome probabilities of the circuit to be somewhat flat, but not totally uniform. Due to the effects of quantum interference, some output probabilities will be slightly heavier than others. If we run this random quantum circuit and measure we’ll almost certainly see many of these “heavy” outcomes: those with probability mass greater than typical in the outcome distribution. On the other hand, if we gave the description of the randomly chosen quantum circuit to a classical computer, sampling from the distribution or even listing several heavy outcomes would seem to require simulating the quantum circuit by explicitly computing outcome probabilities, which is believed to be a hard task for classical computation. This intuition can be formalized in a certain sense: recent work shows that computing an outcome probability of random quantum circuits is as hard as computing the output probability of an arbitrary quantum circuit, and is therefore intractable classically, under suitable complexity theoretic assumptions. Complementary evidence for classical hardness of random circuit sampling was given by Aaronson and Chen, who (roughly speaking) show a connection between outputting a list of heavy measurement outcomes and estimating the output probabilities of random quantum circuits. A major open question is to extend such quantum “hardness of sampling” proofs to work in a setting with realistic experimental noise in which we can only sample from an outcome distribution that is close to the ideal outcome distribution.

A second important issue is how to use experimental outcome statistics to verify that the outcome distribution of the noisy quantum experiment is close to the ideal outcome distribution. This is a highly nontrivial problem – unlike, say, decision problems in the complexity class NP, we do not expect a general efficient method for classically checking the correctness of this sampling problem. To make matters worse, due to the previously mentioned “flatness” of the ideal outcome distribution, we would have to run the experiment a very large number of times to have any chance of seeing the same outcome twice. Google’s verification procedure is based on a statistical test called “linear cross-entropy” that takes samples from the experiment and outputs a numerical score. If the score is sufficiently high we are to have confidence that the experiment is working properly. Linear cross-entropy essentially works by assigning high scores to outcome samples that occur with higher than typical probability in the outcome distribution of the random circuit. Under sufficiently strong assumptions about the experimental noise, such as if the noise is depolarizing, we can prove that scoring well in linear cross entropy certifies more traditional “closeness metrics” such as fidelity. Additionally, using concentration of measure arguments, we can show that linear cross-entropy can be computed using a reasonably small number of samples from the experimental device. The downside of linear cross entropy is that computing it requires computing the ideal output probabilities of the experimentally observed samples. Doing this is a hard computational task whose difficulty scales exponentially in the size of the system. This is a compromise we can afford to make for intermediate size quantum systems, but will not be acceptable for considerably larger system sizes.

Looking forward, rather than thinking of one watershed moment defined by a single experiment, it is more likely there will be a series of experiments that will gradually remove all uncertainty, or “loopholes”. For now, the most pressing loophole involves better characterizing the noise in these experiments and understanding the extent to which the theory – both the classical hardness and verification – can be made resilient to this noise. Finally, as we develop more confidence in our experiments, the next step is to find practically useful applications. Currently the most promising candidate application has been proposed by Scott Aaronson, who has developed a protocol that uses random circuit sampling experiments to generate random numbers whose randomness can be proven without trusting the quantum device. Such “certifiable randomness” is desirable in many cryptographic applications where random bits are often used as secret keys. We of course hope that this will be the first of many applications to arise from this new era in quantum computing.

In conclusion, we are currently at a critical juncture in the field of quantum computing in which quantum computational theory and experiment are being synergized in a revolutionary new way. The Google/UCSB result is certainly impressive and is evidence of significant technical progress. Even if it would take only 2.5 days rather than 10,000 years to simulate with a classical supercomputer, it is still the case that a small, 53-qubit machine is competitive with the largest classical supercomputer currently under construction (costing $0.5B). A vibrant quantum computing industry is emerging, with Google focused on pushing the boundaries of experimental prototypes and IBM developing a significant user base by deploying more manufacturable machines in its quantum cloud service. While it is unclear exactly what the future holds, it is quite clear we are well on the exciting road to a coming quantum age.

Bill Fefferman is an assistant professor in the computer science department at the University of Chicago. His primary research interest is quantum computing, focused on developing tools that provide the foundation for building the next generation of useful quantum algorithms, with implications for cryptography, physics, and computational complexity theory. Fefferman received his doctorate in computer science at Caltech and held research positions at the University of California at Berkeley and at the University of Maryland/National Institute of Standards and Technology (NIST).

Fred Chong is the Seymour Goodman Professor of Computer Architecture at the University of Chicago. He is the Lead Principal Investigator of the EPiQC Project (Enabling Practical-scale Quantum Computation), an NSF Expedition in Computing.

Disclaimer: These posts are written by individual contributors to share their thoughts on the Computer Architecture Today blog for the benefit of the community. Any views or opinions represented in this blog are personal, belong solely to the blog author and do not represent those of ACM SIGARCH or its parent organization, ACM.