In 1991, looking ahead to the 21st century, Mark Weiser envisioned a world where computers would weave themselves into the fabric of everyday life, becoming indistinguishable from it. This vision of ubiquitous computing has not only materialized but has evolved beyond Weiser’s initial predictions, particularly with the advent of Tiny Machine Learning (TinyML).

TinyML sits at the intersection of machine learning and embedded systems. It is the application of ML algorithms on small, low-power devices, bringing AI capabilities to the extreme edge of the network with benefits such as low-latency decision-making, energy efficiency, data privacy, and reduced bandwidth costs.

TinyML models already impact how we interact with technology. Keyword-spotting systems activate assistants like Amazon’s Alexa or Apple’s Siri. TinyML also offers a variety of bespoke, innovative solutions that contribute to people’s welfare. In hearing aids, TinyML filters out noisy environments to help patients with hearing impairments live more engaging lives. TinyML has also been used to detect deadly malaria-carrying mosquitoes, paving the way for millions of lives to be saved. These applications merely scratch the surface of TinyML.

This blog post provides an overview of TinyML. By exploring the evolution of TinyML algorithms, software, and hardware, we highlight the field’s progress, current challenges, and future opportunities.

Evolution of TinyML Algorithms

Early TinyML solutions consisted of simple ML models like decision trees and SVMs. But since then, the field has progressed to deep-learning-based TinyML models, typically small, efficient convolutional neural networks. However, there are still challenges to overcome. Small devices’ limited memory and processing power constrain the complexity of models that can be used. Tiny Transformers (e.g., Jelčicová and Verhelst, Yang et al.) and recurrent neural networks are used less frequently due to memory constraints. However, emerging applications are pushing this area of research.

To enable efficient model design without large engineering costs, many models are automatically tailored via Neural Architecture Search for various hardware platforms (e.g., MicroNets, MCUNetV1&2, µNAS, Data Aware NAS). Additionally, TinyML models are frequently quantized to lower-precision integer formats (e.g., int8) to reduce their size and leverage fast vectorized operations. In some cases, sub-byte quantization, such as ternary (three-valued weights, about 2 bits each) or binary (1-bit), is used for maximum efficiency. While TinyML primarily targets inference, on-device training offers continual learning and enhanced privacy, so research along these lines has started to emerge (e.g., PockEngine, MCUNetV3).
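To make the quantization step concrete, here is a minimal sketch of affine int8 quantization in plain Python. It is an illustration under our own assumptions, not the scheme of any particular framework: the function names, the per-tensor min/max calibration, and the 100,000-parameter model size are all hypothetical.

```python
def quantize_int8(values):
    """Affine-quantize a list of floats to int8. Returns (q, scale, zero_point)."""
    # The representable range must include 0.0 so that zero maps to an exact integer.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255.0 or 1.0  # fall back to 1.0 for an all-zero tensor
    zero_point = round(-128 - lo / scale)
    # Round to the nearest step, shift by the zero point, clamp to the int8 range.
    q = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize(q, scale, zero_point):
    """Map int8 codes back to approximate float values."""
    return [(x - zero_point) * scale for x in q]


def model_size_bytes(num_params, bits_per_param):
    """Storage needed for the weights alone, rounded up to whole bytes."""
    return (num_params * bits_per_param + 7) // 8


if __name__ == "__main__":
    weights = [-1.0, -0.5, 0.0, 0.25, 1.0]  # hypothetical weights
    q, scale, zp = quantize_int8(weights)
    print("int8 codes:", q)
    print("round trip:", dequantize(q, scale, zp))
    for bits in (32, 8, 2, 1):  # float32, int8, ternary (~2 bits), binary
        print(bits, "bits ->", model_size_bytes(100_000, bits), "bytes")
```

Each value is stored in one byte instead of four, at the cost of a rounding error of roughly one quantization step or less. Production toolchains typically add per-channel scales and calibration data, which this sketch leaves out.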

Evolution of TinyML Software

The ML software stack of embedded systems is often highly tuned and specialized for the target platform, making it challenging to deploy ML on these systems at scale. Various chip architectures, instruction sets, and compilers are targeted at different power scales and application markets. Therefore, TinyML frameworks must be portable, seamlessly compiling and running across various architectures while providing an easy path to optimize code for platform-specific features to meet the stringent compute latency requirements.

Frameworks like TensorFlow Lite for Microcontrollers (TFLM) attempt to address these issues. TFLM is an inference interpreter that works across a wide range of hardware targets. Its interpretation overhead is small, and in severely constrained applications it can be avoided altogether with compiled runtimes (e.g., microTVM, tinyEngine, STM32CubeAI, and CMSIS-NN), though this comes at the cost of portability. TinyML systems also use lightweight real-time operating systems (e.g., FreeRTOS), but given the tight constraints, applications are often deployed bare metal without an OS.
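The difference between an interpreter and a compiled runtime can be sketched with a toy example. This is not how TFLM or any of the runtimes named above are implemented; the operators, the `MODEL` description, and the function names are hypothetical stand-ins chosen only to show where the dispatch work happens.

```python
# Toy operators; real frameworks ship hand-optimized kernels instead.
def dense(x, w, b):
    return [sum(xi * wi for xi, wi in zip(x, row)) + bi for row, bi in zip(w, b)]


def relu(x):
    return [max(0.0, v) for v in x]


KERNELS = {"dense": dense, "relu": relu}

# Hypothetical model description: a list of (operator name, parameters).
MODEL = [
    ("dense", {"w": [[1.0, -1.0], [0.5, 0.5]], "b": [0.0, -1.0]}),
    ("relu", {}),
]


def interpret(model, x):
    """Interpreter style: parse the model and look up every kernel on each run."""
    for op_name, params in model:
        x = KERNELS[op_name](x, **params)
    return x


def compile_model(model):
    """Compiled style: resolve all kernels once, ahead of time.

    The returned function carries no operator table and no name lookups,
    but it is tied to this one model.
    """
    steps = [(KERNELS[name], params) for name, params in model]

    def run(x):
        for kernel, params in steps:
            x = kernel(x, **params)
        return x

    return run


if __name__ == "__main__":
    run = compile_model(MODEL)
    print(interpret(MODEL, [2.0, 1.0]), run([2.0, 1.0]))
```

Both paths compute the same result. The interpreter can load any model its kernel table supports, which is the portability argument; the compiled path drops the lookup and parsing work at run time, but must be regenerated whenever the model or target changes.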

Evolution of TinyML Hardware

Initial efforts in TinyML focused on running algorithms natively on existing, stock MCU hardware. The limited on-chip memory (~kBs) and often non-existent off-chip memory made this challenging and drove research on efficient model design. Arm Cortex-M class processors (e.g., Zhang et al., Lai et al.) are a popular choice for many TinyML applications, offering attractive design points for low-power IoT systems from the slim Cortex-M0/M0+ to the more advanced Cortex-M4/M7 with SIMD and FPU ISA extensions. Running TinyML on DSPs has been another early approach due to their low power consumption and ISAs optimized for signal processing (e.g., Gautschi et al.).
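A back-of-the-envelope calculation shows why kilobyte-scale memory shapes model design. The sketch below is a rough estimate under stated assumptions: the three-layer network, the 64 KiB flash and 16 KiB SRAM budgets, and the helper `fits` are all hypothetical, and the estimate ignores runtime overhead, scratch buffers, and wider bias types.

```python
# Hypothetical tiny CNN: parameter counts and activation sizes per layer.
LAYERS = [
    # 3x3 conv, 1 -> 8 channels, on a 32x32 input
    {"params": 3 * 3 * 1 * 8 + 8, "in_elems": 32 * 32 * 1, "out_elems": 32 * 32 * 8},
    # 3x3 conv, 8 -> 16 channels, on a 16x16 input (after pooling)
    {"params": 3 * 3 * 8 * 16 + 16, "in_elems": 16 * 16 * 8, "out_elems": 16 * 16 * 16},
    # dense layer, 8x8x16 inputs -> 10 classes
    {"params": 8 * 8 * 16 * 10 + 10, "in_elems": 8 * 8 * 16, "out_elems": 10},
]


def fits(layers, flash_bytes, sram_bytes, bytes_per_value):
    """Return (fits, weight_bytes, peak_activation_bytes).

    Weights are assumed to live in flash. Peak SRAM is approximated as the
    largest input-plus-output activation pair of any single layer.
    """
    weight_bytes = sum(layer["params"] for layer in layers) * bytes_per_value
    peak_act = max(layer["in_elems"] + layer["out_elems"] for layer in layers)
    peak_act_bytes = peak_act * bytes_per_value
    ok = weight_bytes <= flash_bytes and peak_act_bytes <= sram_bytes
    return ok, weight_bytes, peak_act_bytes


if __name__ == "__main__":
    FLASH, SRAM = 64 * 1024, 16 * 1024  # hypothetical MCU budget
    print("int8:   ", fits(LAYERS, FLASH, SRAM, bytes_per_value=1))
    print("float32:", fits(LAYERS, FLASH, SRAM, bytes_per_value=4))
```

Under these assumptions the int8 model fits, while the same network in float32 exceeds the SRAM budget because of its activations rather than its weights. On small MCUs, peak activation memory is often the binding constraint.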

As ML algorithm complexity has increased, accelerator hardware for embedded devices has begun to emerge. Arm’s Ethos-U microNPU family of processors, starting with the Ethos-U55, can deliver up to 90% energy reduction in about 0.1 mm². Other examples include Google’s Edge TPU and Syntiant’s Neural Decision Processors. The latter can perform inference for less than 10 mW in some cases and offer 100x efficiency and 10-30x throughput compared to existing low-power MCUs. TinyML solutions are now also integrated into larger systems, such as SoCs with always-on, low-power AI sensing hubs in mobile devices.

Advancing TinyML: Opportunities for Future Innovations

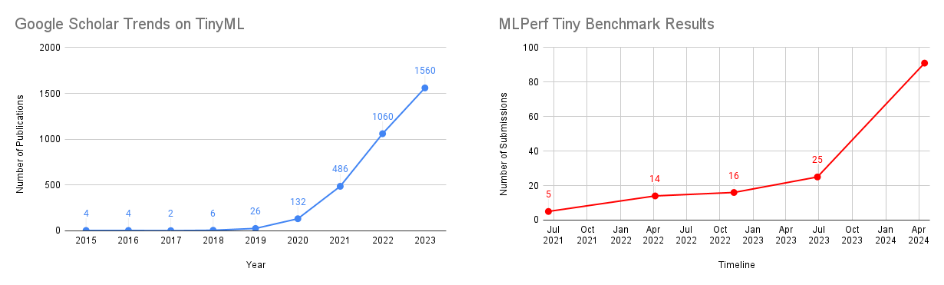

The growth of TinyML is evidenced by the increasing number of research publications, including hardware and software (extracted from Google Scholar), as shown in the figure above. The graph exhibits a noticeable “hockey stick effect,” indicating a significant acceleration in TinyML research and development in recent years. This rapid growth is further supported by the increasing number of yearly MLPerf Tiny performance and energy benchmark results, indicating the growing availability of commercialized specialized systems and solutions for consumers.

TinyML is growing, but numerous challenges remain. Addressing them is crucial for the widespread adoption and practical application of TinyML in various domains, from IoT and wearables to industrial sensors and beyond.

Emerging Applications

Augmented and virtual reality are budding application spaces with intense performance demands requiring even smaller devices to run more complex ML applications with new abilities (e.g., EdgePC, Olfactory Computing). Orbital computing is also an exciting area of research for TinyML because local data processing is needed to avoid massive communication overheads from space (e.g., Orbital Edge Computing, Space Microdatacenters).

Energy Efficiency

Energy harvesting, intermittent computing, and smart power management (e.g., Sabovic et al., Gobieski et al., Hou et al.) are necessary to reduce power and make “batteryless” TinyML the standard. Moreover, brain-inspired neuromorphic computing (e.g., NEBULA, TSpinalFlow) is being developed to be extremely energy-efficient, particularly for tasks like pattern recognition and sensory event data processing. This approach contrasts with traditional von Neumann architectures, and companies like BrainChip have already brought neuromorphic computing to commercial applications.
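The core idea of intermittent computing is that an inference may be interrupted by power loss at any time, so progress has to be committed to non-volatile memory as it is made. The simulation below is a minimal sketch of layer-granularity checkpointing. It is not taken from the works cited above, and the energy units, layer costs, and function name are hypothetical.

```python
def run_intermittently(layers, x, energy_per_cycle):
    """Run `layers` in order across simulated power cycles.

    `layers` is a list of (energy_cost, function) pairs. After each layer
    the result is committed to `nvm`, a dict standing in for non-volatile
    memory. Returns (output, number_of_power_cycles).
    """
    nvm = {"next_layer": 0, "activation": x}
    cycles = 0
    while nvm["next_layer"] < len(layers):
        cycles += 1
        energy = energy_per_cycle  # capacitor recharged by the harvester
        while nvm["next_layer"] < len(layers):
            cost, fn = layers[nvm["next_layer"]]
            if cost > energy_per_cycle:
                raise ValueError("a layer needs more energy than one full charge")
            if cost > energy:
                break  # simulated brown-out: volatile state is lost, nvm survives
            energy -= cost
            result = fn(nvm["activation"])
            # Commit the output and the progress counter together.
            nvm = {"next_layer": nvm["next_layer"] + 1, "activation": result}
    return nvm["activation"], cycles


if __name__ == "__main__":
    toy_layers = [
        (3, lambda v: [a * 2 for a in v]),
        (4, lambda v: [a + 1 for a in v]),
        (2, lambda v: [max(0, a) for a in v]),
    ]
    print(run_intermittently(toy_layers, [1, -2], energy_per_cycle=5))
    print(run_intermittently(toy_layers, [1, -2], energy_per_cycle=9))
```

With a small energy budget the inference takes several power cycles but yields the same output as an uninterrupted run. Real systems must also make the commit step atomic and weigh checkpoint cost against recomputation, which this sketch does not model.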

Cost-Efficiency

Cost is another critical element. Non-recurring engineering costs can be non-starters for TinyML applications. There has been initial work to see how Generative AI can be used for TinyML accelerator design to help minimize these expensive overheads. Other works exploring more economical alternatives to silicon, such as printed and flexible electronics (e.g., Bleier et al., Mubarik et al., FlexiCores), have demonstrated the ability to run simple ML algorithms using emerging technologies. Not only do flexible electronics provide ultra-low-cost solutions, but their form factor and extremely lightweight nature are suitable for many medical applications. However, brain-computer interface research (e.g., HALO, SCALO) has highlighted the current challenges of running complex processing algorithms (including ML-based signal processing) to decode or stimulate neural activity due to extreme constraints.

Privacy and Ethical Considerations

Privacy is critical as TinyML devices become widespread, especially in applications dealing with personal information. To address this, the paradigm of Machine Learning Sensors segregates sensor input data and ML processing from the wider system at the hardware level, offering increased privacy and accuracy through a modular approach. This then introduces the need for datasheets for ML Sensors that can provide transparency about functionality, performance, and limitations to enable integration and deployment. But even then, it is important to consider the materiality and risks associated with AI sensors, such as data privacy, security vulnerabilities, and potential misuse, to ensure the development of trustworthy and ethically sound TinyML systems.

Environmental Sustainability Challenges

Is TinyML Sustainable? It would be remiss not to mention that TinyML could lead to an “Internet of Trash” if we do not consider the system’s environmental footprint when developing billions of TinyML devices. The growth of IoT devices and their disposability can lead to a massive increase in electronic waste (e-waste) if not properly addressed. Moreover, the carbon footprint associated with the life cycle of these devices can be significant when accounting for their large-scale deployments. Therefore, assessing the environmental impacts of TinyML will become more important. This situation is analogous to the issue of plastic bags: while a single bag or TinyML device might have a negligible effect on the environment, the cumulative impact of billions of them could be substantial.

Getting Started with TinyML

TinyML-specific Datasets: Conventional ML datasets are designed for tasks that exceed TinyML’s computational and resource constraints, so the TinyML community has curated specialized datasets to better fit the tasks currently performed by TinyML. Notable examples include the Google Speech Commands dataset for keyword spotting tasks, the Multilingual Spoken Words Corpus containing spoken words in 50 languages, the Visual Wake Words (VWW) dataset for tiny vision models in always-on applications, and the Wake Vision dataset, which is 100 times larger than VWW and specifically curated for person detection in TinyML.

TinyML System Benchmarks: MLCommons developed MLPerf Tiny to benchmark TinyML systems. FPGA frameworks such as hls4ml and CFU Playground take this further by integrating MLPerf Tiny into a full-stack open-source framework, providing the tools necessary for hardware-software co-design. These benchmarks enable researchers and developers to collaborate, share ideas, and drive TinyML forward through friendly competition.

Educational Resources: Several universities, including Harvard, MIT, and the University of Pennsylvania, have developed courses on TinyML. Massive open online courses (MOOCs) on Coursera and HarvardX also exist. The open-source Machine Learning Systems book introduces ML systems through the lens of TinyML; it is open for community contributions via its GitHub repository. Collectively, these resources cover the entire MLOps pipeline in a classroom setting, widening access to applied machine learning, especially through TinyML4D, which supports TinyML in developing countries.

The TinyML Community: At the heart of all this activity is the TinyML Foundation, a vibrant and growing non-profit organization with over 20,000 participants from academia and industry, spread across 41 countries. The foundation sponsors and supports the annual TinyML Research Symposium to nurture research in this burgeoning area. It also supports an active TinyML YouTube channel featuring weekly talks and hosts annual competitions to bootstrap the community.

Conclusion

As we look towards the future of TinyML, it’s intriguing to consider how these technologies might reshape our daily lives. Qualcomm’s video, released on April Fools’ Day in 2008, offers a lighthearted yet thought-provoking glimpse into a world where TinyML, coupled with deeply embedded systems, could open up some wildly new possibilities.

Throughout history, we have seen powerful examples of small things making a significant impact. The transistor revolutionized electronics. The Network of Workstations in the 1990s demonstrated how hundreds of networked workstations could surpass the capabilities of centralized mainframes. Similarly, billions of TinyML models may one day replace our current insatiable trend toward increasingly large ML models. Our cover image serves as a visual metaphor for this potential, depicting many small fish overpowering a larger fish, illustrating the power of TinyML.

TinyML presents a unique opportunity. While tech giants are currently focused on large-scale, resource-intensive ML projects, TinyML offers a vast and accessible playground where individuals and small teams can innovate, experiment, and contribute to advancing machine learning in ways that directly impact people’s lives.

As we continue to develop new TinyML solutions, one thing is clear—the future of ML is tiny and bright!

About the Authors: Shvetank is a third-year Ph.D. student focused on open-source and flexible TinyML hardware architectures. Emil and Colby are third- and fifth-year Ph.D. students, respectively, focused on TinyML benchmarks, datasets, and model design. Matthew is a postdoc focused on the ethical and environmental implications of TinyML deployments in the real world. Vijay is the John L. Loeb Associate Professor of Engineering and Applied Sciences at Harvard University, who is passionate about TinyML and believes that the future of ML is (indeed) tiny and bright.

Disclaimer: These posts are written by individual contributors to share their thoughts on the Computer Architecture Today blog for the benefit of the community. Any views or opinions represented in this blog are personal, belong solely to the blog author and do not represent those of ACM SIGARCH or its parent organization, ACM.