Context and Continuation

In Part 1 of this two-part post, I looked at some of the existing and possible avenues for computer architecture research relating to tracking uncertainty in computations, using the blackscholes benchmark from the PARSEC suite of benchmark applications as a working example. The previous post ended with the question “Can Computer Architectures Make This Easier or Faster?” My goal with this second post is to provide context and insight to get you closer to thinking the answer is “yes”. In this post, I’ll outline some existing and possible future paths for computer architects in computation with uncertainty. Just as architectural support and microarchitectural implementations of floating-point number representations improved the ease of implementation of real-valued computations, architectural and microarchitectural support for representations of uncertainty could enable new approaches to trustworthy computation on empirical data. I’ll start with a brief continuation of the PARSEC blackscholes benchmark example and will then use Independent Component Analysis (ICA) as a complementary example to introduce the challenge of dealing with time- and ensemble-correlated values.

One Alternative: Processor Architectures and Microarchitectures for Efficient Automated Monte Carlo Evaluation

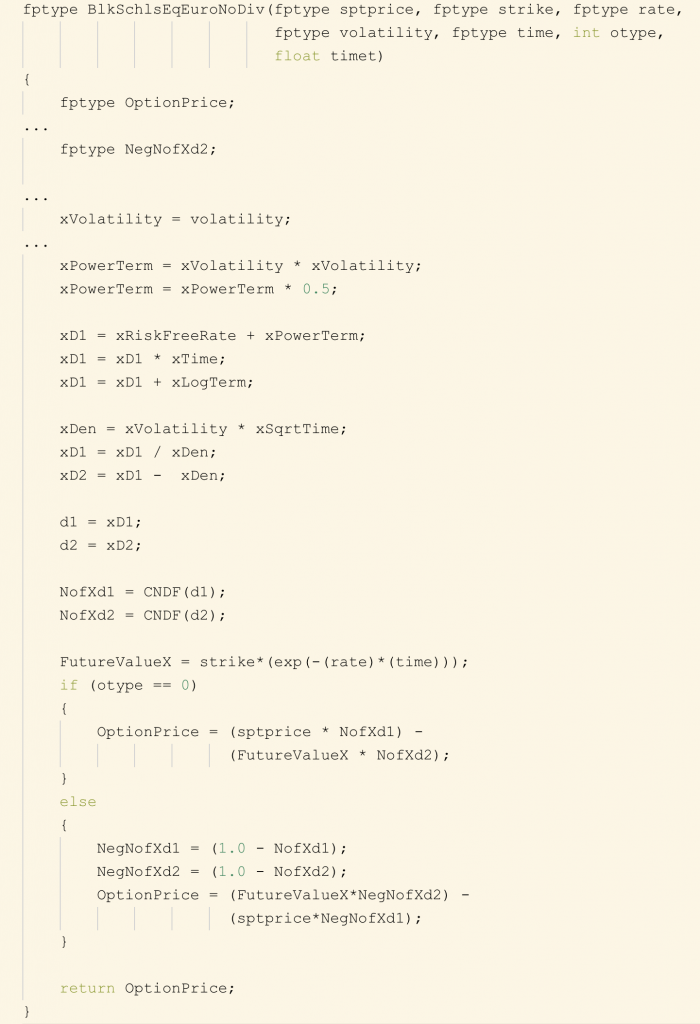

In Part 1, the method I used to study how uncertainty in the inputs to the PARSEC blackscholes benchmark propagated through its implementation, used repeated evaluation of the benchmark, each time feeding it with inputs drawn from the input distribution we wanted to propagate through the blackscholes benchmark. To make all of this more concrete, here is the function from the PARSEC blackscholes benchmark that implements the closed-form solution to the option price calculation. In the same way that you will often want to evaluate a floating-point implementation of the function BlkSchlsEqEuroNoDiv rather than one where the parameters are constrained to integer values, it could be useful to evaluate BlkSchlsEqEuroNoDiv with parameters such as volatility taking on distributions representing uncertainty in their empirical estimation.

An abridged version of the function

An abridged version of the function BlkSchlsEqEuroNoDiv for calculating the option price from the PARSEC [3] blackscholes benchmark. The ellipsis denote lines of code I have deleted to abridge the example to highlight arithmetic steps involving the input variable volatility. If the input volatility is a distribution of values, then the arithmetic operations in the body of the function will lead to a distribution for the result OptionPrice.

Architectures to speed up Monte Carlo evaluation could take many forms and there is already widespread use of FPGA-accelerated computation kernels in domains ranging from quantitative finance to molecular dynamics modeling. An argument you could make against repeated evaluation of the complete program is that not all of the instructions require uncertainty propagation and as a result you could make a case that repeated whole-program execution could be made more efficient.

One way to address the above criticism is to explicitly track uncertainty associated with arithmetic operations during program execution either by introducing new uncertainty-tracking instructions or by performing uncertainty-tracking for a subset of the instructions in an unmodified ISA within a microarchitectural implementation of an existing ISA.

A Second Alternative: Processor Architectures and Microarchitectures for Efficient Update of Variances

In Part 1, I mentioned the work of Manousakis et al. which uses an approximation for propagating uncertainty, represented by a variance, through an expression. The technique, which I referred to as the linear uncertainty propagation approximation, expresses the variance of a function or arithmetic operation in terms of the variances of its parameters and the partial derivatives of the operation or function with respect to those parameters.

Like the other methods which I describe in this post, this method could be implemented either as an ISA-exposed instruction set extension, with new instructions for variance-tracking arithmetic, or it could be implemented beneath the ISA, with the variance tracking implemented within a microarchitecture without exposing it to the ISA.

Whether implemented as an ISA-exposed extension or as a microarchitectural technique, the method would give us variances for each value and would not give us complete probability distributions for the uncertainty as we saw with the Monte Carlo evaluation in Part 1. Despite this limitation, such architecture support for variance tracking across arithmetic operations could be valuable for some applications. Because the representation of uncertainty is a single number (the variance), you could argue it is in some ways conceptually similar to another alternative: interval analysis.

A Third Alternative: Processor Architectures and Microarchitectures for Efficient Interval Analysis

Rather than track the uncertainty of values as their variances, a processor could simply track uncertainty in values as an error bound interval using interval analysis. The IEEE 1788-2015 standard for interval arithmetic defines a standard for such interval arithmetic on IEEE 754 floating-point data within a microprocessor.

Challenges for All Three Alternatives

There are many challenges to implementing uncertainty tracking in future computer architectures whether above or below the ISA boundary and whether the direction taken is whole-program Monte Carlo, estimating only variances, estimating uncertainty intervals, or some efficient method for estimating complete uncertainty distributions. One of the larger challenges is dealing with correlations. As one simple example, for a register Rs with a given uncertainty distribution the distribution for the result Rd of instruction MUL Rn, Rn, Rd should have a non-negative distribution support.

For a more nuanced example, consider the problem of computing the mixing matrix in independent component analysis (ICA) for a mixture of signals. The input in a typical implementation on a programmable digital computing system will be a timeseries of samples, where each sample is a measurement of the mixture of signals. As all measurements have uncertainty, we would ideally have an uncertainty associated with each sample. The representation of the uncertainty could be in the form of a variance, which would map well to the method used in the work of Manousakis et al. that I described in Part 1 and to the second alternative computer architecture I’ve listed above. The per-sample uncertainty could also be in the form of an interval, which would map well to the third alternative computer architecture I listed previously. And, the uncertainty could be in the form of a complete empirical or parametric analytic probability distribution, which would in turn map well to the first alternative computer architecture I listed above.

The overall challenge in executing a program with such inputs having associated uncertainties, will be to continuously track uncertainties associated with data (in whatever uncertainty representation is chosen) from the time series of inputs all the way to the final output mixing matrix. Sub-challenges will include how to empirically quantify per-sample uncertainty in the first place, how to deal with correlations across members of an ensemble of data items and correlations over time, and how to do all of this in a way that is both efficient and architecturally scalable.

About the Author: Phillip Stanley-Marbell is an Associate Professor in the Department of Engineering at the University of Cambridge where he leads the Physical Computation Laboratory and a Faculty Fellow at the Alan Turing Institute where he co-leads the Interest Group on AI in Resource- and Data-Constrained Environments. His research focuses on investigating methods to use properties of physical systems to improve the efficiency of computation on data from nature.

Disclaimer: These posts are written by individual contributors to share their thoughts on the Computer Architecture Today blog for the benefit of the community. Any views or opinions represented in this blog are personal, belong solely to the blog author and do not represent those of ACM SIGARCH or its parent organization, ACM.